I <3 Kernels

Published:

In this post I’m going to go through the kernel trick and how it helps or enables various tools in statistics and machine learning including support vector machines, gaussian processes, kernel regression and kernel PCA. This is going to be a bit of a long one, I’ll probably split it up later but for now … sorry? {}

Table of Contents

- Linear Methods

- Kernel Trick

- Awesome Kernel-Based Methods

- Gaussian Processes

- Gallery of Kernels

- Conclusions / Pros and Cons of Kernel Methods

- More Examples

Prerequisites

Sorry in advance if this post isn’t as accessible as my other ones. Since it’s quite a long one I’m going to presume some knowledge going in. Primarily,

- dot products + inner products and that one is a subset of the other

- Projections on to lines and planes from a Linear Algebra perspective

- Lagrange Multiplier problems

- and as usual, basic calculus (primarily multivariable derivatives of and with scalars and vectors) and some matrix algebra.

And yes all the links are Wikipedia, they’re good pages. And if Wikipedia is there, why not use it.

General Resources

- Cambridge series in statistical and probabilistic mathematics: Asymptotic statistics series number 3

- Sorry that this one isn’t open access, but I was lucky enough to have access through my institution and it’s great. If you have institutional access or can afford it I’d highly recommend it (as of 2025)

- High-Dimensional Statistics - A Non-Asymptotic Viewpoint - Martin J. Wainwright

- Also sorry that this one isn’t open access either, same situation as above.

- Jeff Calder: “An intro to concentration of measure with applications to graph-based l… (Part 1/2)” - Institute for Pure & Applied Mathematics (IPAM)

- Not directly relevant, but is something to think about with all the talk of high-dimensional statistics on this page

- Steinwart - “Support Vector Machines”

- Rasmussen - “Gaussian Processes for Machine Learning”

Intro

The goal of this blog post is to make you the reader aware or appreciate more kernel-based methods. For that I’m going to structure the post as 1. Some linear-ish methods that show promise for being even better in non-linear contexts but seem computationally expensive, 2. How the kernel trick allows us to get around this computational bottlenecks, and finally 3. The final form of the kernel-based methods and those that simply don’t work without it (GPs).

I’m not going to presume knowledge of these methods beforehand as much as possible, but I am going to do a bit of a whirlwind tour, so if any of them seem interesting to you and you don’t think the level of detail I provide is good enough, I’ve tried to include some independent resources for each that maybe will provide another perspective or more detail for every sub-section (the ‘Resources’ sections).

And as should be stated in all of my posts (but to be clear isn’t), I use notation through which I understand everything or simply want consistent notation throughout a given post so will likely differ from standard notation for a given topic(s). If you think I should make a given idea or object different notation either to make it clearer or because it’s simply incorrect please email me at lc[LastNamelowerCase]@[googleAddress].com or [FirstName].[Lastname]@[my institution].edu

Linear Methods

Fisher Linear Discriminatory Analysis (KDA I)

Resources

- StatQuest: Linear Discriminant Analysis (LDA) clearly explained.

- Linear discriminant analysis - Wikipedia

- Basics of Quadratic Discriminant Analysis (QDA) - Kaggle

- FISHER’S DISCRIMINANT ANALYSIS - Sanjoy Das

- The Use Of Multiple Measurements In Taxonomic Problems - Fisher

- Kernel Fisher discriminant analysis

- Has a nice concise section on the linear case

The Gist

Fisher Linear Discriminatory Analysis or simply FLDA1 is a supervised method (meaning we know the class labels) for creating a linear projection (single value in 1D, line in 2D, plane in 3D) that separates two or more classes of objects. Here we will focus on the separation of just two classes.

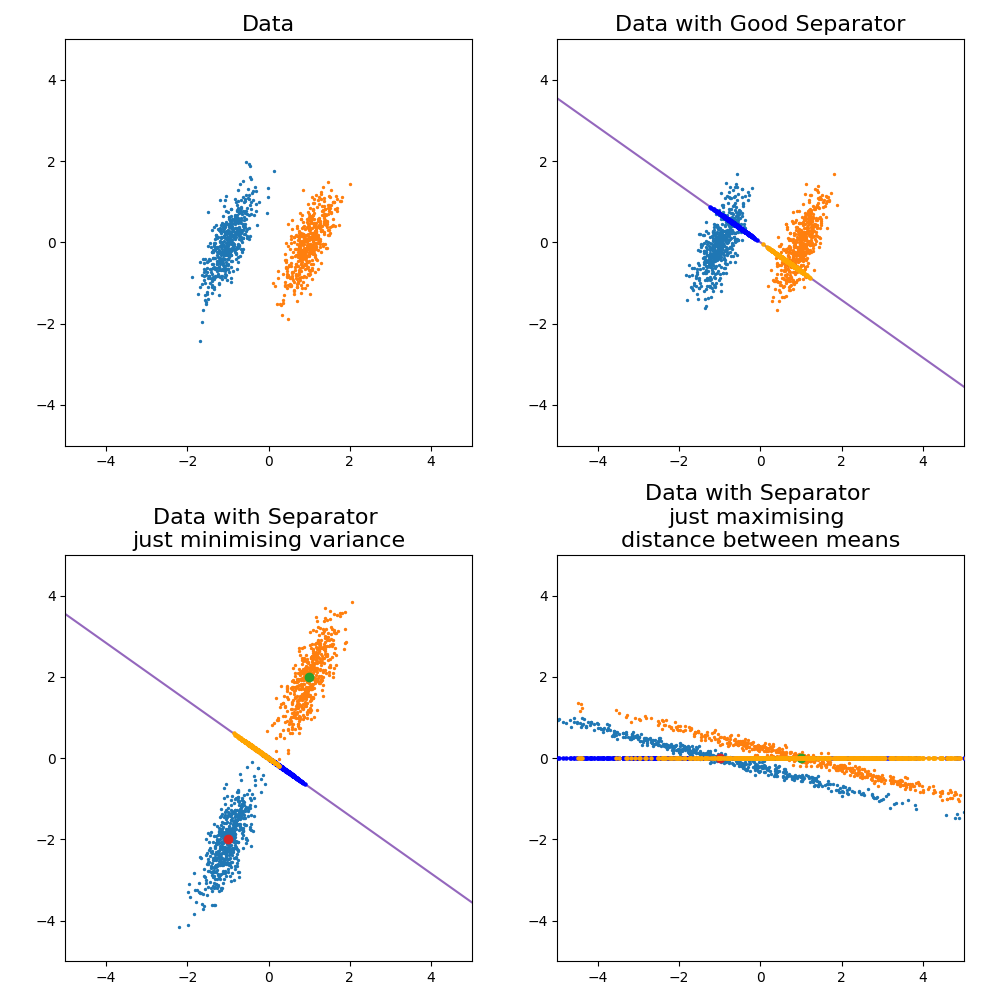

The key idea behind FLDA is that you wish to construct some linear combination of the input variables to demarcate the two classes that you project the objects onto. You do this by 1. maximising the distance or variance between the two groups on the projected space and 2. minimising the variance of each group in this space. Below are some examples of this in action before we get into it to emphasise that both conditions must be satisfied to get good discrimination.

In a very non-statiscian way I’m just going to throw the formula here and leave the derivation to another day (as I will do for quite a few things in this post).

\[\begin{align} Z = \frac{\sigma^2_{\textrm{inbetween}}}{\sigma^2_{\textrm{within}}} = \frac{(\vec{w}\cdot(\vec{\mu}_1 - \vec{\mu}_0))^2}{\vec{w}^T\left(\Sigma_0 + \Sigma_1\right)\vec{w}} \end{align}\]The \(\vec{w}\) is a vector in the direction of line (or linear operator on variables for generality) that is used in the above as a projection operator, to note the statistical measures on the line. There is any analytical solution to this where,

\[\begin{align} \vec{w} \propto (\Sigma_0 + \Sigma_1)^{-1}(\vec{\mu}_1 - \vec{\mu}_0). \end{align}\]Where the solution as increases or decreases in the magnitude, the direction vector still return the same line object. For the sake of a cool gif and for later on when we generalise this method, let’s look at how it looks when you try to optimise for \(\vec{w}\) and compare it to the exact solution above.

Let’s compare the optimisation result to my “guesses” above.

And voila, my good guess wasn’t as good as I thought and my bad guesses were in fact … bad.

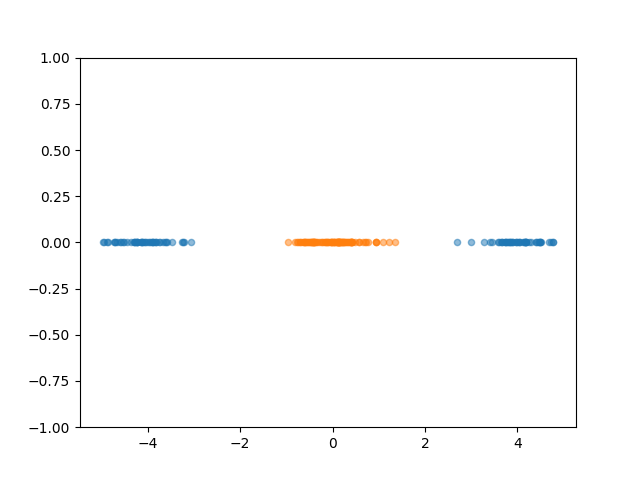

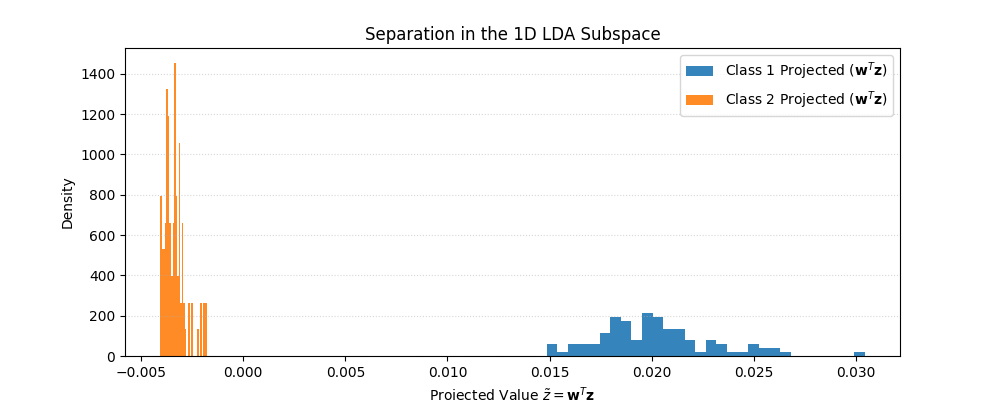

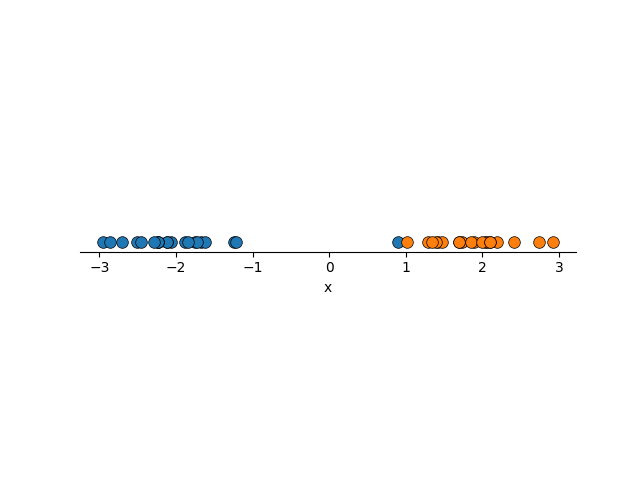

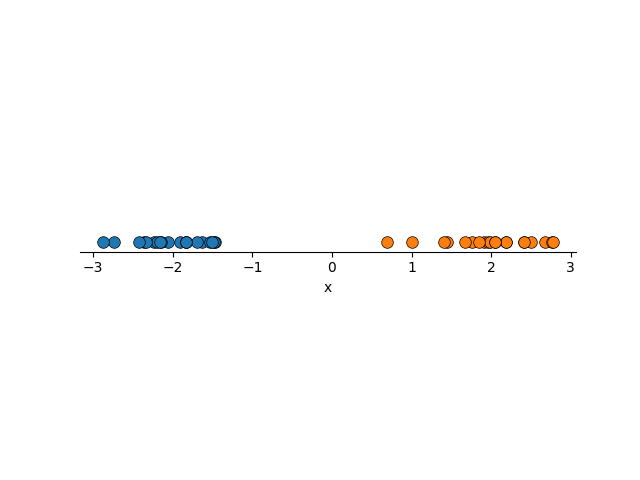

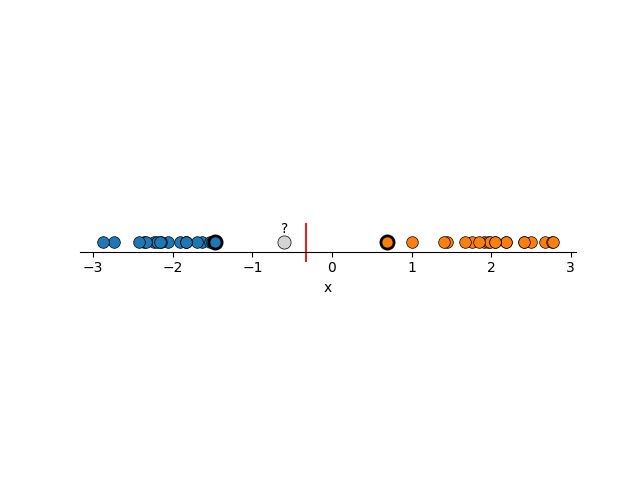

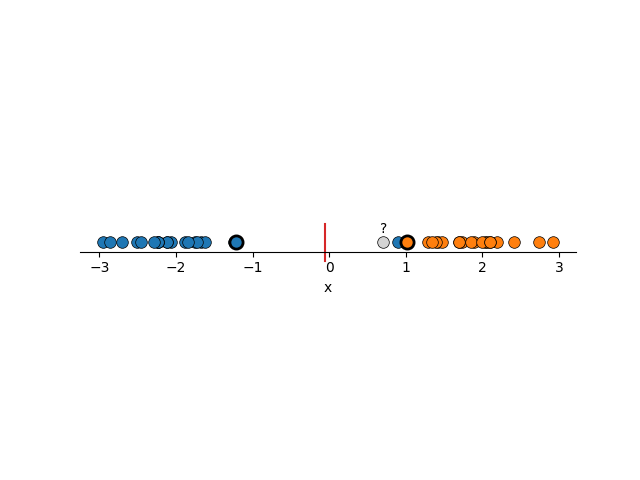

Switching gears a little bit, let’s simplify the problem and look at a 1D example, still 2 groups.

Now, if we wanted to do FLDA, we can only create a single value to discriminate the groups. But no matter what value you pick, there is no single value that will perfectly discriminate the groups despite it visually looking very simple.

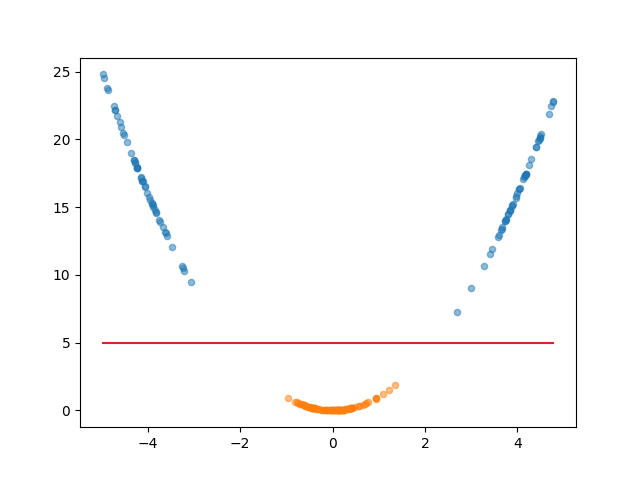

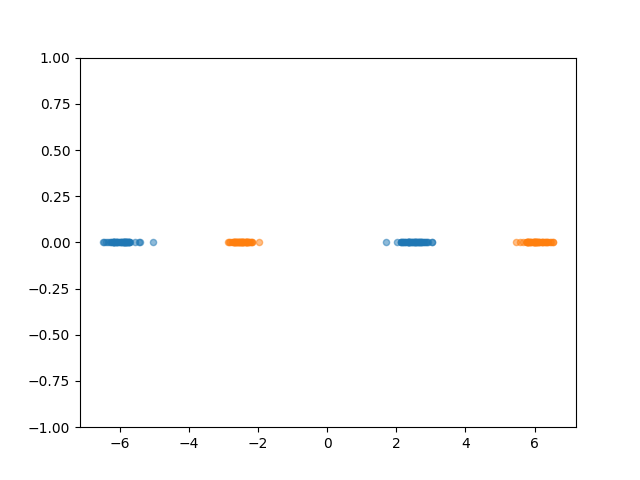

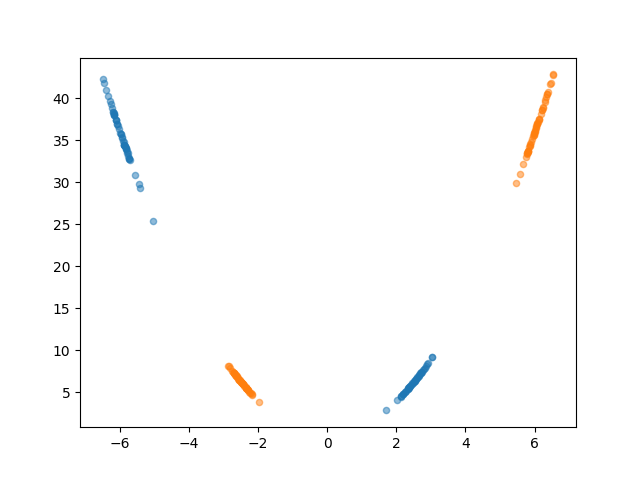

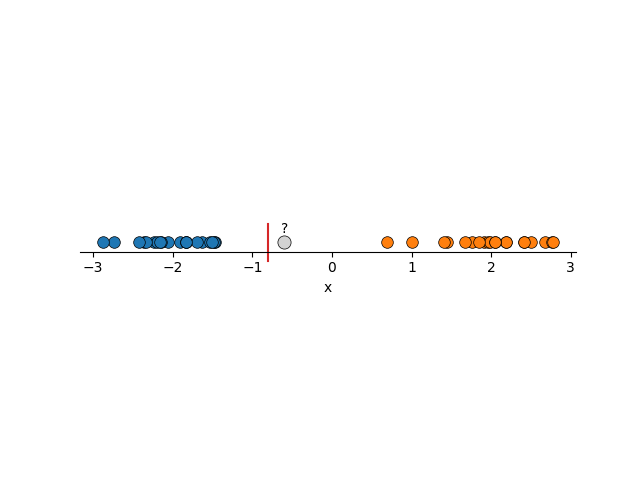

The trick is to2 increase the dimension of the space, projecting the 1D dimensional data \(x\) into two dimensions where the second is \(x^2\).

In this space, it is an extremely simple task of constructing a linear combination of the variables (again just a line in 2D) that the data can be projected onto and be separated. Additionally, I’ll start plotting the decision boundary that this implies in the space, which both separates the data and shows what direction it needs to go to be projected onto the LDA space.

Mathematically, nothing really changes compared to before, but we can slightly rephrase it so that it’s easier to generalise to more dimensions.

So we go back to maximising the variance \(B\)etween the class and \(W\)ithin for data from group 0, \(x_0\), and group 1 \(x_1\).

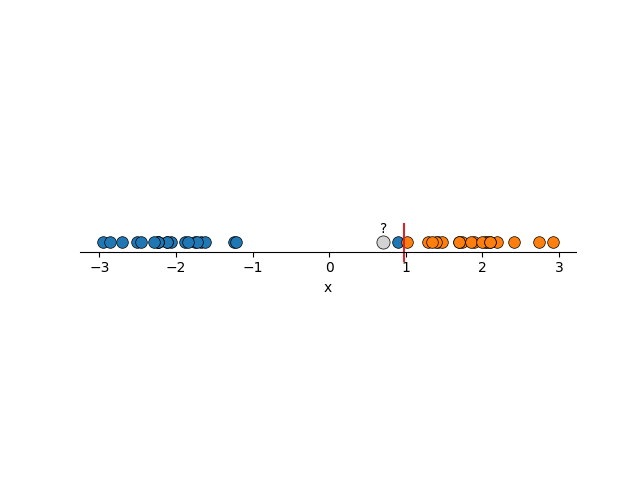

\[\begin{align} T &= \frac{\vec{w}^T S_B \vec{w}}{\vec{w}^T S_W \vec{w}} \\ &= \frac{\left(\vec{w} \cdot \left(\hat{x}_1 - \hat{x}_0\right) \right)^2}{\sum_i \left( \vec{w}^T (x_0^i - \hat{x}_0) \;\; (x_0^i - \hat{x}_0)^T \vec{w}\right) + \sum_j \left(\vec{w}^T (x_1^j - \hat{x}_1) \;\; (x_1^j - \hat{x}_1)^T \vec{w}\right)} \end{align}\]Okay great now we can separate groups like that. But let’s add one more cluster of points, and project it into the same space

The projection doesn’t really help us again, no matter what line you pick you can’t separate the groups. But what if we keep going? Projecting it into the 3D space with the third dimension being \(x^3\) gives us the plot below (interactive).

Where we’ve constructed the line and planar boundary which are just linear combinations of the variables we are considering \(x^3\), \(x^2\) and \(x^1\). I like to think of the plane here as the surface over which the samples radially converge onto the LDA projection.

You can see that increasing the dimensionality allows us to separate groups with more complicated morphologies, or to handle morphologies were we don’t know what projection will work a priori, but the computational cost quickly explodes in the case of even low dimensional data. e.g. If our data is just two dimensional \(x\) and \(y\) then the “cubic” projected space would contain \([1, x, y, xy, x^2, y^2, xy^2, x^2y, x^3, y^3]\) (with the 1 included to highlight how the lower dimensional contributions stick around). So we went from \(\mathbb{R}^2\) to \(\mathbb{R}^9\) (excluding 1) and you can imagine how bad this would get in the case of 4D data like \(x, y, z\) and time, and a degree 4 polynomial projection … but note that all the optimisation and the projections only go through dot products …

Here’s the code for calculating the FLDA projection cost function for an arbitrary set of samples and dimensionality.

def calculate_scatter_matrices(samples1: np.ndarray, samples2: np.ndarray):

mean1 = np.mean(samples1, axis=0)

mean2 = np.mean(samples2, axis=0)

N1 = samples1.shape[0]

N2 = samples2.shape[0]

N_total = N1 + N2

total_mean = (N1 * mean1 + N2 * mean2) / N_total

centered1 = samples1 - mean1

S1 = np.dot(centered1.T, centered1)

centered2 = samples2 - mean2

S2 = np.dot(centered2.T, centered2)

S_W = S1 + S2

diff_mean1 = (mean1 - total_mean)[:, np.newaxis]

diff_mean2 = (mean2 - total_mean)[:, np.newaxis]

S_B = N1 * np.dot(diff_mean1, diff_mean1.T) + N2 * np.dot(diff_mean2, diff_mean2.T)

return S_W, S_B

def lda_objective_function(w, S_W, S_B):

numerator = w.T @ S_B @ w

denominator = w.T @ S_W @ w

J_w = numerator / denominator

return -J_w

Linear Support Vector Machines (SVM I)

Resources

- Support Vector Machines Part 1 (of 3): Main Ideas!!!

- 16. Learning: Support Vector Machines - MIT OCW

- CS229 Lecture notes - Support Vector Machines

The Gist

Support vector machines are another supervised linear method where we want to construct some way to separate data, except unlike FLDA where we want to develop the projection, instead, we want to figure out the boundary, then we can figure out the projection later if we want. I’m just gonna come out and say that I’m stealing most of my examples from the MIT OCW lecture above.

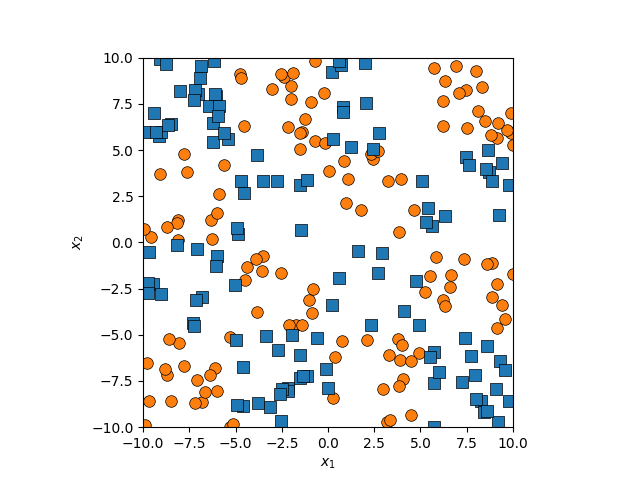

The use case is similar to before except I want to raise two particular very similar issues in FLDA. Let’s say we have the two datasets below.

Using FLDA we would construct boundaries similar to the below, and we want to introduce some new data that is unlabelled.

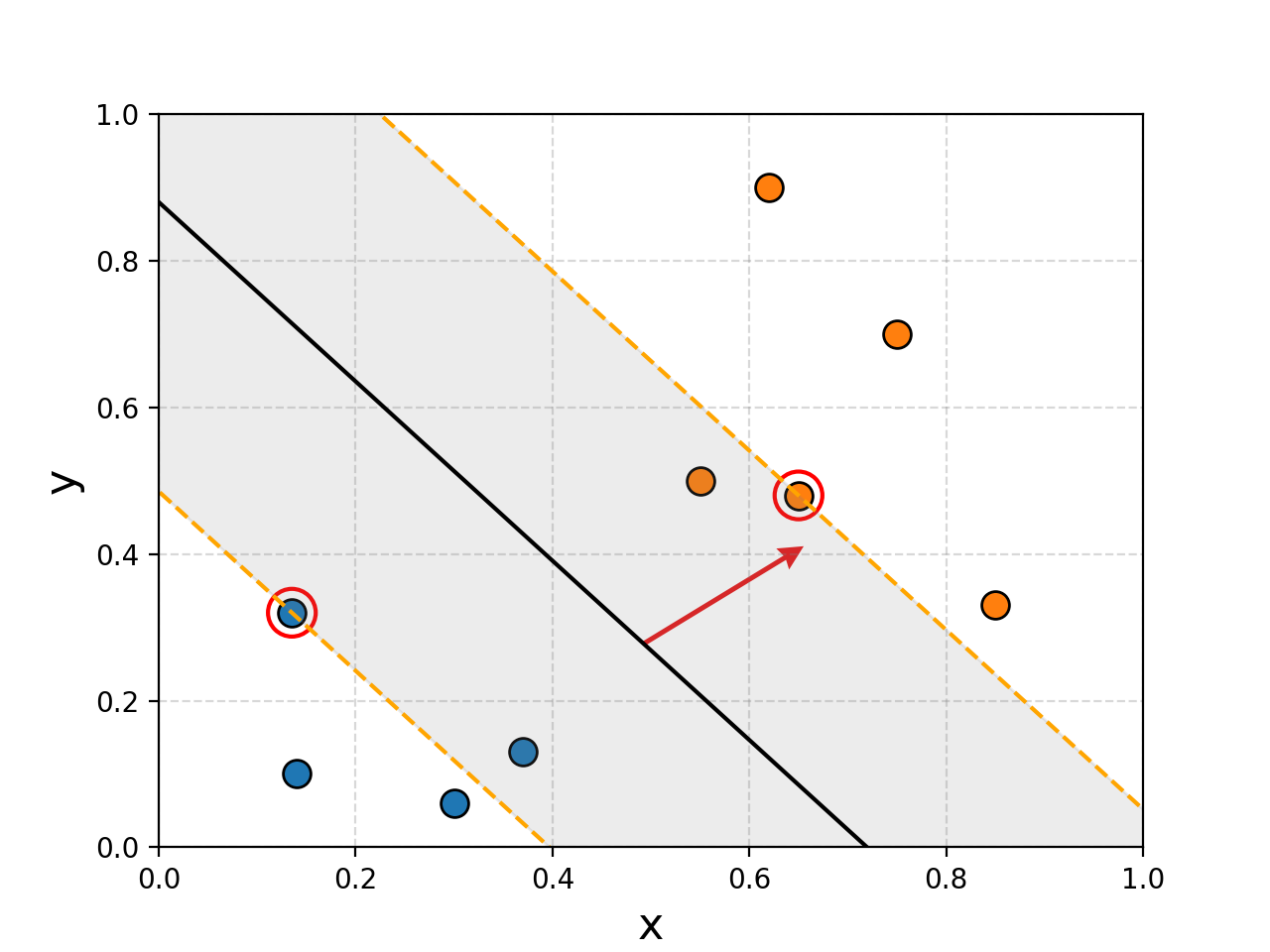

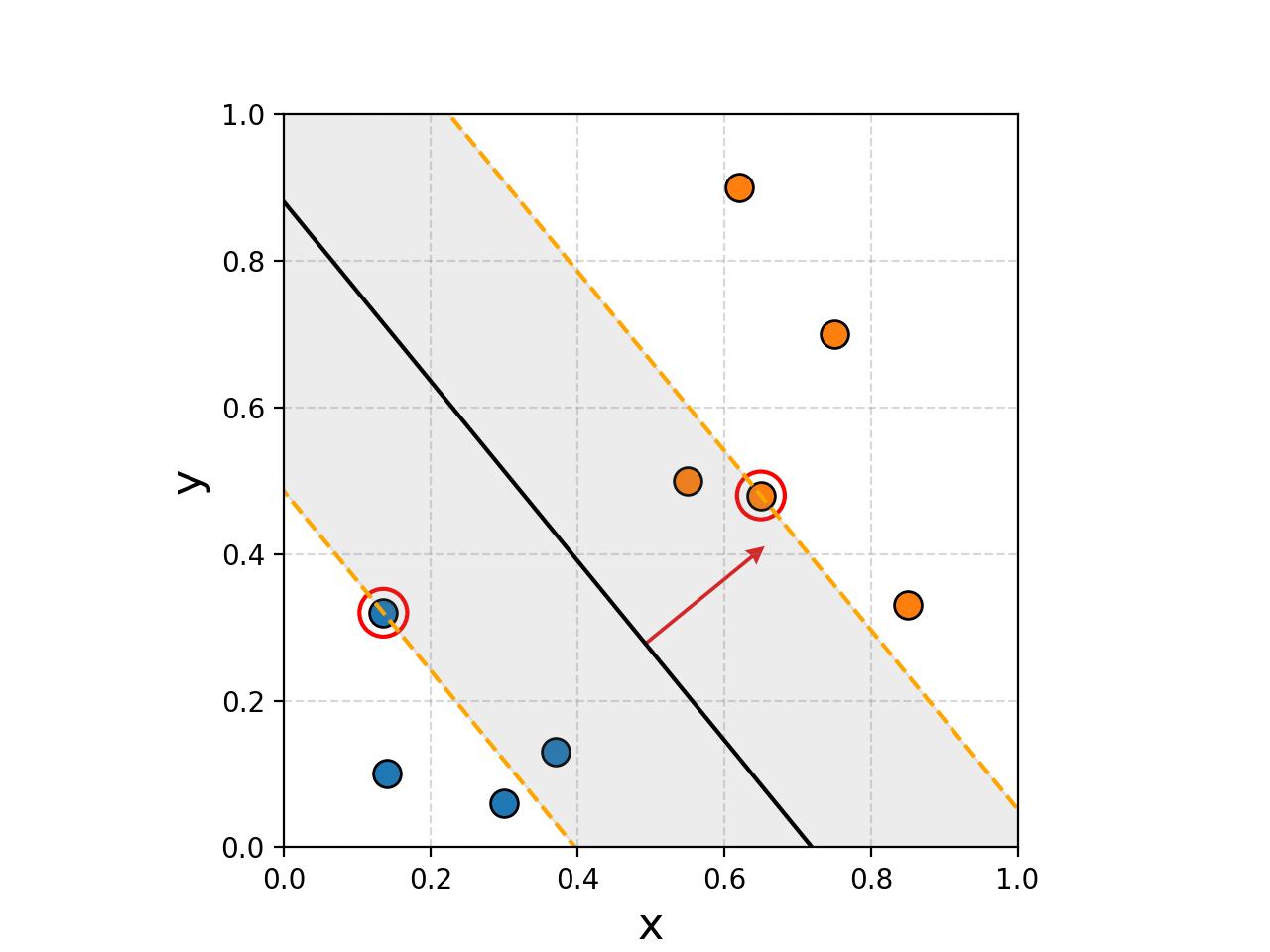

In both cases, it seems obvious to us what the new points should be labelled as, but using two lines that are perfectly fine under FLDA, both would be mis-characterised. The solution, is to 1. Maximise the region around the boundary and 2. not care so much about mis-labelling some points in our training data. Using these principles you can imagine that we would get something more reasonable.

To me, this seems much more reasonable. And you might have noticed that I’ve highlighted a couple points, reason being that the decision boundary that is constructed here is basically just the average position of these two points. Removing any other points wouldn’t make the boundary any better or worse (except maybe the blue point in the right example). We call these points support vectors and it’s where the name for Support Vector Machines come from. I’ll circle back to why this is in a bit.

Before jumping into Support Vector Machines, instead I want to start with Support Vector Models and how they are constructed. (From here I’m pretty closely “following” the MIT OCW lecture.)

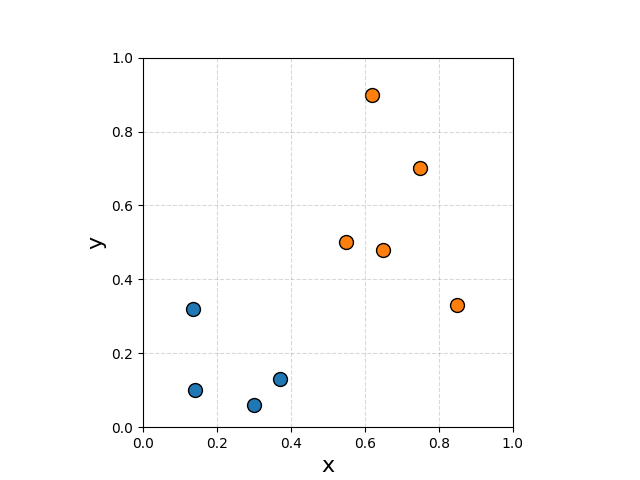

To make the visualisations less boring let’s go back to 2D. I spent too much time coming up with some useful randomish values.

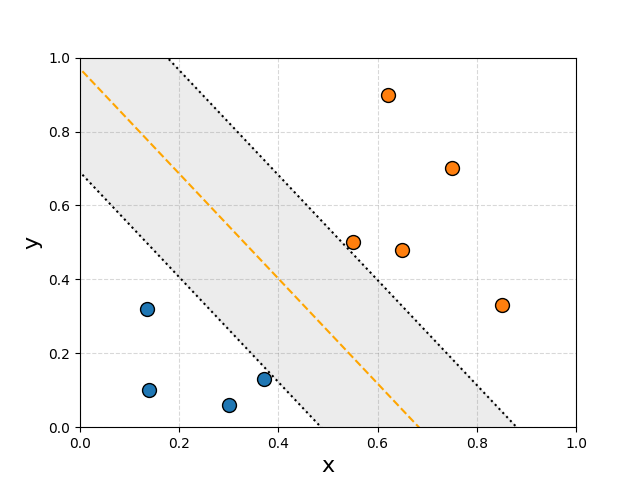

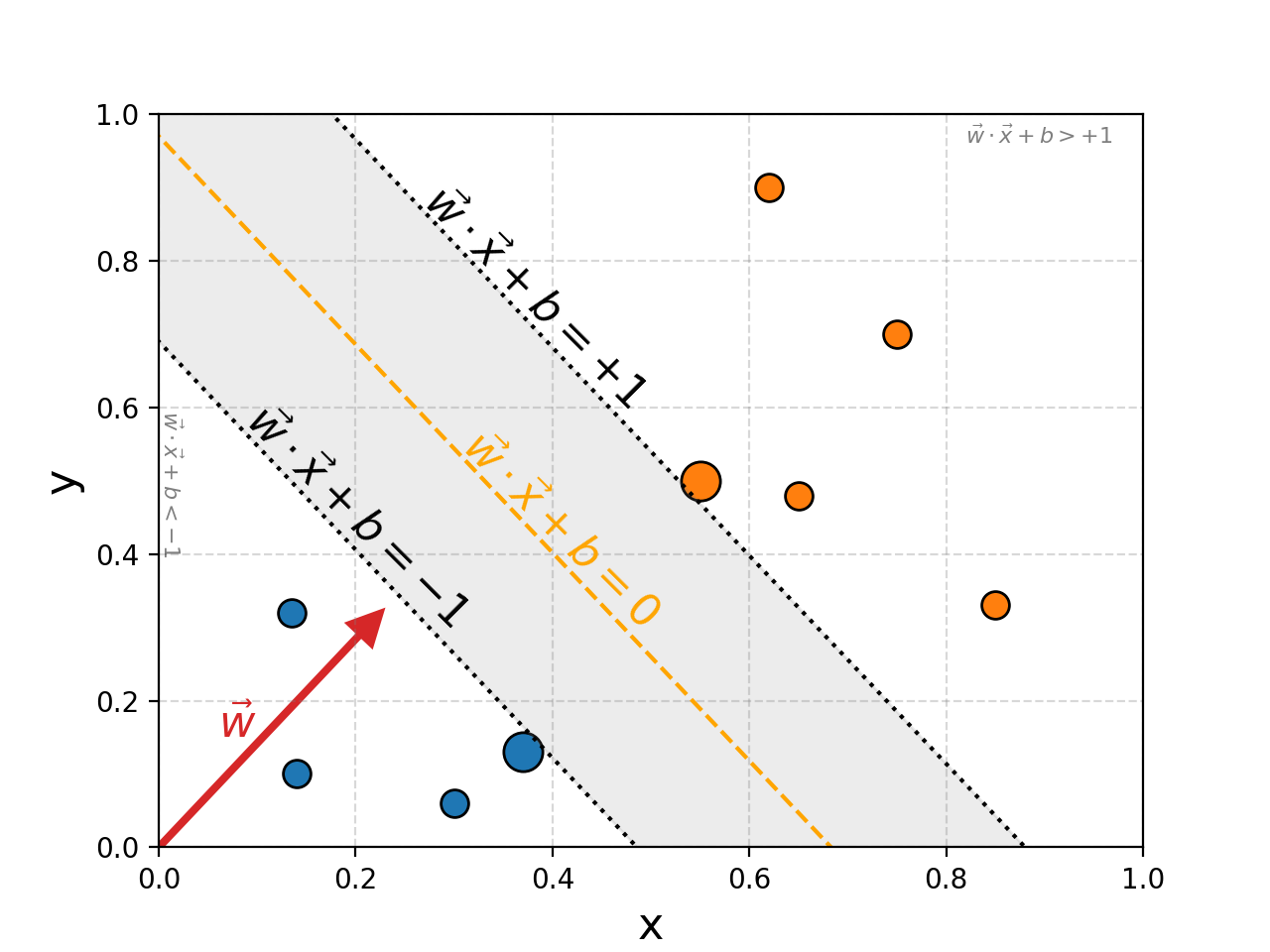

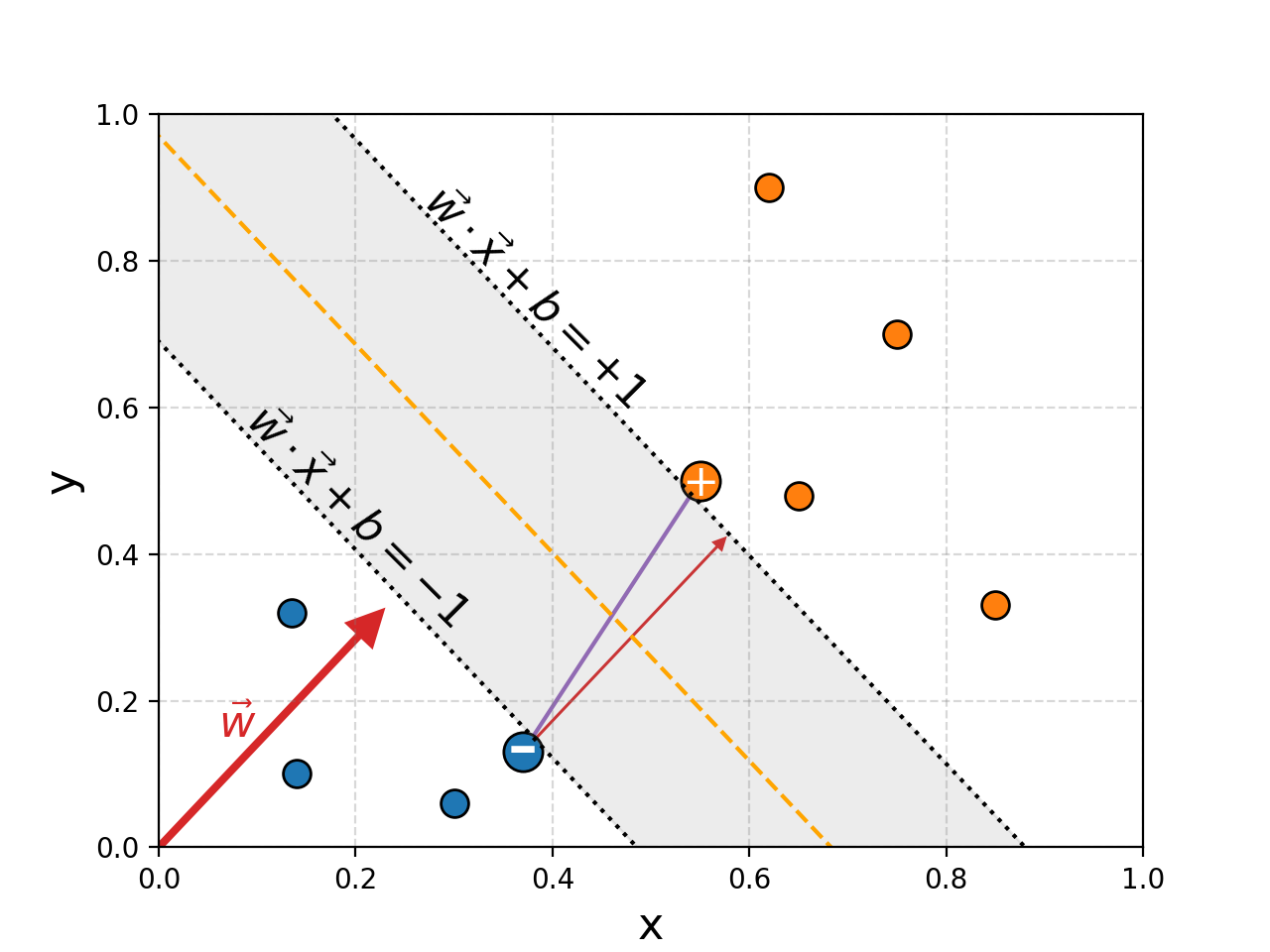

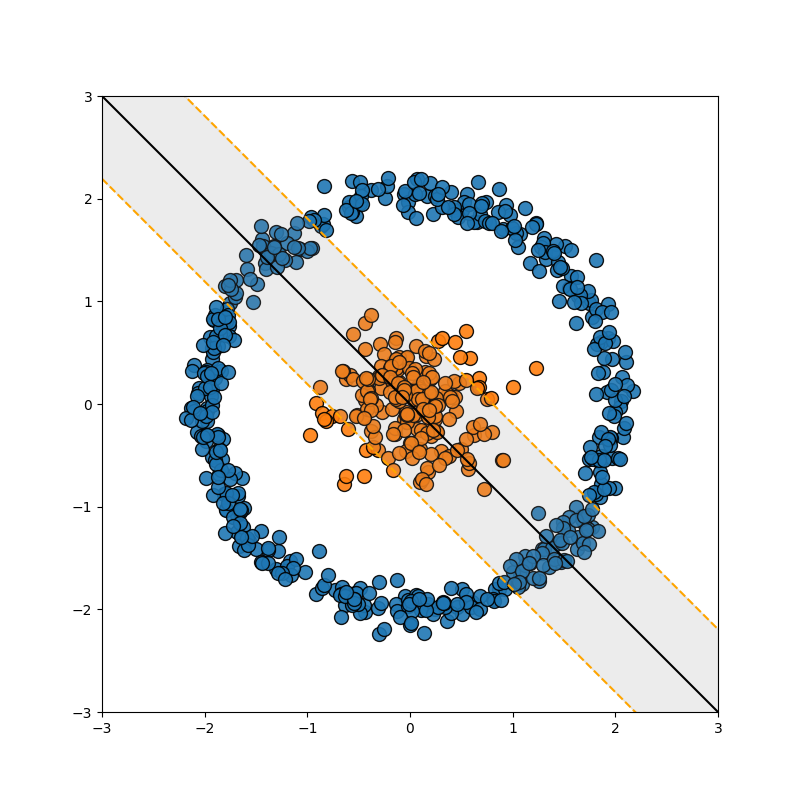

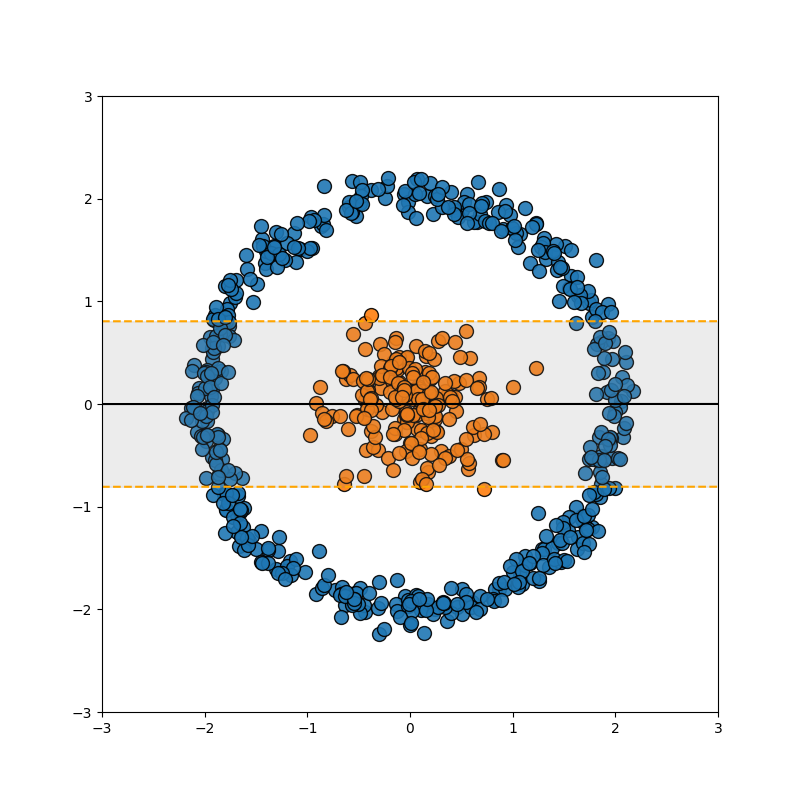

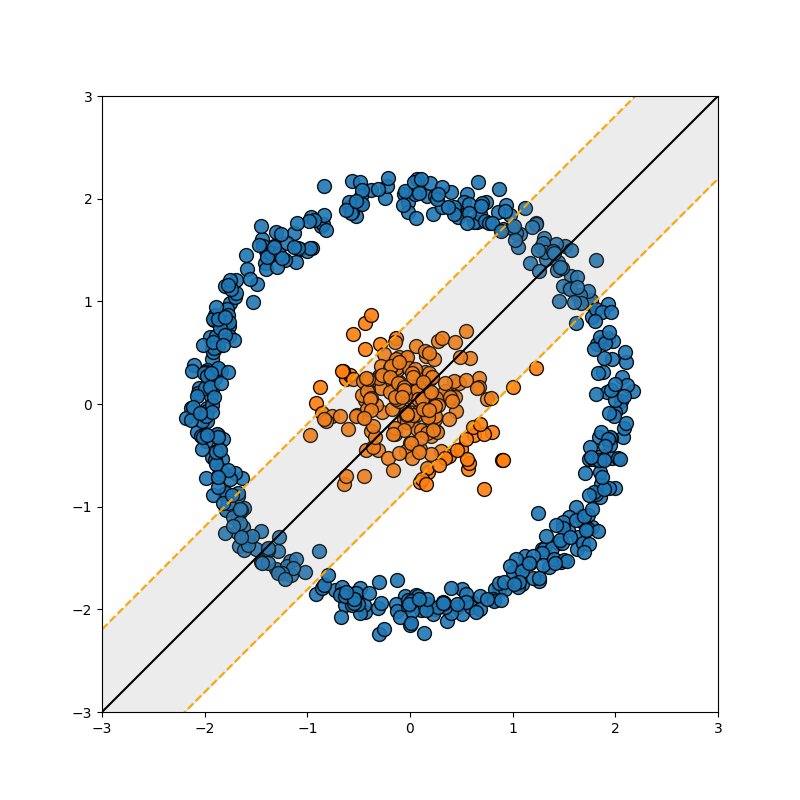

If we wanted to create a boundary that is maximally wide that distinguishes the two classes it would look something like this.

The region would then be defined either by the direction vector of the orange/yellow dashed line or the normal vector. For now let’s say we want to use a normal, the length doesn’t matter, just the direction. This then lets us very nicely define whether a point \(\vec{x}\) is contained in the region by a projection onto this line \(\vec{w}\cdot\vec{x} + b\). We define the yellow line to be the 0 point on the projection \(\vec{w}\cdot\vec{x} + b = 0\), and the boundaries to correspond to \(\pm1\), \(\vec{w}\cdot\vec{x} + b = \pm 1\).

The area above the region will then be larger than \(1\), \(\vec{w}\cdot\vec{x} + b > 1\), and below will be less than \(-1\), \(\vec{w}\cdot\vec{x} + b < -1\).

Okay, so we have the kind of goal we want, the question is how do we mathematically recover it (can’t just do it ‘by’ eye in 4D, or 16D for that matter). Let’s outline what we know.

We know for points from group 1/orange points, that they evaluate to larger than or equal to \(1\),

\[\begin{align} \vec{w} \cdot \vec{x}_1 + b \geq 1, \end{align}\]and for points from group 2/blue points, that they evaluate to less than or equal to \(-1\),

\[\begin{align} \vec{w} \cdot \vec{x}_2 + b \leq -1. \end{align}\]Keeping track of two separate expressions like this is kind of annoying though. So let’s introduce a mathematical ‘nicety’ where we label points from group 1 with \(+1\) and those from group 2 with \(-1\). Hence, for all points (\(\forall i\in [1, N]\) \(N\) being the total number of points),

\[\begin{align} y_i(\vec{w} \cdot \vec{x}_i + b) \geq 1. \end{align}\]That’s better! Now, the single mathematical object that we wanted to maximise was the width of the region.

The width of the region is dictated by the support vectors that I’ve highlighted with a ‘+’ for group 1 and ‘-‘ for group 2.

To figure out the width of the region we just need to project the distance between these two points and that of the perpendicular direction vector defining the region, this is show with a smaller red arrow in the above figure. It’s defined by a simple dot product as well (these dot products seem to be showing up a lot in this blog (hint hint)) with the normalised direction vector.

\[\begin{align} \textrm{Width} = (\vec{x}_+ - \vec{x}_-) \cdot \frac{\vec{w}}{||\vec{w}||} \end{align}\]With a bit of algebra, and using the fact that the points lie at the lines at which \(\vec{w}\cdot\vec{x}_+ + b = +1\) and \(\vec{w}\cdot\vec{x}_- + b = -1\) to go from line 2 to 3.

\[\begin{align} \textrm{Width} &= (\vec{x}_+ - \vec{x}_-) \cdot \frac{\vec{w}}{||\vec{w}||} \\ &= \frac{1}{||\vec{w}||} \left( \vec{x}_+ \cdot \vec{w} - \vec{x}_- \cdot \vec{w} \right)\\ &= \frac{1}{||\vec{w}||} \left( (1-b) - (-1-b) \right)\\ &= \frac{2}{||\vec{w}||} \end{align}\]So the goal is to maximise \(\frac{2}{\vert\vert\vec{w}\vert\vert}\), which is equivalent to maximising \(\frac{1}{\vert\vert\vec{w}\vert\vert}\), which is equivalent to minimising \(\vert\vert\vec{w}\vert\vert\), which is finally equivalent to minimising \(\vec{w}^2\), cool!3. But now instead of us wondering what the width is, we’re wondering what \(\vec{w}\) is…

From here we view the problem a little differently, we have an object that we wish to minimise, \(w^2\), subject to a bunch of constraints, \(y_i(\vec{w} \cdot \vec{x}_i + b) \geq 1\), … sounds like a Lagrange Multiplier problem. Jumping straight into it.

\[\begin{align} L = {\color{blue} \frac{1}{2} \vec{w}^2} + {\color{red} \sum_i \alpha_i \left(y_i (\vec{w}\cdot \vec{x}_i + b) -1 \right) } \end{align}\]In \({\color{blue}\textrm{blue/left term}}\) is the thing we are minimising, and in \({\color{red}\textrm{red/right term}}\) are the constraints with \({\color{red} \alpha_i}\) the set of lagrange multipliers. The cool thing here is that \(\vec{w}\) is just some linear combination of the points in the two sets! That’s it, no weird error functions or logs or exponentials, just a simple linear combination (one might even say dot product).

Then we do the same ol’ song and dance. Taking derivatives with respect to \({\color{red} \alpha_i}\) just gives us back our constraints, so focusing in on \(\vec{w}\) and \(b\).

\[\begin{align} \frac{\partial L}{\partial \vec{w}} = {\color{blue} \vec{w}} + {\color{red} \sum_i \alpha_i y_i \vec{x}_i } = 0 \\ \rightarrow {\color{green} \vec{w} = - \sum_i \alpha_i y_i \vec{x}_i} \end{align}\] \[\begin{align} \frac{\partial L}{\partial b} = {\color{red} \sum_i \alpha_i y_i} = 0 \\ \rightarrow {\color{purple} \sum_i \alpha_i y_i = 0 } \end{align}\]Highlighting the important bits in green and purple. We can then sub this back into our loss \(L\)/lagrange equation.

\[\begin{align} L = \frac{1}{2} &\left({\color{green} - \sum_i \alpha_i y_i \vec{x}_i}\right) \cdot \left({\color{green} - \sum_j \alpha_j y_j \vec{x}_i}\right) \\ &+ \sum_i \alpha_i \left(y_i \left[\left({\color{green} - \sum_j \alpha_j y_i \vec{x}_j}\right)\cdot \vec{x}_i + b\right] -1 \right) \\ = \frac{1}{2} &\left({\color{green} \sum_i \alpha_i y_i \vec{x}_i}\right) \cdot \left({\color{green} \sum_j \alpha_j y_j \vec{x}_j}\right) \\ &- \left({\color{green} \sum_i \alpha_i y_i \vec{x}_i}\right) \cdot \left({\color{green} \sum_j \alpha_j y_j \vec{x}_j}\right) \\ &+ b{\color{purple} \sum_i \alpha_i y_i} - \sum_i \alpha_i \\ L = \frac{1}{2} &\sum_i \left [ \alpha_i y_i \left(\sum_j \alpha_j y_j \, \vec{x}_i \cdot\vec{x}_j \right) - \alpha_i \right] \end{align}\]Staying to the theme, the final calculation comes down to a simple dot product between the data points \(\vec{x}_i \cdot\vec{x}_j\). So unlike FLDA we don’t have an analytical solution, but we do have a function that we can optimise.

The aim of the game is now to optimise over \(\alpha_i\) to minimise \(L\).

Translating this into the code.

def supportvecmodel_costfunc(alphavec, datavecs, labelvec):

K = np.dot(datavecs, datavecs.T) # wink wink

alpha_y = alphavec * labelvec

P = np.outer(alpha_y, alpha_y) * K

quadratic_term = 0.5 * np.sum(P)

linear_term = -np.sum(alphavec)

cost = quadratic_term + linear_term

return cost

You can hopefully see that the margins are significantly wider despite not having 100% accuracy when discriminating between the two groups. This is a pretty simple example though, let’s see what it looks like when we increase the number of samples.

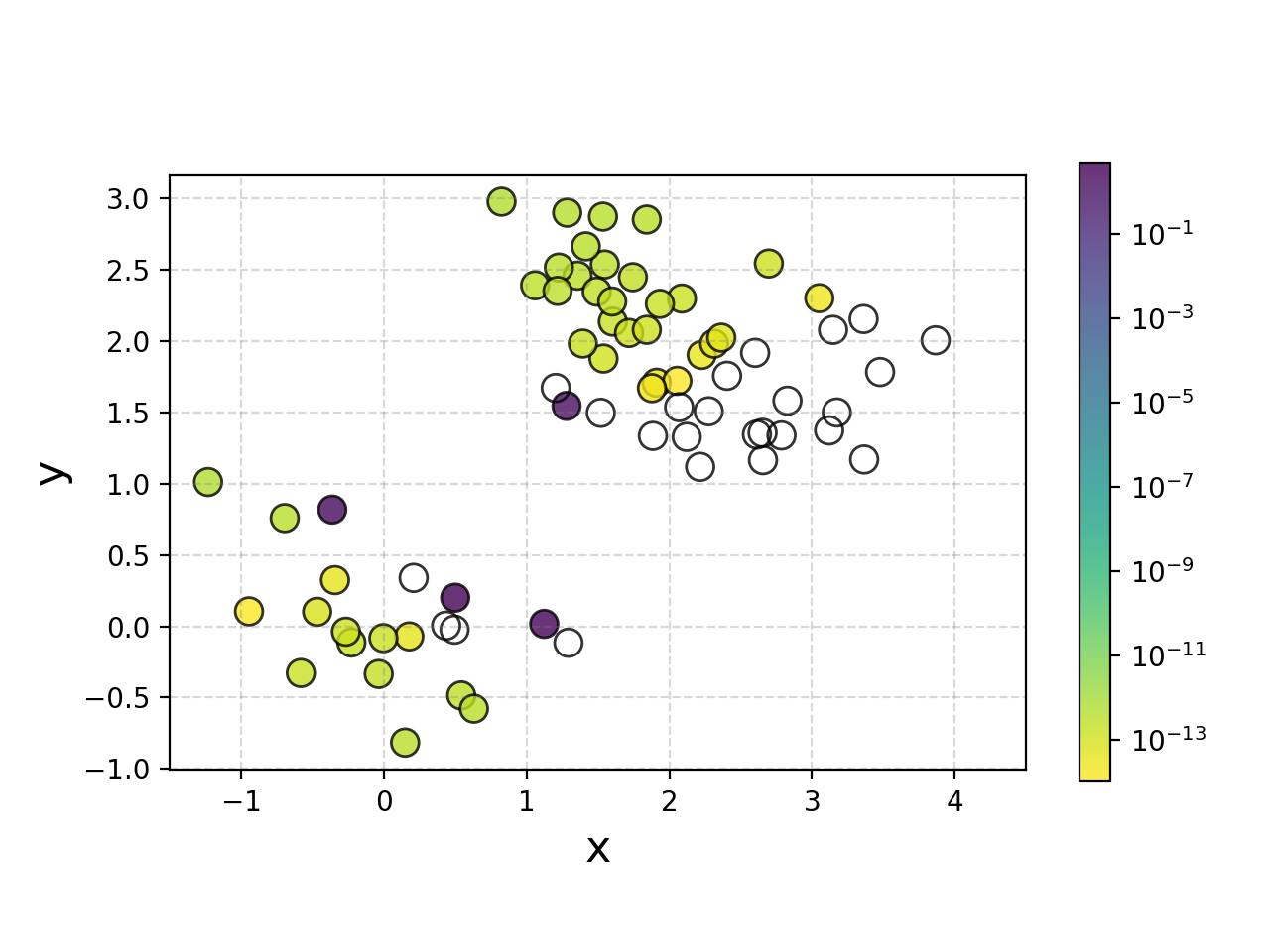

We can also plot the weights for how much each point is contributing to the decision boundary for fun, and broadly it makes sense.

So this is great, but as noted in the previous section, data isn’t always separated so nicely. For example…

Well, we can use the exact same trick we used in the FLDA case, we add a dimension which is some polynomial combination of the variables (for those that already know where I’m going, this corresponds to a degree 2 polynomial kernel).

And to be clear, this uses the exact same math as above, just now \(x^2+y^2\) is another dimension in \(\vec{x}_i = [x, y, x^2+y^2]\) with the same ol’ dot products. This is great! Right? Well then what about this example?

It might seem a bit contrived but you could imagine a study where they’re looking at the interaction of two drugs. A little of both does nothing, a good amount of one and a lil of the other yields good outcomes, but too much of both leads to unwanted drug interactions and bad outcomes. The quadratic projection wouldn’t help here, and other low order polynomial projections likely wouldn’t either. So once again.

Ridge Regression (KRR I)

Resources

- Bayesian Linear Regression : Data Science Concepts - by my man ritvikmath

- Data analysis recipes:Fitting a model to data - ArXiV:1008.4686 - David Hogg, Jo Bovy, Dustin Lang

- Ordinary least squares

- Ridge regression

- Generalised Kernel Machines

- Gauss–Markov theorem

- Bias-Variance Trade Off

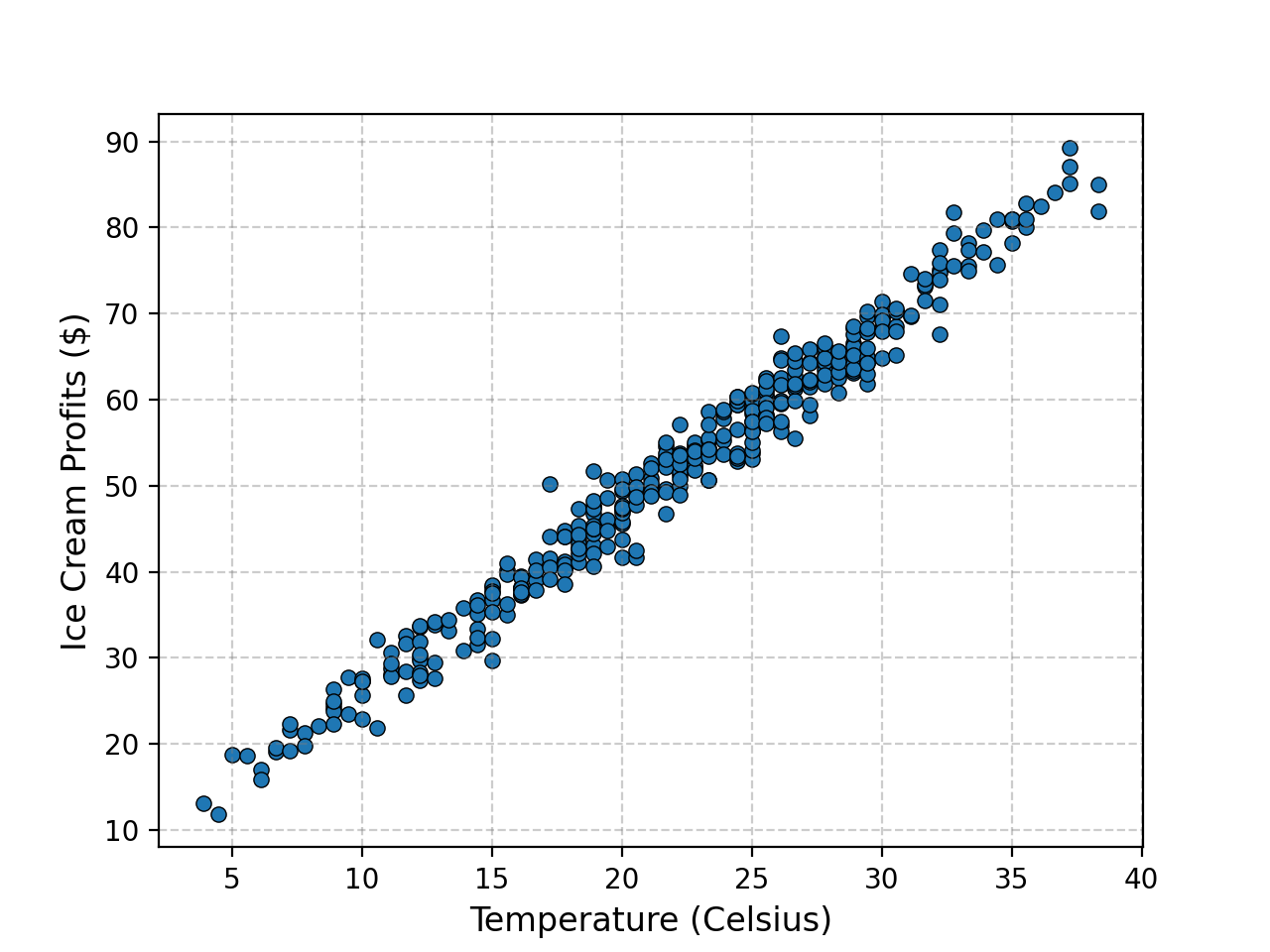

- Temperature and Ice Cream Sales Kaggle dataset by Raphael Manayon

The Gist

Switching gears, or more switching cars at this point, let’s talk about regression. Plain ol’ linear regression. You have a dependent variable, \(y\), an independent variable \(x\), a set of samples of each \(\{y_i\}\) and \(\{x_i\}\), and you believe they are related via \(y= \alpha_1 x + \alpha_0\) (excuse the pointlessly mathy way of describing things, it will be useful later).

This is exactly what I did in my first blog post but in that case I went straight into the Bayesian probabilistic route. If we just wanted a best fit however, we don’t need to go through all the effort.

We can just define a linear system of matrices. Defining,

\[\begin{align} Y = \left[\begin{matrix} y_1 \\ y_2 \\ \vdots \\ y_N \end{matrix} \right] \end{align}\] \[\begin{align} X = \left[ \begin{matrix} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_N \\ \end{matrix} \right] \end{align}\] \[\begin{align} \vec{\alpha} = \left[\begin{matrix} \alpha_0 \\ \alpha_1 \\ \end{matrix} \right] \end{align}\]Using the above4 with \(Y=[N\times 1]\), \(\vec{\alpha}=[P\times 1]\) and \(X=[N\times P]\) we can define the system as,

\[\begin{align} Y = X \vec{\alpha}. \end{align}\]And that’s it. \(Y\) is called the regressand, and \(X\) the design matrix. The aim of the game is to just figure out \(\vec{\alpha}\) … but wait, sure I don’t need uncertainties on my final parameter values, but I should include the uncertainties on my data if I have them right?? That doesn’t show up in the above. Well for that I find it easier to actually go back to the probabilities.

If we’re assuming that our data is distributed according to some normal distribution like the following (assuming homoscedacity for simplicity),

\[\begin{align} y_i \sim \mathcal{N}(\alpha_1 x_i + \alpha_0, \sigma^2), \end{align}\]then our system becomes,

\[\begin{align} Y = X \vec{\alpha} + \vec{\epsilon}. \end{align}\]Where \(\vec{\epsilon}\sim \mathcal{N}(0, \sigma^2)\). Splitting it up this way is pretty close to the reparameterisation trick where all the stochasticity has movied to \(\vec{\epsilon}\) now and \(X \vec{\alpha}\) is deterministic. But that’s just a fun little aside.

As people who are aware of full probability theory, or want to go about this a lil rigorously, we would then want to maximise the probability of our parameters,

\[\begin{align} \DeclareMathOperator*{\argmax}{arg\,max \;} \argmax_\vec{\alpha} p(\vec{\alpha} \vert Y, X, \vec{\epsilon}) \propto \argmax_\vec{\alpha} {\color{red} p(Y, X, \vec{\epsilon} \vert \vec{\alpha})}{\color{blue} p(\vec{\alpha})}. \end{align}\]i.e. We want to find the maximum a posteriori (MAP) estimate. As part of the bias-variance tradeoff, the more parameters we include the higher our accuracy (less bias) but we get less individual information on the parameters (higher variance). Additionally, we run the risk of overfitting.

The way that this is typically done is via regularisation on the parameters fitted, giving them a tendency to go towards 0, such that they only meaningfully contribute in the case where they really improve the fit. We can encode this as a prior on our parameters, that they are distributed according to a normal distribution with some variance \(\tau_j = \tau\). (further assuming that we just put the same regularisation on all of them).

\[\begin{align} \alpha_j \sim &\mathcal{N}(0, \tau_j^2)\\ &\textrm{or} \\ \vec{\alpha} \sim &\tau \mathcal{N}(0, \vec{1}) \end{align}\]Where I denote a vector full of ones that is the relevant length \(\vec{1}\), and when I need it the identity matrix as \(I\). We can thus write down our likelihood and prior, a value proportional to our posterior.

\[\begin{align} \DeclareMathOperator*{\argmax}{arg\,max \;} &\argmax_\vec{\alpha} p(\vec{\alpha} \vert Y, X, \vec{\epsilon}) \\ &\propto \argmax_\vec{\alpha} {\color{red} \prod_i \frac{1}{\left(2\pi\right)^{1/2}} \det\left(C_Y \right)^{-1/2} \exp\left(-\frac{1}{2} (Y_i - X_i \vec{\alpha})^T C_X^{-1}(Y_i - X_i \vec{\alpha} ) \right)} \\ &\;\;\;\;\; \times {\color{blue} \frac{1}{\left(2\pi\right)^{k/2}} \det\left(C_\alpha \right)^{-1/2} \exp\left(-\frac{1}{2} \vec{\alpha}^T C_\alpha^{-1}\vec{\alpha} \right)}. \end{align}\]Where \(k\) is the length/dimensionality of \(\vec{\alpha}\), \(C_Y\) is the covariance matrix for our data \(Y\), which is just \(\sigma^2\) and \(C_\alpha\) is the covariance matrix for our parameters in our prior which is a diagonal matrix of size \(k\) which \(\tau^2\) on the diagonal. Simplifying the above,

\[\begin{align} \DeclareMathOperator*{\argmax}{arg\,max \;} &\argmax_\vec{\alpha} p(\vec{\alpha} \vert Y, X, \vec{\epsilon}) \\ &\propto \argmax_\vec{\alpha} {\color{red} \exp\left(-\frac{1}{2\sigma^2} (Y - X\vec{\alpha})^2 \right)}{\color{blue} \exp\left(-\frac{1}{2\tau^2} \vec{\alpha}^2 \right)}. \end{align}\]Now because of numerical instability issues and many other reasons, we then take the log of this expression and reverse the sign.

\[\begin{align} \DeclareMathOperator*{\argmin}{arg\,min \;} &\argmin_\vec{\alpha} - \log p(\vec{\alpha} \vert Y, X, \vec{\epsilon}) \\ &= \argmin_\vec{\alpha} {\color{red} \frac{1}{2\sigma^2} (Y - X \vec{\alpha})^2} {\color{blue} + \frac{1}{2\tau^2} \vec{\alpha}^2} + C. \end{align}\]Where \(C \in \mathbb{R}\) is some arbitrary constant relating to the normalisation. If we’re just wishing to minimise this expresion with respect to \(\vec{\alpha}\) we can drop all the terms that don’t involve it and multiply everything by the constant \(2\sigma^2\) setting \(\sigma^2/\tau^2 = \lambda\) to make the math nicer.

\[\begin{align} L(\vec{\alpha}) = (Y - X \vec{\alpha})^2 + \lambda \vec{\alpha}^2 \end{align}\]Which is just ordinary least squares with a \(L_2\) regularisation on the parameters5, or ridge regression. Note that the uncertainties only come through in how they influence the regularisation, which is because we assumed that they are shared the same noise variance.

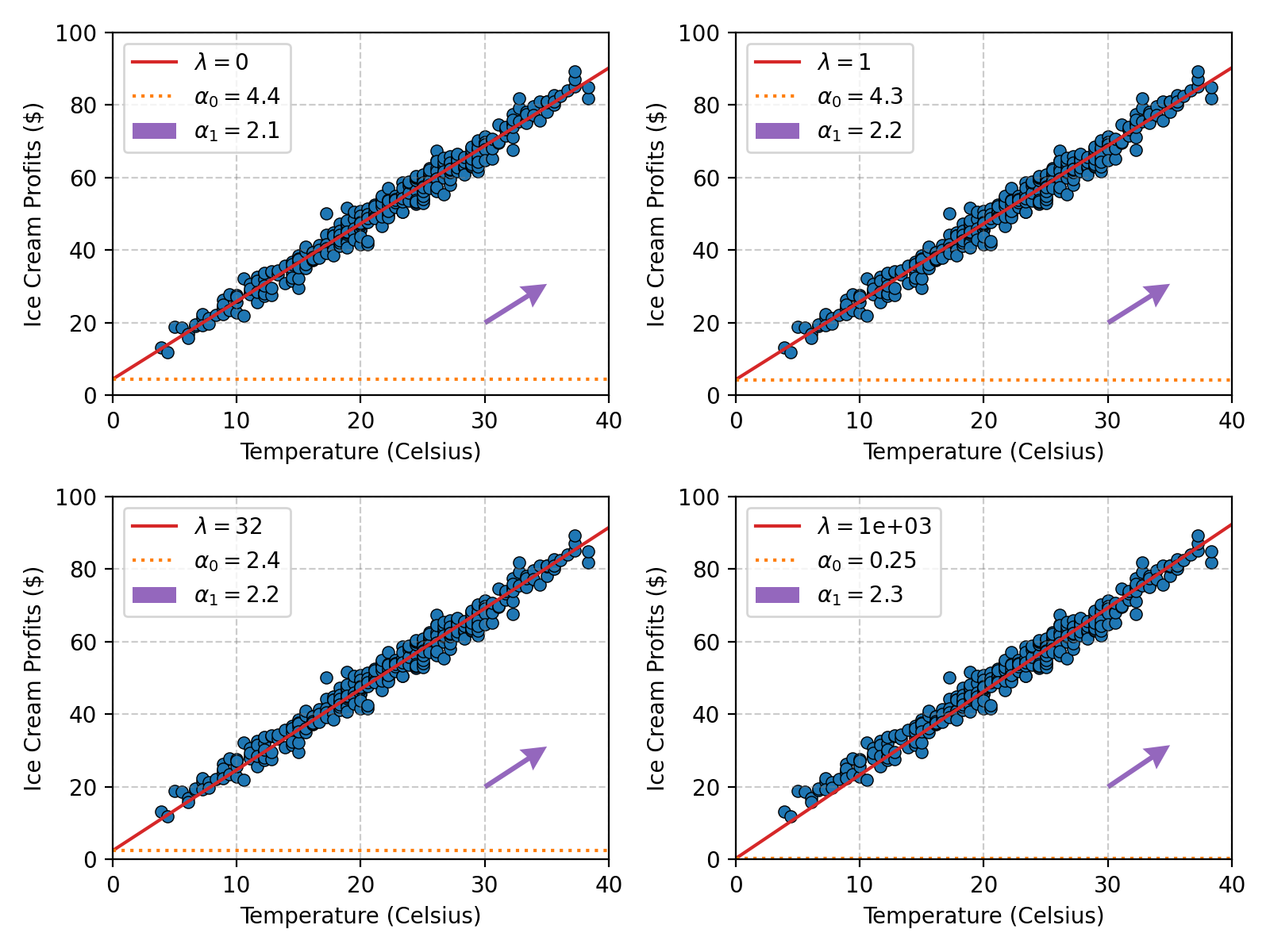

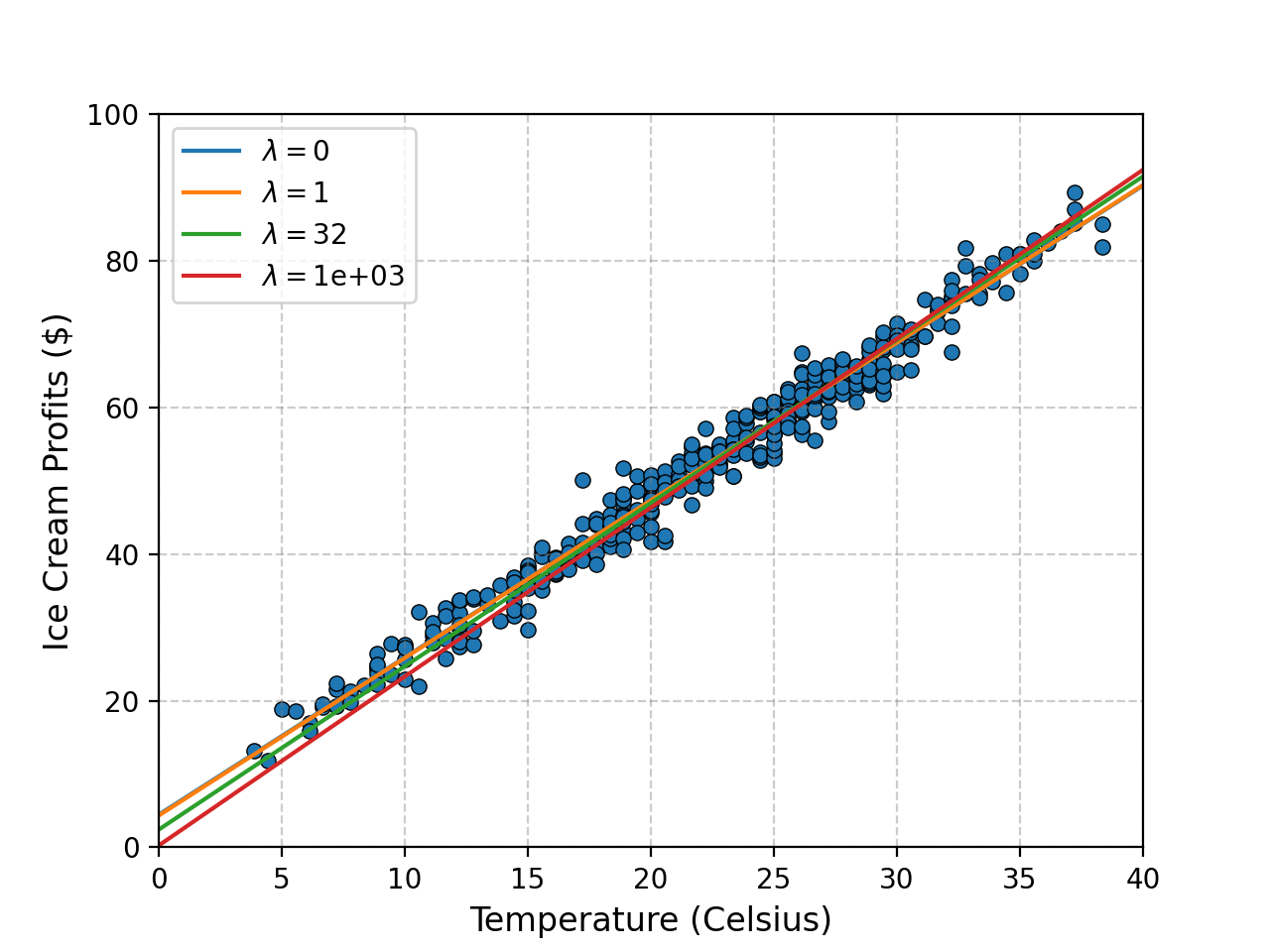

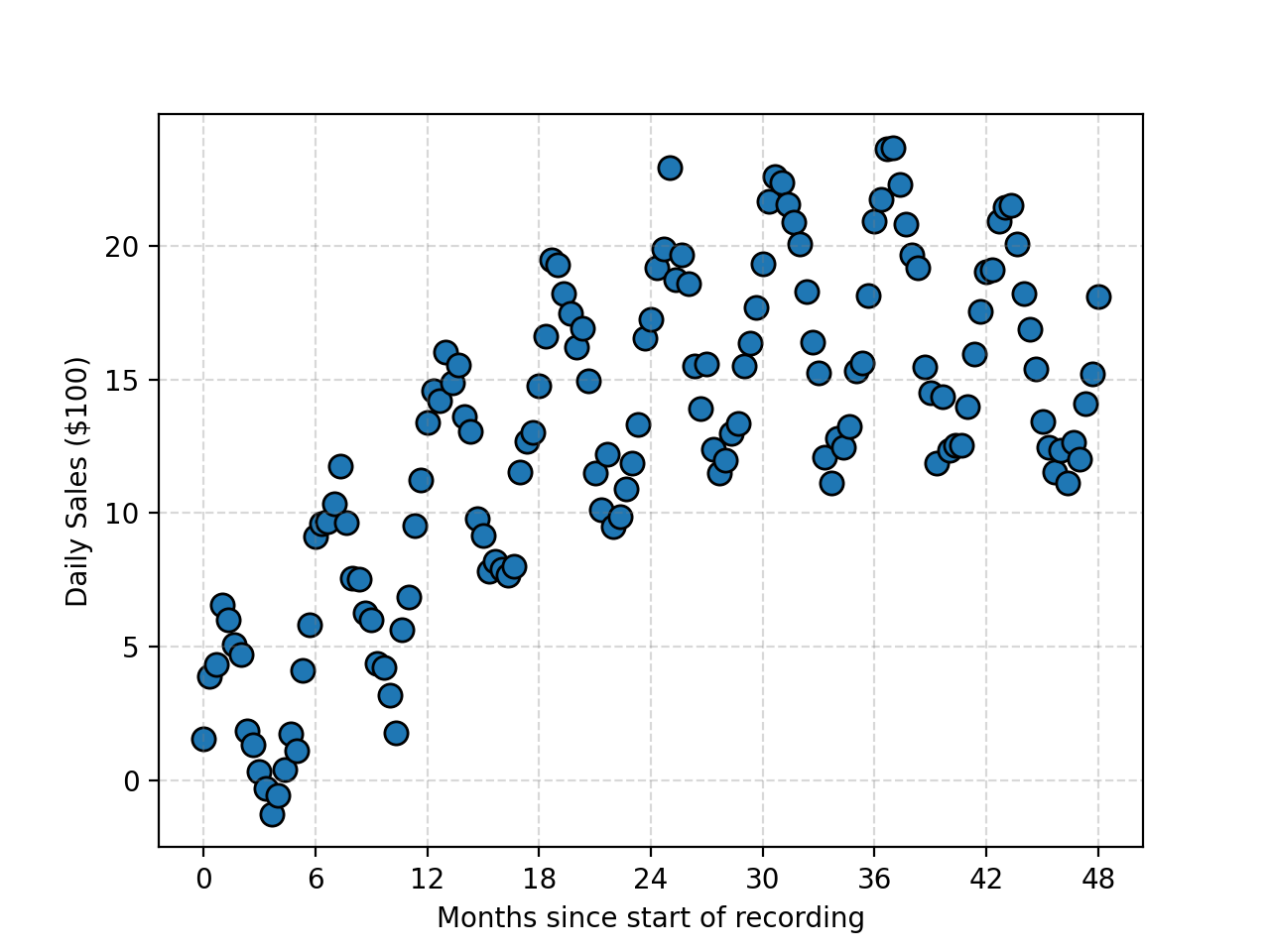

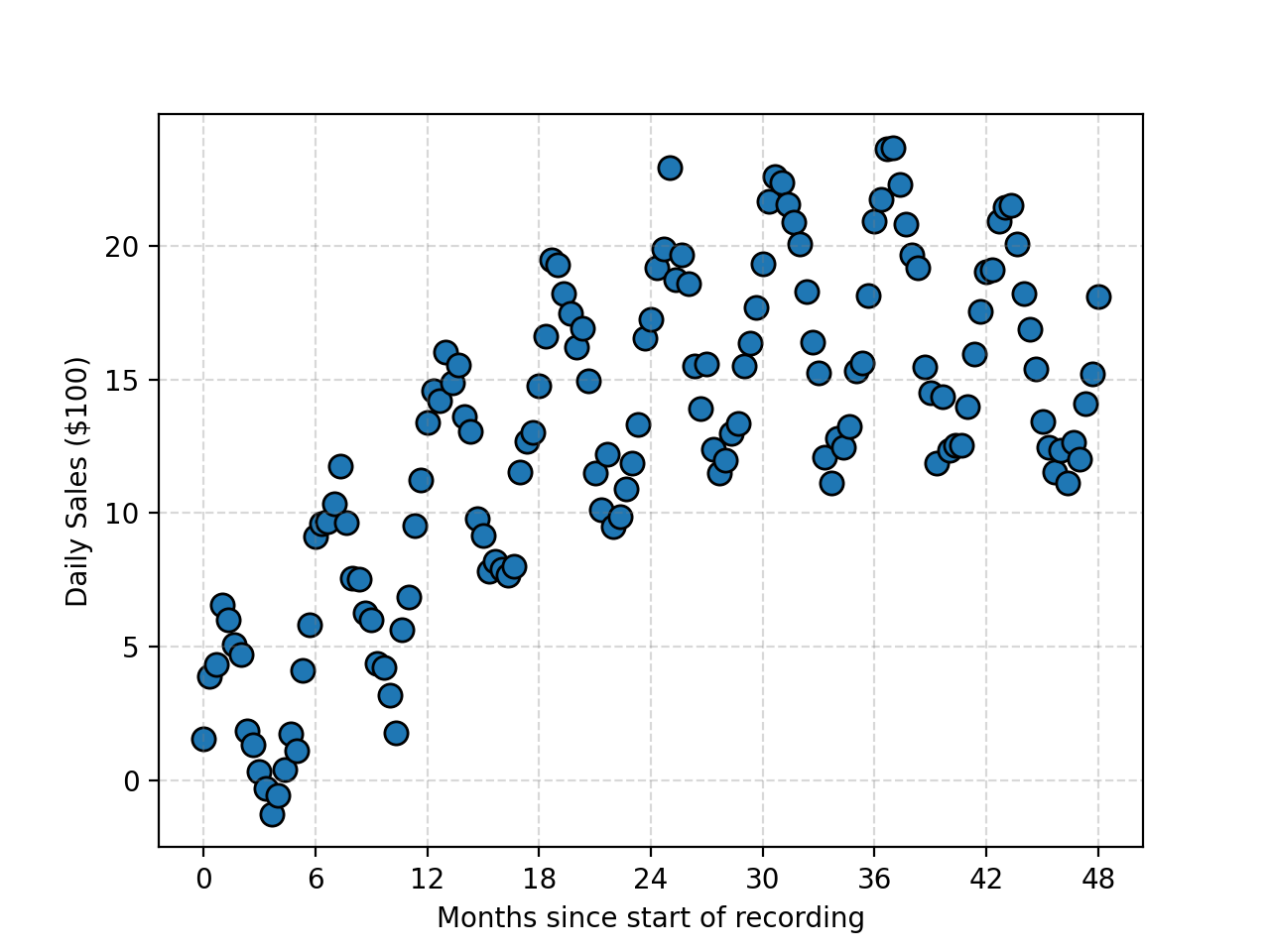

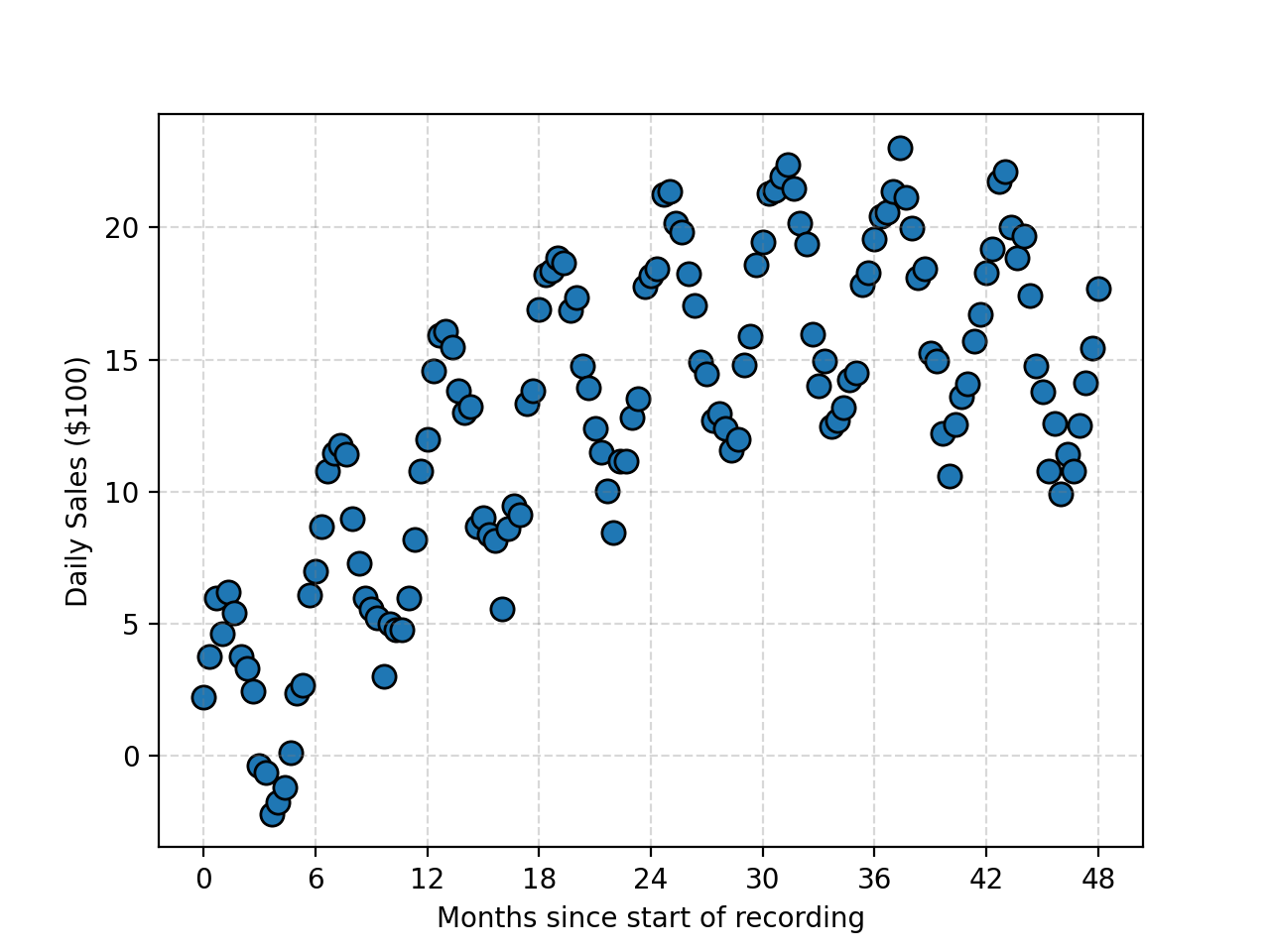

What’s the point of all this if we aren’t going to use it though? Let’s look at the relationship between the Temperature and Ice Cream Sales Kaggle dataset.

We can then see what the Ridge regression fit gives for different values of \(\lambda\) remembering that \(\lambda=0\) means no regularisation.

Here’s the code.

from scipy.optimize import minimize

linearY = data['Ice Cream Profits']

linearX = np.array([data['Temperature']*0 + 1., data['Temperature']]).T

def ridge_regression_cost(alpha, Y, X, lambdaval):

return np.linalg.norm(Y - X @ alpha)**2 + lambdaval * np.linalg.norm(alpha)**2

ridge_regression_cost(np.array([0., 2.]), linearY, linearX, 0.1)

result_dict = {}

for lambdaval in np.append(np.array([0.]), np.logspace(0, 3., 3)):

linear_data_ridge_regression_result = minimize(

ridge_regression_cost,

x0 = np.array([0., 2.]),

args = (linearY, linearX, lambdaval)

)

result_dict[lambdaval] = linear_data_ridge_regression_result.x

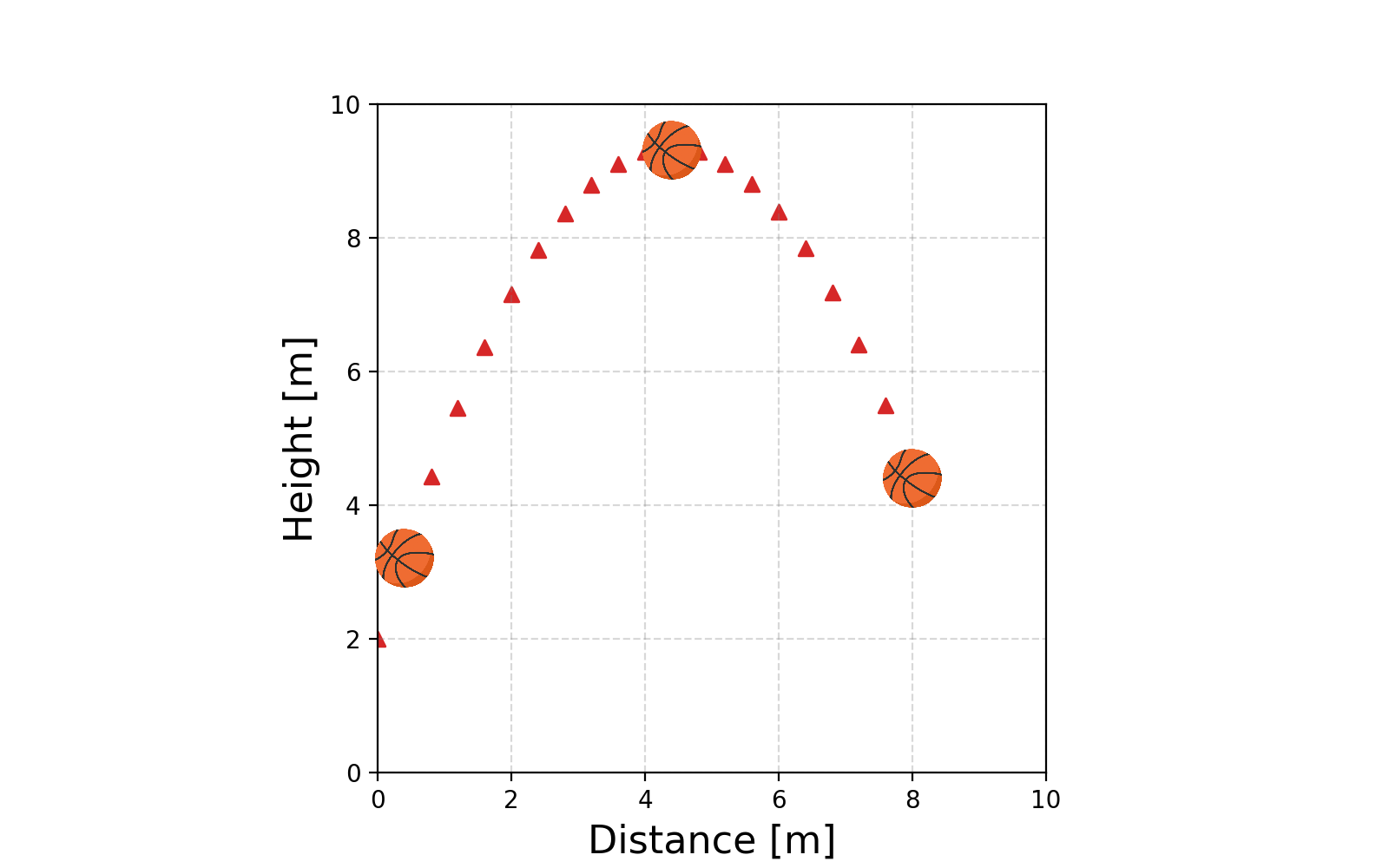

Alrighty, we have this very nice very automated way to perform our fitting. Let’s look at another example of the path of a basketball.

We know beforehand that the path will be parabolic and hence can’t be modelled linearly (or at least properly). And now we can’t use our very nice method any more 😢 … or can we?

We employ the exact same trick as in the previous two sections, particularly on \(x\). We pretend that we have a new variable, that is really just \(x^2\), and then fit a straight line in that space (everything is just dot or matrix products), which will be equivalent to fitting a polynomial, but to the framework, it’s still just fitting a straight line! So now our data looks like.

\[\begin{align} Y = \left[\begin{matrix} y_1 \\ y_2 \\ \vdots \\ y_N \end{matrix} \right] \end{align}\] \[\begin{align} X = \left[ \begin{matrix} 1 & x_1 & x_1^2\\ 1 & x_2 & x_2^2\\ \vdots & \vdots \\ 1 & x_N & x_N^2\\ \end{matrix} \right] \end{align}\] \[\begin{align} \vec{\alpha} = \left[\begin{matrix} \alpha_0 \\ \alpha_1 \\ \alpha_2 \\ \end{matrix} \right] \end{align}\]Let’s look at the data in the project space.

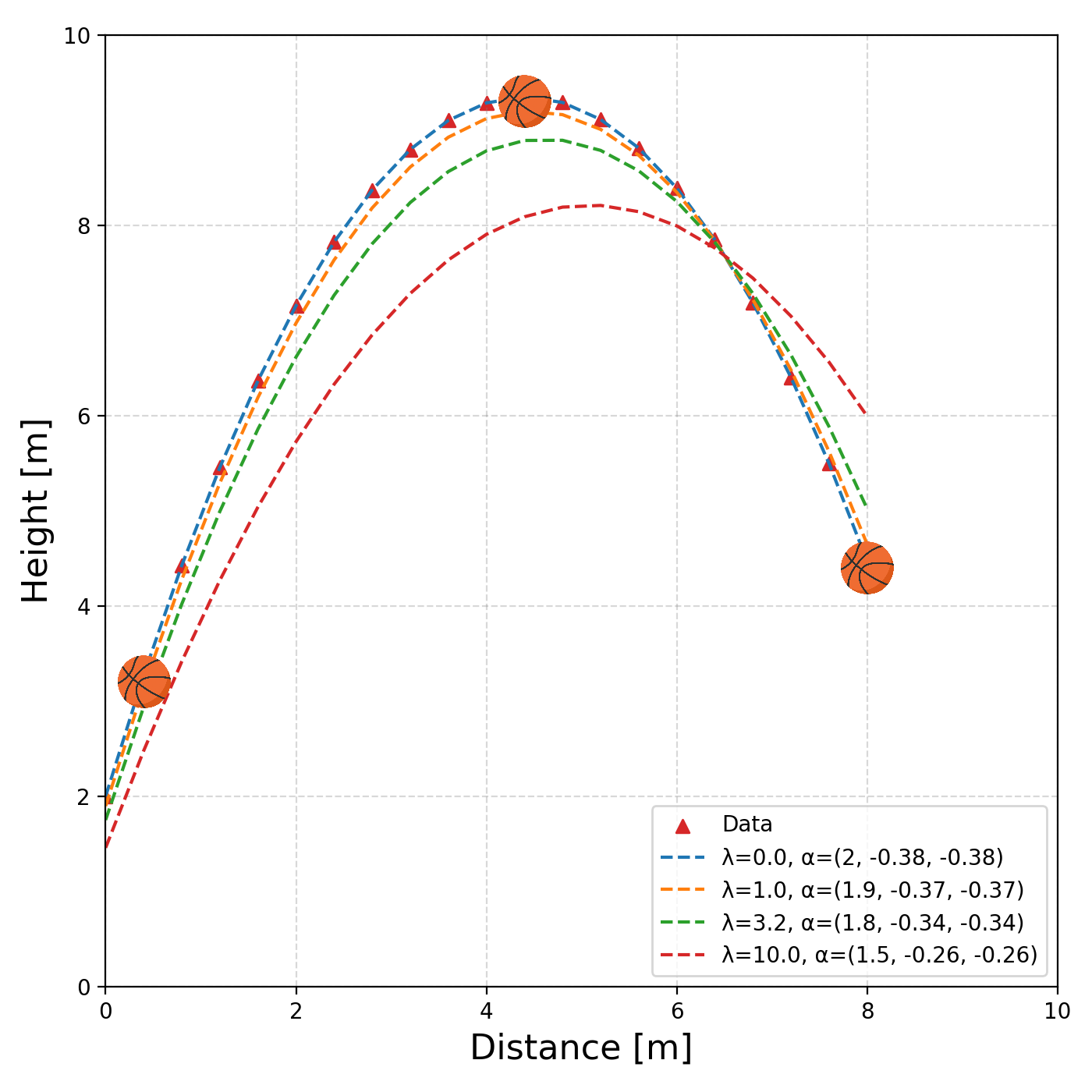

Wait, that still doesn’t look linear? What’s going on? Well that’s because the problem is linear with respect to \(\vec{\alpha}\) not \(X\) still. The linear object we are fitting is closer to plane within which the curve we want is contained, and more specifically the region with the constraint that \(X_1 = X_2\). Let’s look at some results of the fitting (same code).

We can also look at what these look like in the original space.

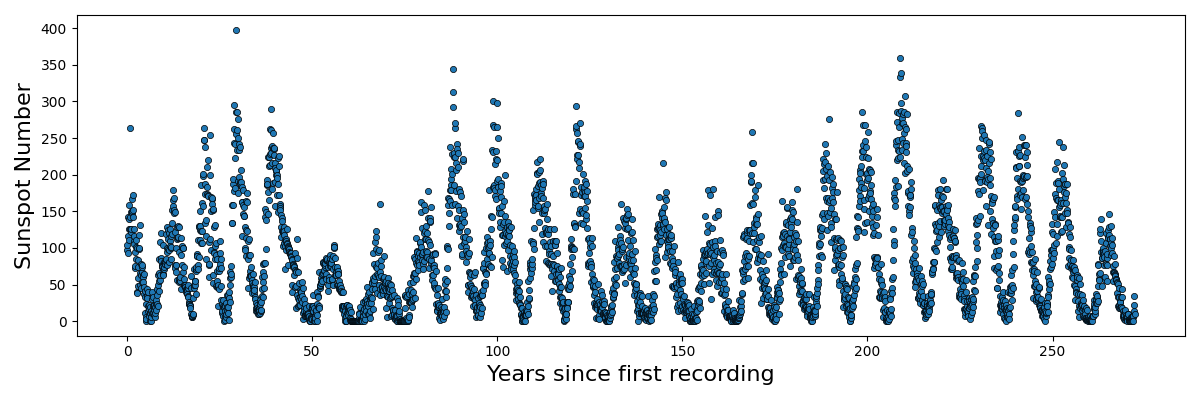

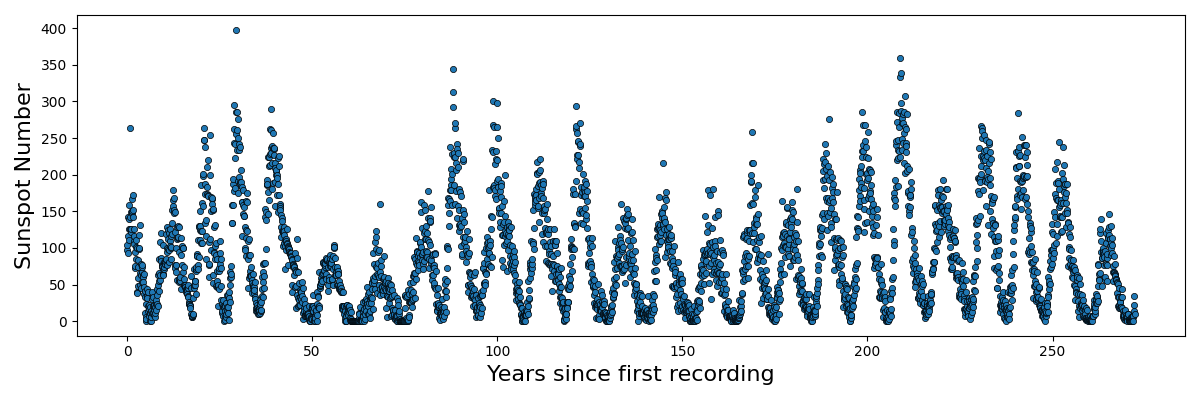

We can see that as the regularisation tightens, or we increase \(\lambda\), the constant term is particularly being penalised. That’s great, but again, we were helped out by knowing what form of equation to expect. Additionally, what if something was periodic like this sunspot data?

Do we just give up or…?

Principle Component Analysis (Kernel PCA I)

Resources

- Principal component analysis - Wikipedia

- Kernel Principal component analysis - Wikipedia

- Principal Component Analysis (PCA) | Dimensionality Reduction Techniques (2/5) - DeepFindr

- 19. Principal Component Analysis - MIT OCW

- Principle component regression - Wikipedia

- Principle component regression - Wikipedia

- Higher Order Reduced Rank Regression - ArXiv: 2503.06528

The Gist

To me Principle Component Analysis (PCA) is very similar to FLDA where we want to find a set of vectors that we can project the data on to best distinguish the data. PCA of course does it entirely differently (more robustly imo) but finding the direction(s) that maximise the variance in the given direction. The general process is as follows.

You have a set of n observations \(Z_i = [Z_i^1, Z_i^2, ..., Z_i^d] \in \mathbb{R}^d\) with \(Z_i^j \in \mathbb{R}^1\) with \(\mathbb{Z}^T = [Z_1, Z_2, ..., Z_n]\).

You shift it so that the mean is \(\vec{0}\), \(X_i = Z_i - \bar{Z}\) or \(\mathbb{X} = \mathbb{Z} - \frac{1}{n} \mathbb{Z} \vec{1}_n\) where \(\vec{1}_n\) is a vector of size \(n\) with every element equal to \(1\).

We then calculate the covariance matrix of the transformed data by first noting that the empirical covariance, \(S\), can be calculated as,

\[\begin{align} S = \mathbb{E}[X^2] - \mathbb{E}[X]^2 = \frac{1}{n}\sum_{i=1}^n X_i X_i^T = \frac{1}{n} \mathbb{X}^T\mathbb{X}. \end{align}\]We can then perform Singular Value Decomposition which in this case is an eigendecompoisition as \(S\) is positive semi-definite and thus diagonalizable matrix. We know this as if we perform the operation for any vector in \(\mathbb{R}^d\), then we get the variance in that direction. i.e.

\[\begin{align} v^T S v = \textrm{variance in the direction of v} \geq 0 \end{align}\]Hence, \(\exists P, D\) where \(P\) is orthonormal (\(P^T P = P P^T = I\)) and \(D\) is diagonal. Equivalently, this means we can represent \(P\) and \(D\) as,

\[\begin{align} D = \left[ \begin{matrix} \lambda_1 & 0 & \ldots & 0 \\ 0 & \lambda_2 & \ldots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \ldots & \lambda_d \\ \end{matrix}\right] \end{align}\]where \(\lambda_1 > \lambda_2 > ... >\lambda_d \geq 0\) and

\[\begin{align} P = \left[ \begin{matrix} \vert & \vert & & \vert \\ v_1 & v_2 & \ldots & v_d \\ \vert & \vert & & \vert \\ \end{matrix}\right] \end{align}\]such that \(S = P^T D P\). The \(P\) matrix can be viewed as a projection operator that maps the centralised data into the space of eigenvectors, \(Y_i = P\, X_i\), within which data’s covariance matrix is the diagonal matrix \(D\).

\[\begin{align} S_Y &= \frac{1}{n}\sum_i Y_i \, Y_i^T \\ &= \frac{1}{n}\sum_i P\,X_i \,(P\,X_i)^T \\ &= \frac{1}{n}\sum_i P\,X_i \,X_i^T\,P^T \\ &= P\, \left(\frac{1}{n}\sum_i X_i \, X_i^T \right)\, P^T \\ &= P \, P^T \, D \,P \, P^T \\ &= D \end{align}\]We can also show that the variance in direction along which the variance is maximised, \(\vec{\mu}\) with \(\vert\vert\vec{\mu}\vert\vert = 1\), have a magnitude less than the largest eigenvalue \(\lambda_1\).

\[\begin{align} \vec{\mu}^T S \vec{\mu} &= \vec{\mu}^T \, P \, D \, P^T \, \vec{\mu} \\ &= (P^T \, \vec{\mu})^T \, D \, (P^T \, \vec{\mu}) \\ &= \vec{b}^T \, D \, \vec{b} \\ &= \sum_{j=1}^d \lambda_j b_j^2 \\ &\leq \lambda_1 \sum_{j=1}^d b_j^2 \\ &\leq \lambda_1 || \vec{b}||^2 \\ &\leq \lambda_1 || (P^T \, \vec{\mu})^T (P^T \, \vec{\mu}) || \\ &\leq \lambda_1 || \vec{\mu}^T \, P \, P^T \, \vec{\mu} || \\ &\leq \lambda_1 || \vec{\mu}^T \vec{\mu} || \\ &\leq \lambda_1 \\ \end{align}\]And by the definition of the eigenvector and eigenvalues, but just to go through the math explicitly,

\[\begin{align} \vec{v_1}^T S \vec{v_1} &= \vec{v_1}^T \, P \, D \, P^T \, \vec{v_1} \\ &= (P^T \, \vec{v_1})^T \, D \, (P^T \, \vec{v_1}) \\ &= \left(\left[ \begin{matrix} - & \vec{v_1} & & - \\ - & \vec{v_2} & & - \\ - \\ - & \vec{v_d} & & - \\ \end{matrix}\right] \vec{v_1} \right)^T \, D \, \left[ \begin{matrix} - & \vec{v_1} & & - \\ - & \vec{v_2} & & - \\ & - & \\ - & \vec{v_d} & & - \\ \end{matrix}\right] \vec{v_1} \\ &= \left[ \begin{matrix} 1 & 0 & \ldots & 0 \end{matrix}\right] \, D \, \left[ \begin{matrix} 1 \\ 0 \\ \vdots \\ 0 \\ \end{matrix}\right] \\ &= \lambda_1 \\ \end{align}\]Hence the eigenvectors are the ones that maximise the variance. So we skipped any optimisation (which wouldn’t be easy) by some simple linear algebra with the projection of new points just involving some dot products with the orthonormal bases. Assuming we have some quick method to calculate the eigenvectors and eigenvalues our mission is done!

For our data, the covariance matrix is as follows.

\[\begin{align} \begin{bmatrix} 12.687 & 17.438 & -9.877 \\ 17.438 & 38.263 & -18.308 \\ -9.877 & -18.308 & 13.074 \\ \end{bmatrix} \end{align}\]Then just blindly using the np.linalg.eig function we find the eigenvalues to be,

and eigenvectors to be

\[\begin{align} \begin{bmatrix} -0.412 & -0.691 & 0.594 \\ -0.804 & 0.582 & 0.119 \\ 0.428 & 0.429 & 0.795 \\ \end{bmatrix}. \end{align}\]Let’s chuck this in an interactive plot to see how the eigenvectors look against the data.

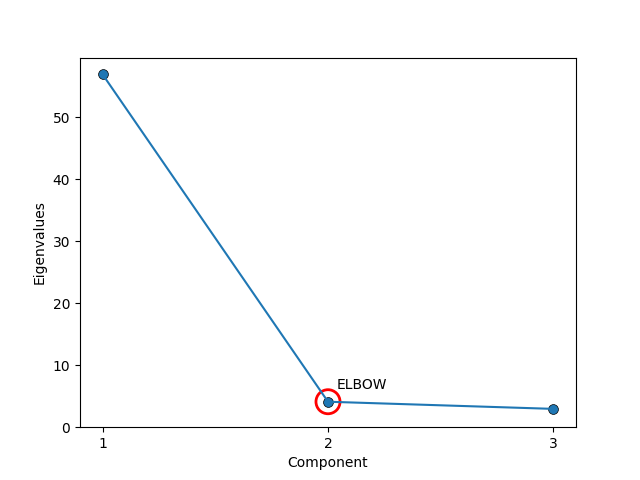

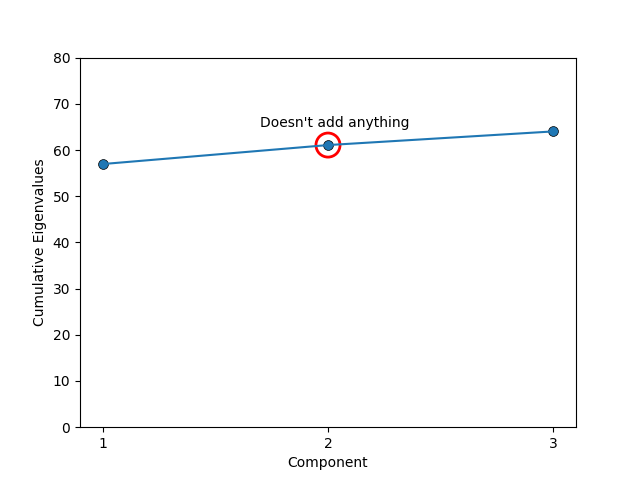

The process now gives us a whole set of vectors that we can use to reconstruct the data, but part of the point of this is to find the directions in the space of our data along which most of the information lies. So, we don’t actually want to keep all the vectors, but how many do we decide to keep? Typically, people just keep 1 to 3 (easy to visualise, and should explain most of the variation) but if one want to do this a little more rigorously we can use something called a Scree plot. Apparently named after its resemblance to the natural formation of broken rocks at the bottom of a cliff (image below).

All that it is though is that you plot either the eigenvalues in order (automatically descending) where you look for an ‘elbow’ where the magnitude of the components drop off or their cumulative values \(\lambda_1\), \(\lambda_1 + \lambda_2\), \(\lambda_1 + \lambda_2 + \lambda_3\), etc where instead you look for when the cumulation of the eigenvalues doesn’t significantly increase (basically the same thing).

But let’s say that the data has some periodic component? e.g. In the below (fake) data, if we blindly apply PCA the amplitude of the periodicity would be included in the variance. Despite the data haing relatively low noise compared to the baseline sinusoidal signal with quadratic-like trend. At this point I’ll stop messing you around and just tell you the trick. (Unless you’re one of the unfortunate few to look at this before it’s finished.)

The Kernel Trick

Resources

- The Kernel Trick - Udacity

- RBF kernel as an infinite feature expansion - Andy Jones

- Lecture 15 of 18 of Caltech’s Machine Learning Course - CS 156 - Professor Yaser Abu-Mostafa

- Matthew N. Bernstein’s notes - The Radial Basis Function Kernel

- Support Vector Machines Part 3: The Radial (RBF) Kernel (Part 3 of 3) -StatQuest with Josh Starmer

The Gist

The idea that I keep coming back to in this post is that all of these methods on rely on just a series of dot products. I additionally showed that they all have (except PCA) much more generality and expressive power when we project our data into a higher dimensional space and use our linear methods there. But the grub was that even for low dimensional data, e.g. 4 for x, y, z and time, and just a degree 5 polynomial would have 59 columns in the design matrix (including constant). We then often perform an outer product so then we have to do \(59^2 = 3481\) calculations …. instead of 16 …. real expensive.

For example let’s say we have some 2D data,

\[\begin{align} X^i = [x^i, y^i, z^i], \end{align}\]that I then project into the higher dimensional ‘quadratic’ feature space (with some slight scalar adjustments compared to previous sections that wouldn’t make a difference to the end result),

\[\begin{align} X'^i = [1, {\color{red} \sqrt{2} x^i}, {\color{blue}\sqrt{2} y^i }, {\color{green}\sqrt{2} z^i}, {\color{purple} (x^i)^2}, {\color{orange} \sqrt{2} (x^i) \, (y^i)}, {\color{magenta} (y^i)^2 }, {\color{teal} \sqrt{2} (z^i)\, (y^i) }, {\color{violet} (z^i)^2 }]. \end{align}\]We’ve also established that all of these methods simply compute the dot products between the vectors in this transformed space.

\[\begin{align} (X'^i)^T (X'^j) = 1 &+ {\color{red} 2 x^i \,x^j} + {\color{blue} 2 y^i \, y^j} + {\color{green} 2 z^i\, z^j }\\ &+ {\color{purple} (x^i)^2 \, (x^j)^2 } +{\color{orange} 2 (x^i) \, (y^i) \, (x^j) \, (y^j) } \\ &+ {\color{magenta} (y^i)^2 \, (y^j)^2 } + {\color{teal} 2 (z^i)\, (y^i) \, (z^j)\, (y^j) } + {\color{violet} (z^i)^2 \, (z^j)^2} \end{align}\]Then the magical thing is that this is equivalent to,

\[\begin{align} (X'^i)^T (X'^j) = (1 + (X^i)^T (X^j))^2 = K^{\textrm{poly}}_2(X^i, X^j), \end{align}\]where \(K^{\textrm{poly}}_2(X^i, X^j)\) is referred to as a kernel function, more specifically a degree 2 polynomial kernel. Similarly we can look at two dimensional data,

\[\begin{align} X^i = [x^i, y^i], \end{align}\]and transform it into the cubic space,

\[\begin{align} X'^i = [1, {\color{red} \sqrt{3} x^i}, {\color{blue}\sqrt{3} y^i }, {\color{purple} \sqrt{3} (x^i)^2}, {\color{orange} \sqrt{6} (x^i) \, (y^i)}, {\color{magenta}\sqrt{3} (y^i)^2 }, {\color{teal} (x^i)^3 }, {\color{brown} \sqrt{3} (x^i)^2 (y^i) },{\color{Tan} \sqrt{3} (x^i) (y^i)^2}, {\color{violet} (y^i)^3 }], \end{align}\]the dot product between any two points in the this feature space would be,

\[\begin{align} (X'^i)^T(X'^j) &= 1 + {\color{red} 3 x^i \, x^j} + {\color{blue} 3 y^i \, y^j } + {\color{purple}3 (x^i)^2 \, (x^j)^2} \\ &\;\;\;\;\;\;\;\, + {\color{orange} 6 (x^i y^i) \, (x^j y^j)} \;\; + {\color{magenta} 3 (y^i)^2 \, (y^j)^2 } + {\color{teal} (x^i)^3 \, (x^j)^3 } \\ &\;\;\;\;\;\;\;\, + {\color{brown} 3 ((x^i)^2 (y^i) )\, ((x^j)^2 (y^j) )} \;\;\;\;\;\;\;\;\;\, + {\color{Tan} 3 ((x^i) (y^i)^2) \, ((x^j) (y^j)^2) } \\ &\;\;\;\;\;\;\;\, + {\color{violet} (y^i)^3 (y^j)^3 } \\ &= (1 + (X^i)^T (X^j))^3 \\ &= K^{\textrm{poly}}_3(X^i, X^j) \\ \end{align}\]For the second last line I’m just going to have to ask you to trust me if it doesn’t immediately seem right…

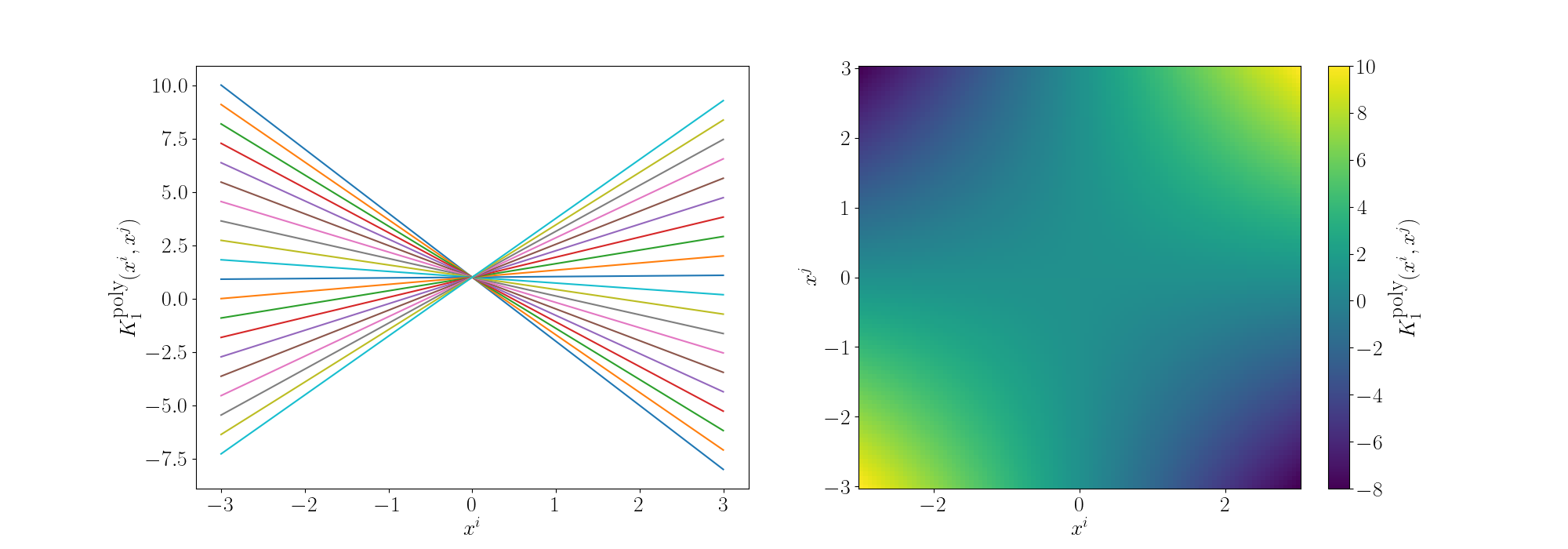

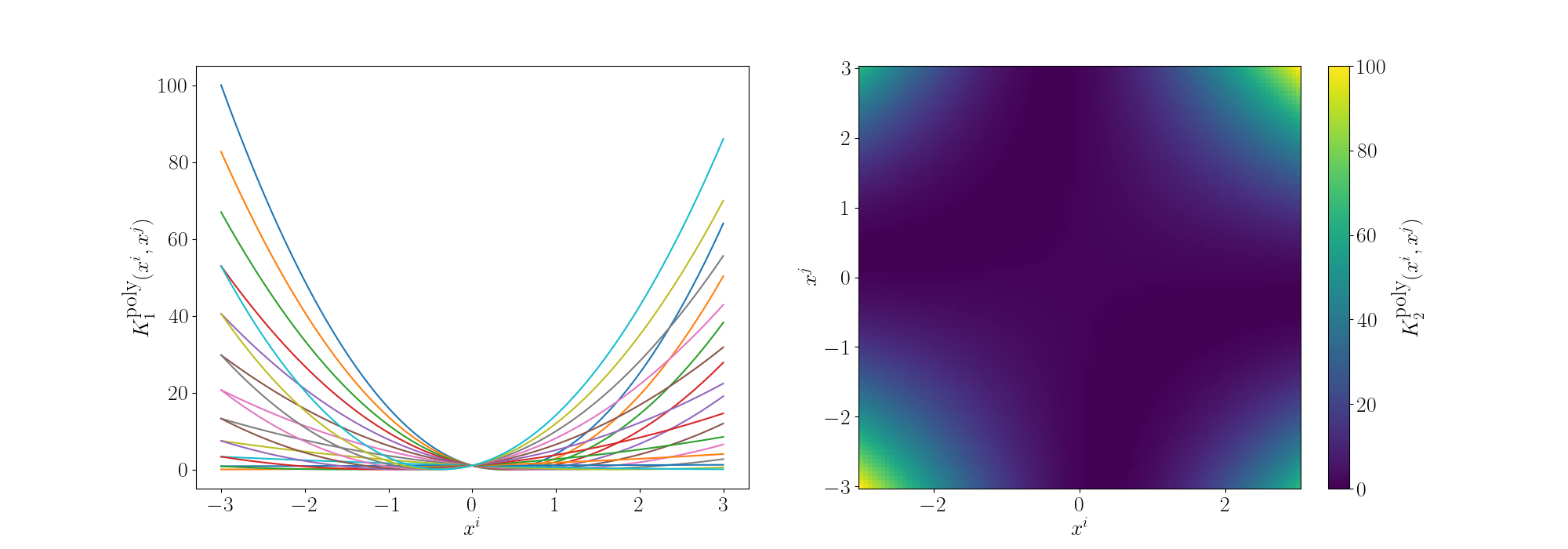

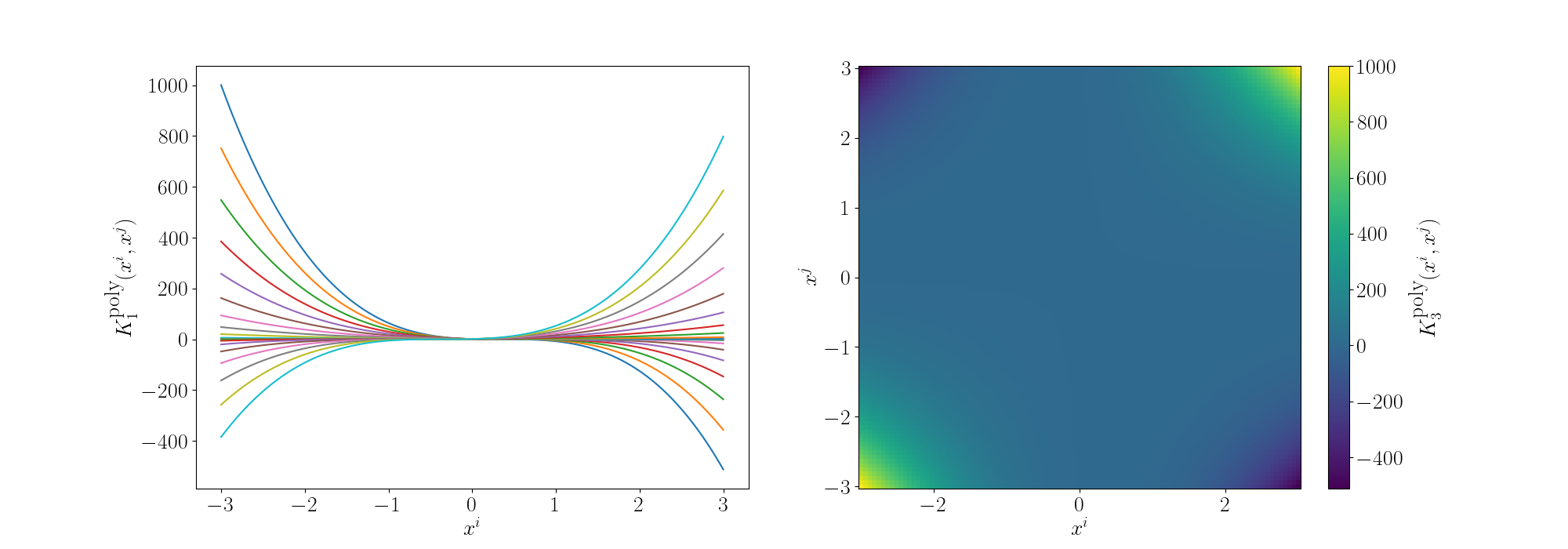

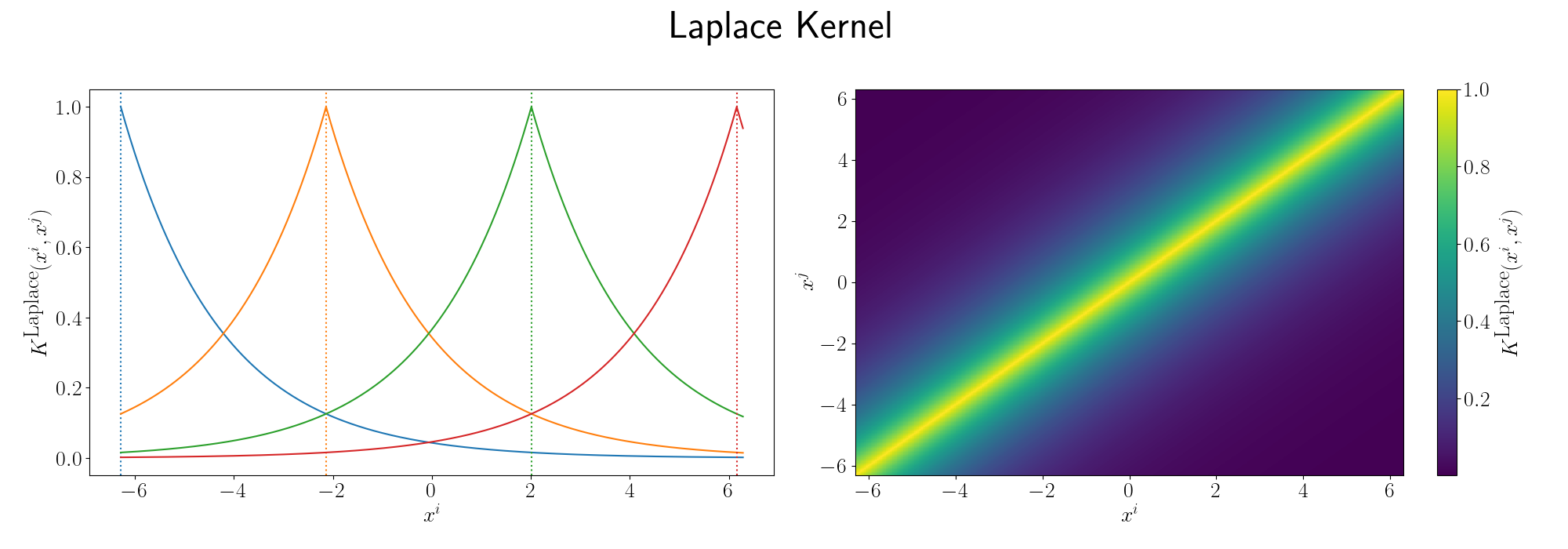

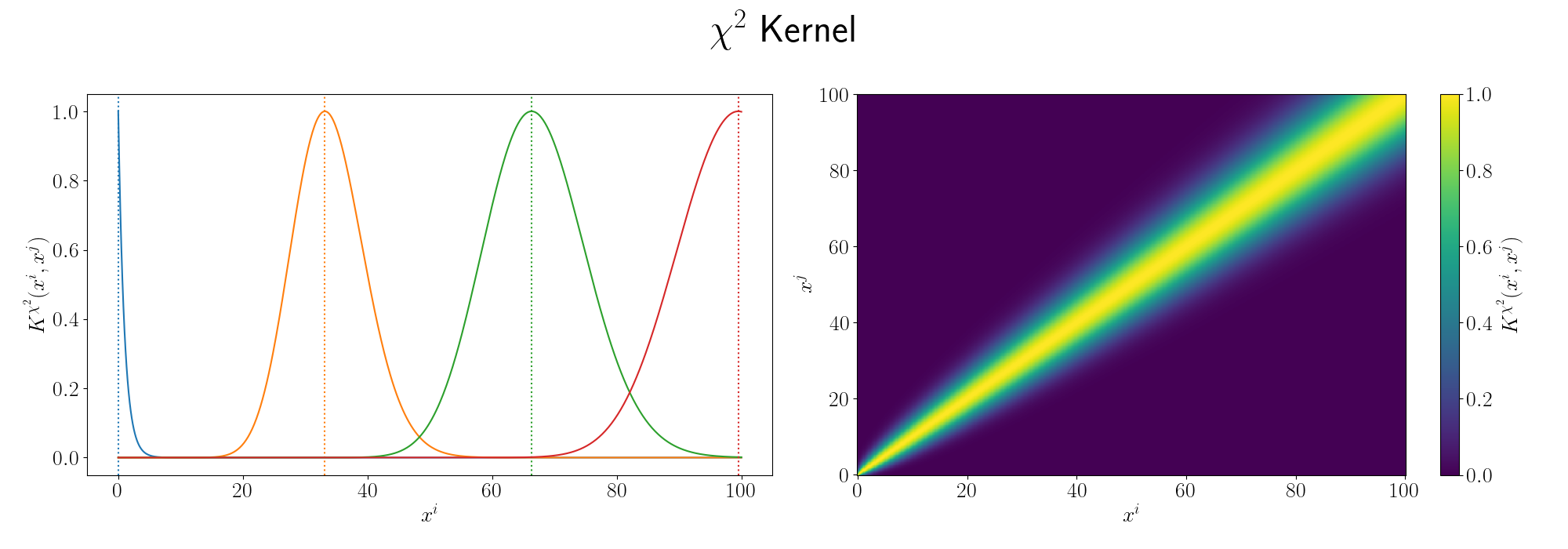

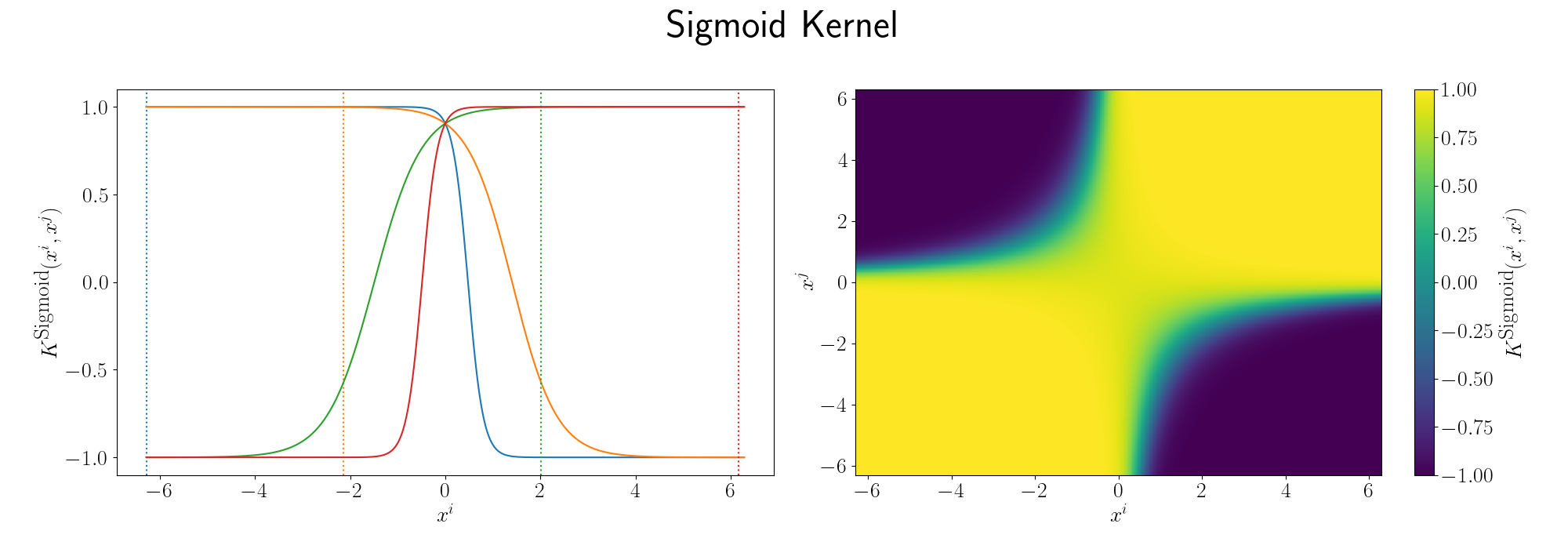

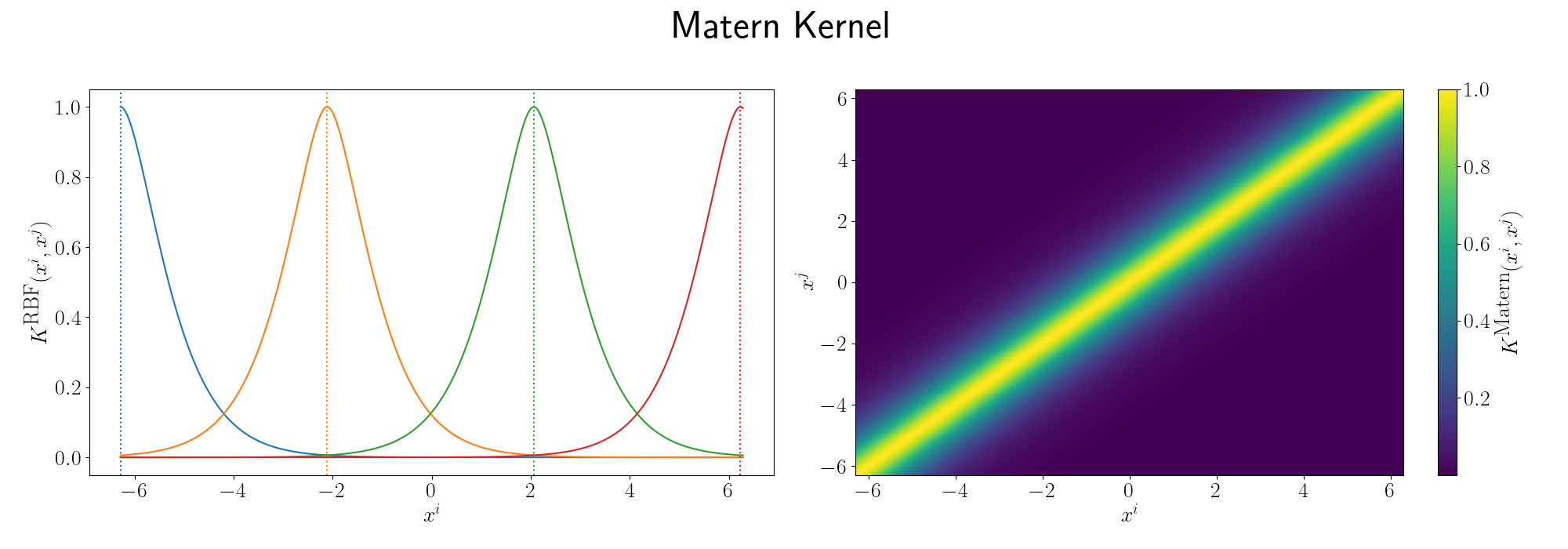

So the key point is that we can effectively do the calculations in the higher dimensional space without actually having to explicitly first transform our data into that space, as long as our kernel function is a valid inner product. What we can do in practice is store the values of the inner products between our data in what’s called a Kernel or Gram matrix. For now, we can just think of how the kernel’s behave in the context of the variables we give them. So we’ll plot the examples of the matrix for some 1D variable and just examine how the kernel behaves as a function of space.

For the degree 1 polynomial (directly below) we can see that the relationship between \(x^i\) and \(x^j\) is linear, as expected as we’re using a linear kernel. For a given \(x^j\) the value decreases as you move to the left and increases as you move to the right.

For the degree 2 polynomial (directly below) we can see that the relationship between \(x^i\) and \(x^j\) is quadratic. For a single value of \(x^j\) you can see that multiple values of \(x^i\) give the same inner product value. Meaning that if \(x^j\) was equal to 1 for example, \(x^i=1\) and \(x^i=-1\) would be equivalent from the eyes of the inner product.

And for the degree 3 polynomial (directly below) we can see that the relationship between \(x^i\) and \(x^j\) is cubic. And we can see more nonlinear behaviour pop up but the functions are monotonic so multiple values don’t give the same answer (for 1D, isn’t true for higher).

Not only can we implicitly transform our data into some finite dimensional polynomial space, we can also use kernels where the number of terms would be technically infinite.

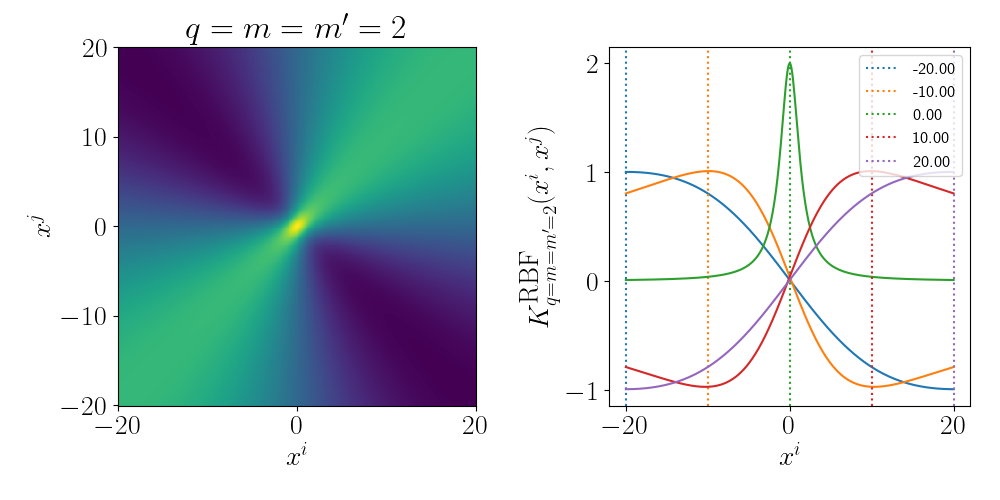

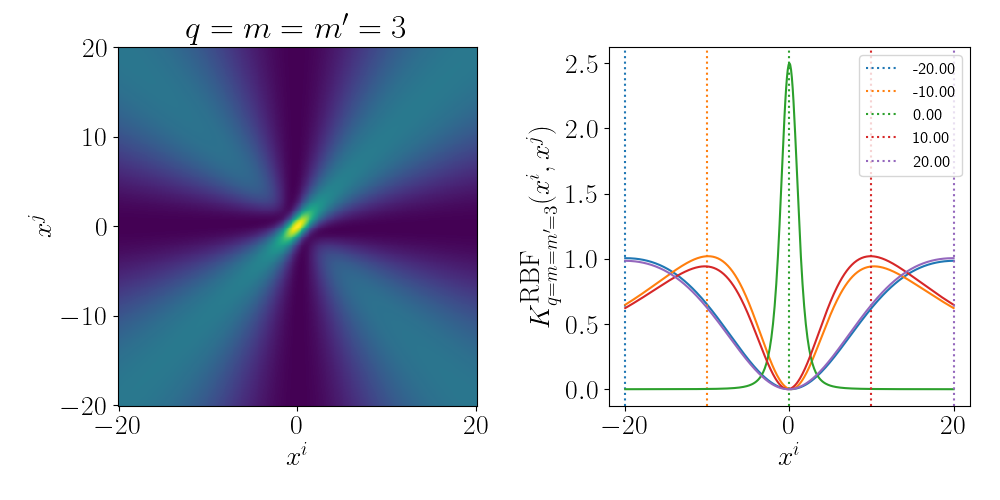

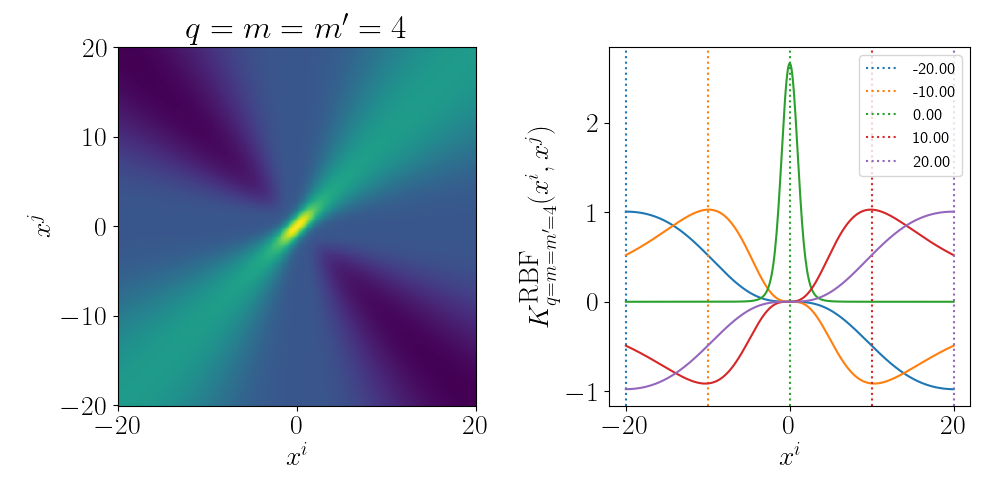

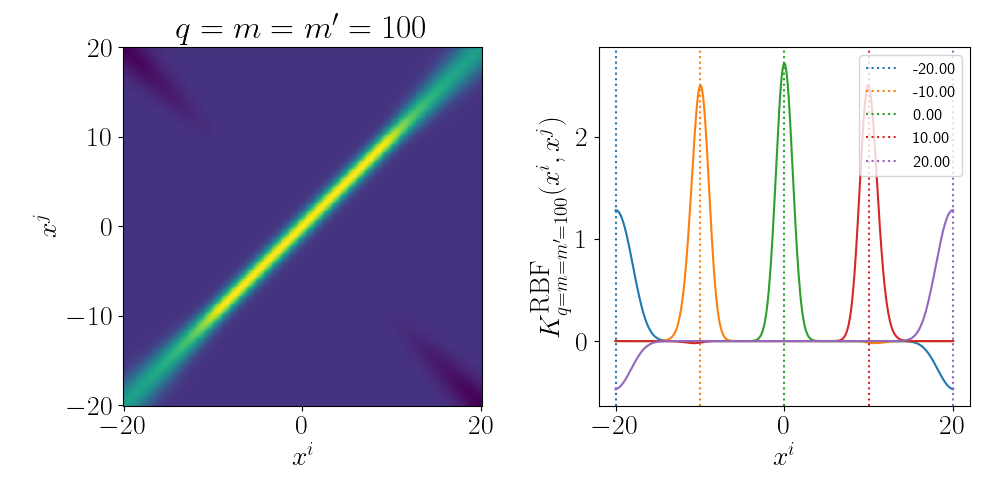

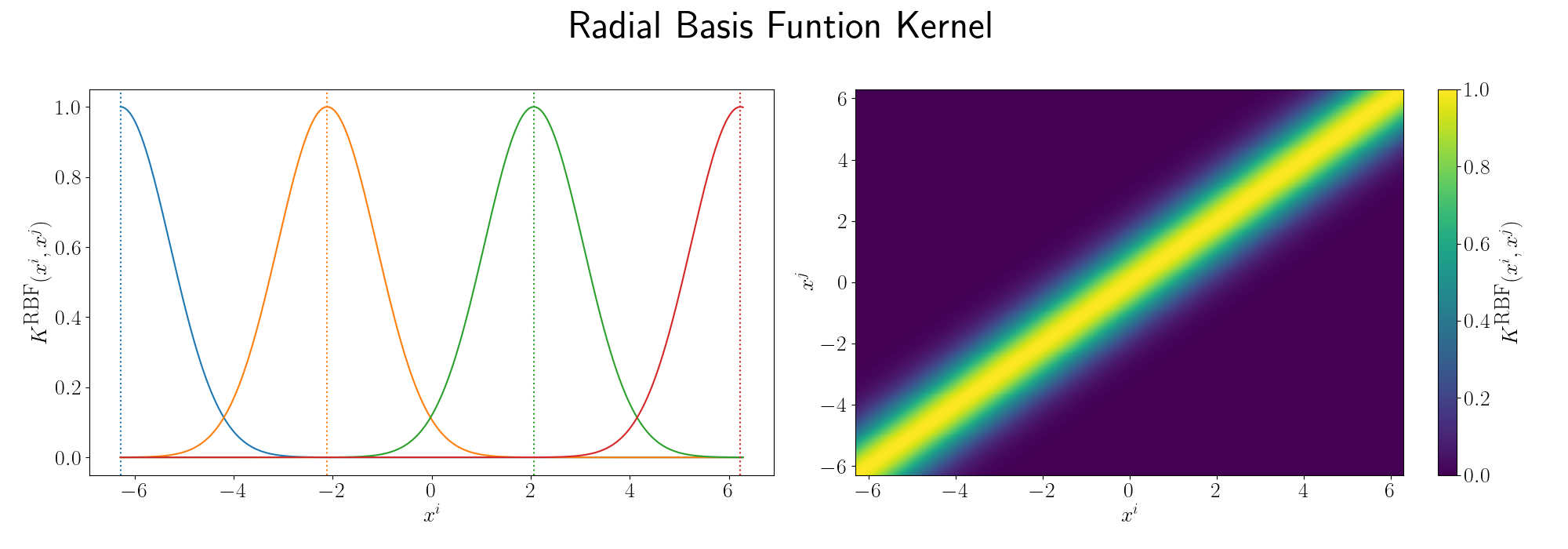

e.g. the most commonly used kernel across disciplines is the Radial basis function kernel or simply the RBF kernel, defined as,

\[\begin{align} K(X_i, X_j) = \exp\left(- \frac{\vert\vert X_i - X_j\vert\vert^2}{2\sigma^2} \right), \end{align}\]seeing as it has an exponential in it you can see that if we made the equivalent expansion as above that the series would be infinite. Setting the length scale \(\sigma\) to \(1\).

\[\begin{align} K(X_i, X_j) &= \exp\left(- \frac{\vert\vert X_i - X_j\vert\vert^2}{2} \right) \\ &= \exp\left[-\frac{1}{2} \left( (X^i)^2 + (X^j)^2\right)\right] \sum_{n=0}^\infty \frac{K^{poly}_n(X^i, X^j)}{n!} \\ &= \sum_{n=0}^\infty \frac{K^{poly}_n(X^i, X^j)}{n!} \\ & \;\;\;\;\;\;\; \div \left(\sum_{m=0}^\infty \frac{(\frac{1}{2} (X^i)^T (X^i))^m}{m!}\right)\\ & \;\;\;\;\;\;\; \div \left(\sum_{m'=0}^\infty \frac{(\frac{1}{2} (X^j)^T (X^j))^{m'}}{(m')!}\right) \end{align}\]What the inner products between vectors would imply is pretty much a gaussian distribution with euclidean distance metric, or more simply, a quantity related to how close they are (that follows a gaussian distribution) or something like a weighted nearest neighbour model. If they’re far away from each other, the inner product is small, if they are near each other, the inner product is large. Spread out in a smooth manner due to the gaussian distribution.

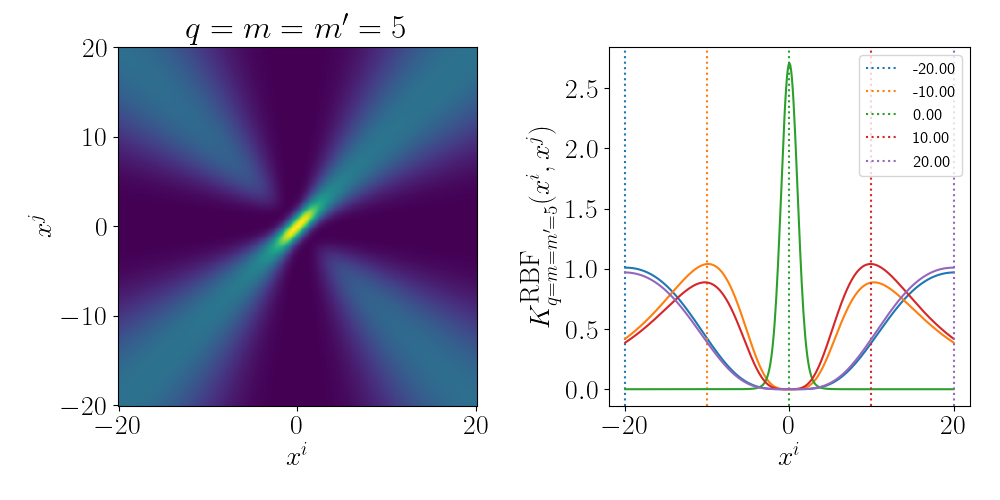

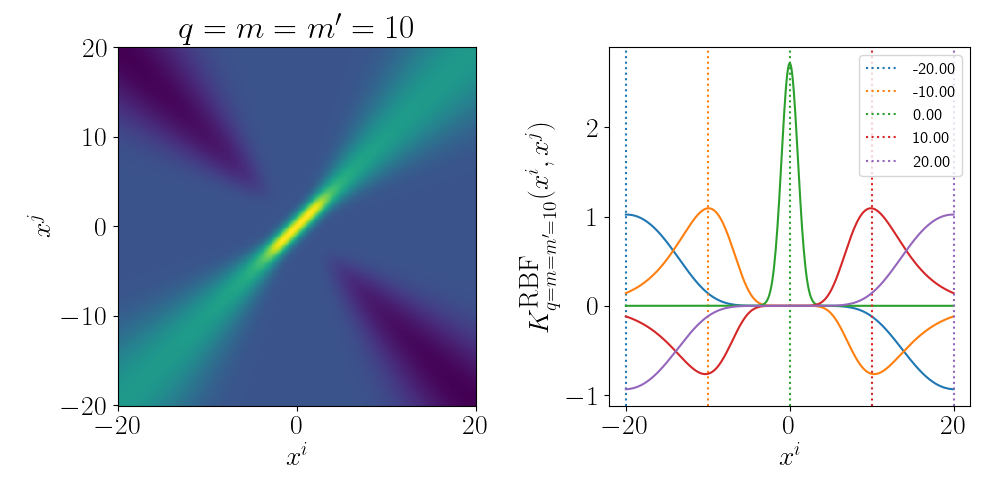

Although the RBF Kernel works in infinite dimensions, we can visualise it’s truncated version to see how the behaviour arises. Thanks to Andy Jones for the heatmap code in their blog posts RBF kernel as an infinite feature expansion so I didn’t have to write it myself, please check it out if you have the time!

Essentially as stated above, the inner product/kernel function value is large when the variables are of a similar value.

There are a bunch more kernels to model different behaviours including the periodic kernel and Matern kernel, and you can combine them to model different behaviours! You can play around with some different kernels here. It’s a Gaussian Process interactive website that you can just think of it as something that will plot the forms of equations/relationships between coordinates that the kernels imply. I’ll demonstrate some more through the following examples.

Awesome Kernel-Based Methods

Kernel Discriminatory Analysis (KDA II)

Resources

The Gist

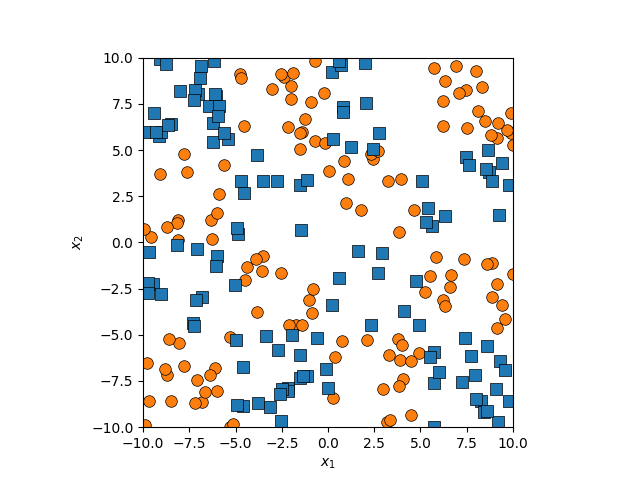

So, apply the kernel trick to Fisher Discriminant Analysis yields us Kernel Fisher Discriminant Analysis or KDA for short. Let’s first have a look at the last example I gave for the support vector model section.

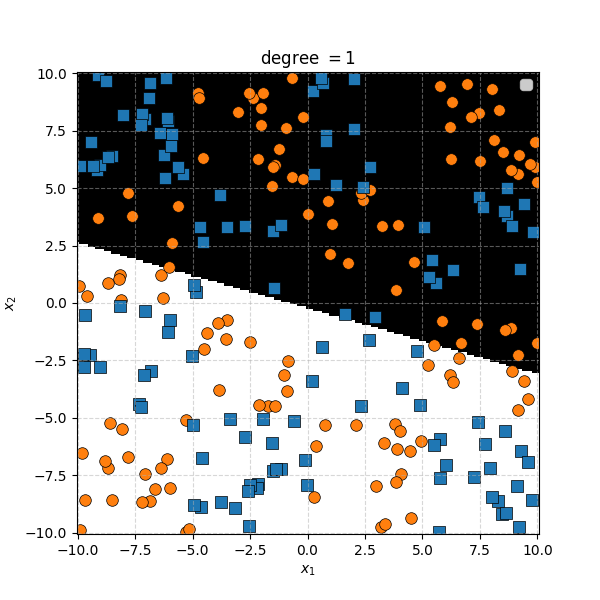

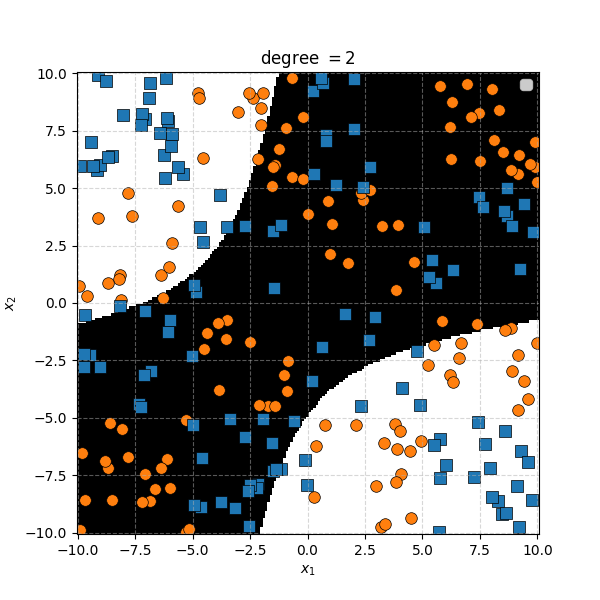

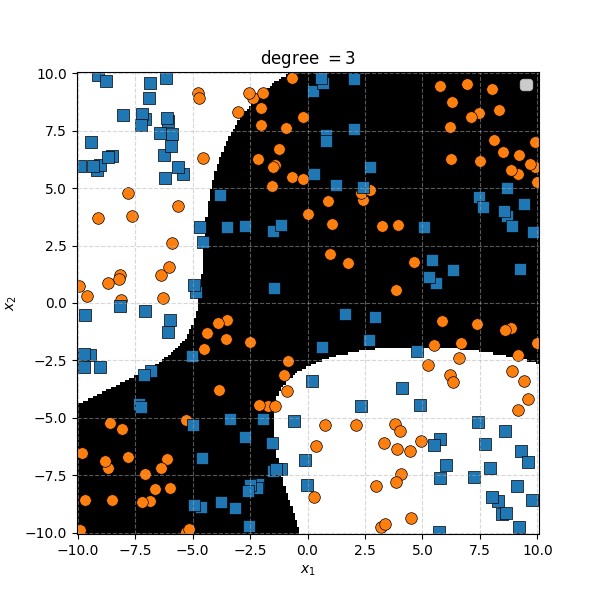

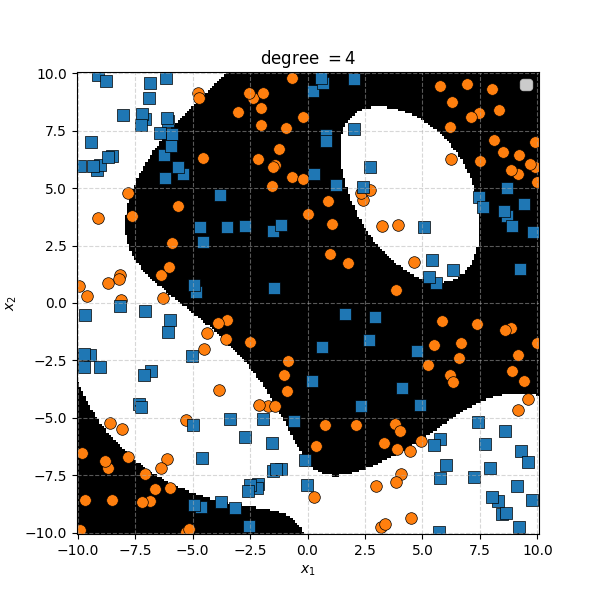

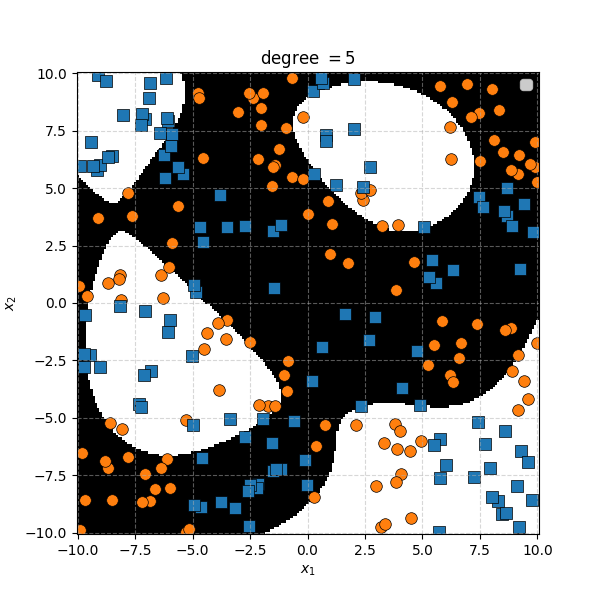

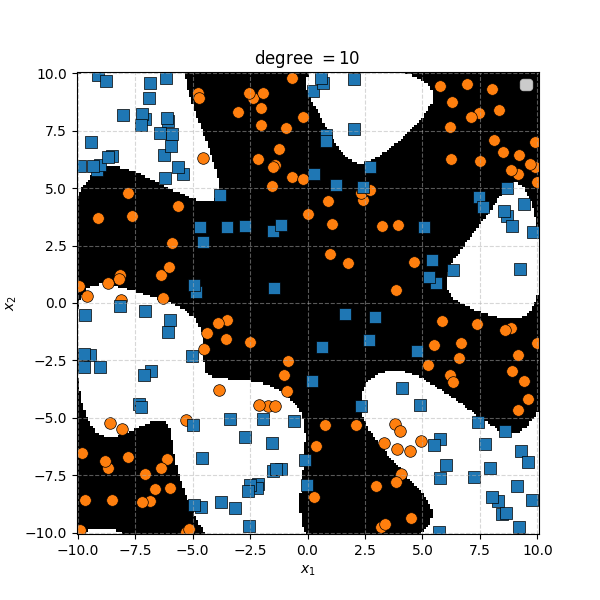

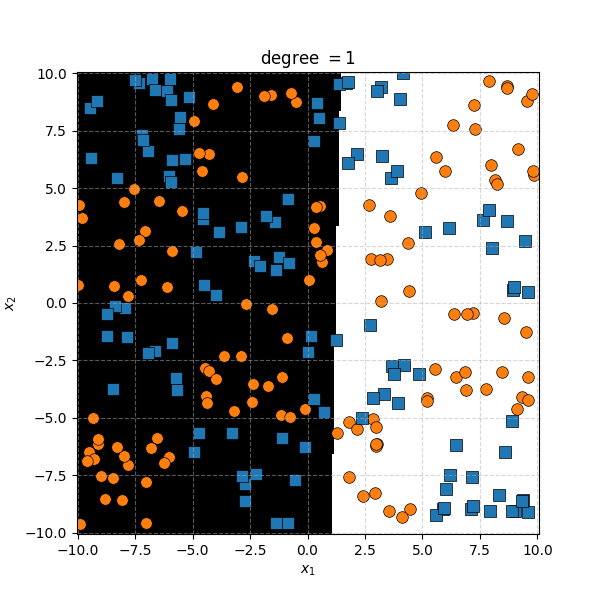

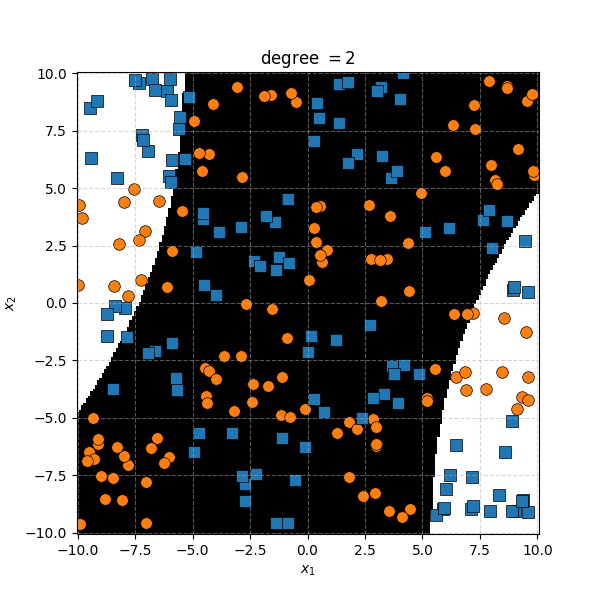

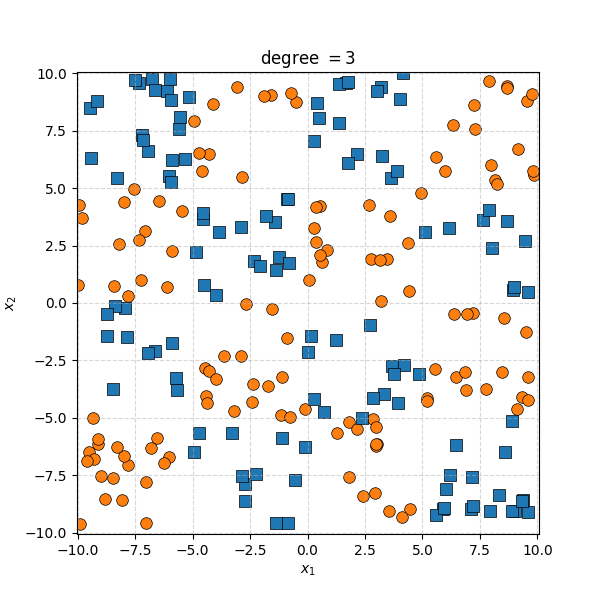

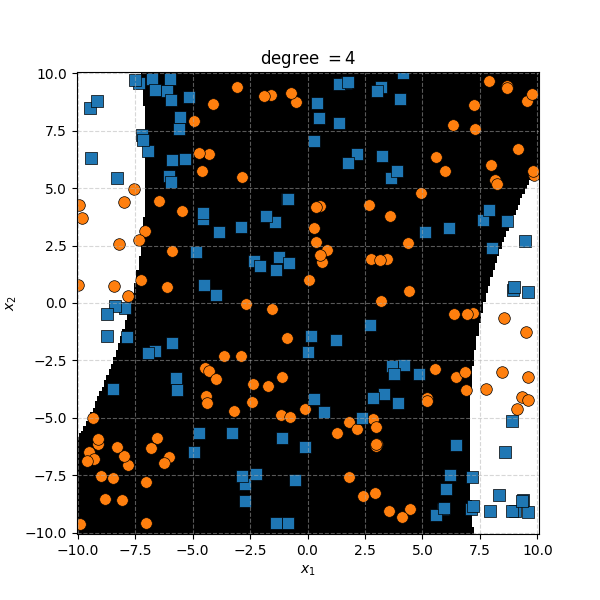

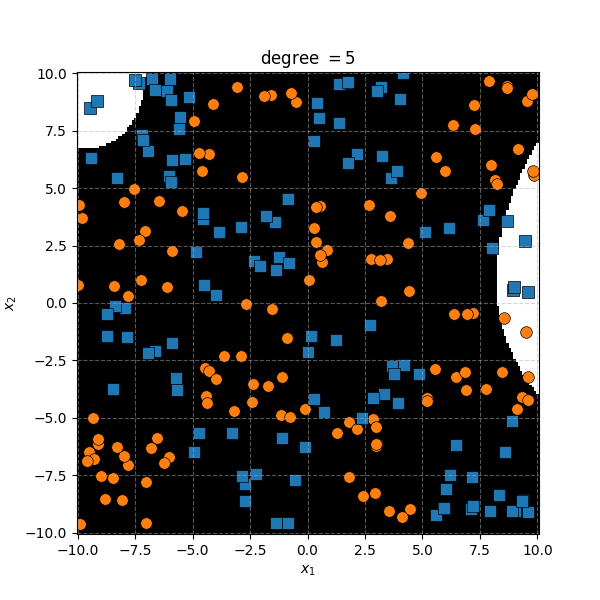

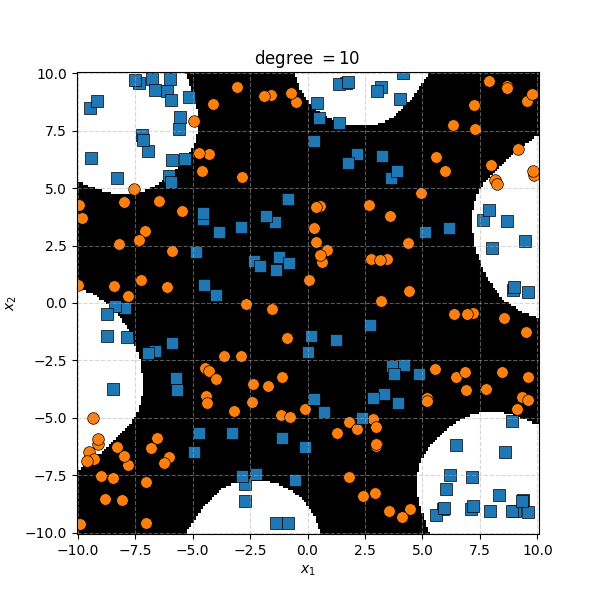

There isn’t an easy way to see what polynomial kernel would be able to distinguish between the two groups here. So let’s just examine the behaviour as we increase the degree of the polynomial kernel.

So the degree 1 polynomial kernel correspond to the linear separation boundary and degree 2 you can intuit is the same as the quadratic decision boundary. As we increase the degree of the kernel we can extract more fine tuned decision boundaries but it also becomes more susceptible to overfitting and noise. And we get to do all this without actually having to store or calculate within the project polynomial spaces!

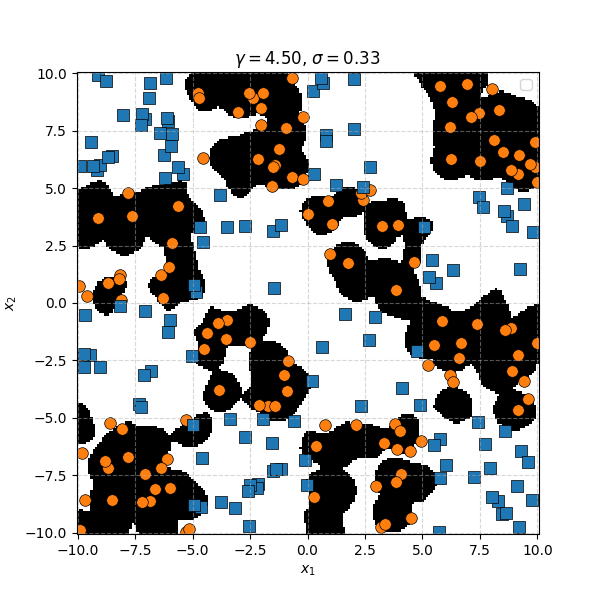

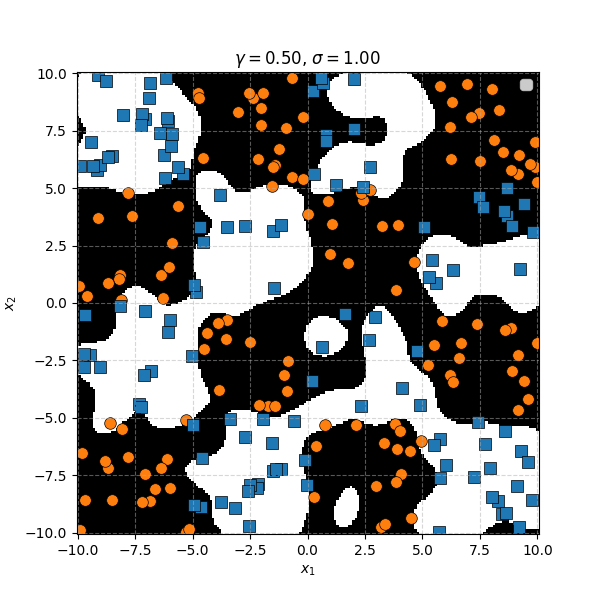

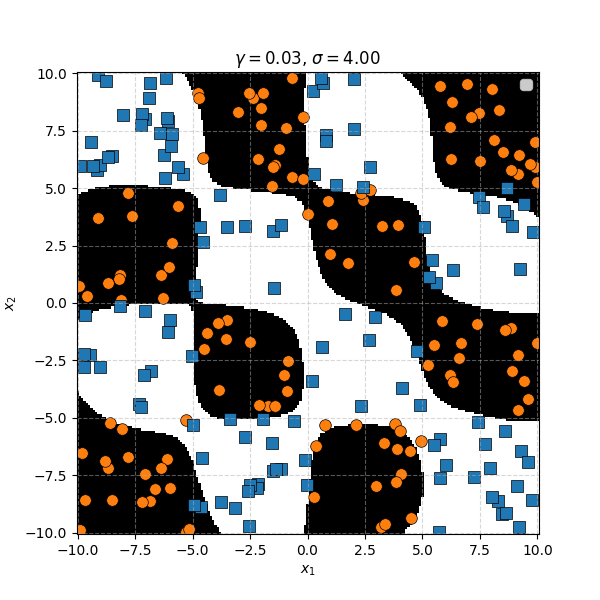

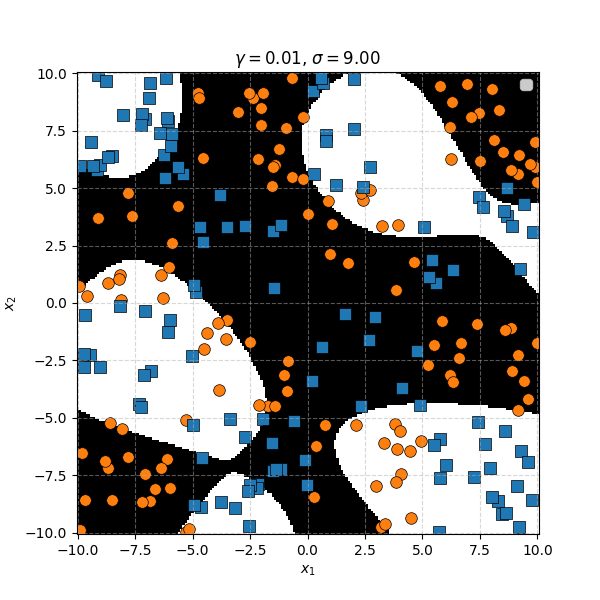

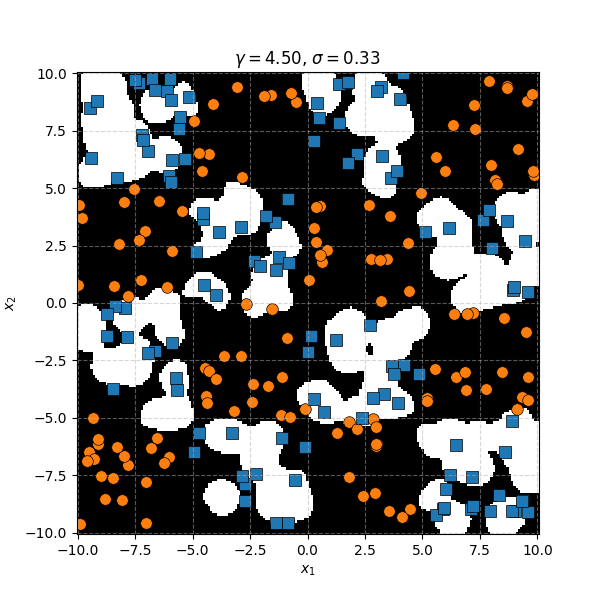

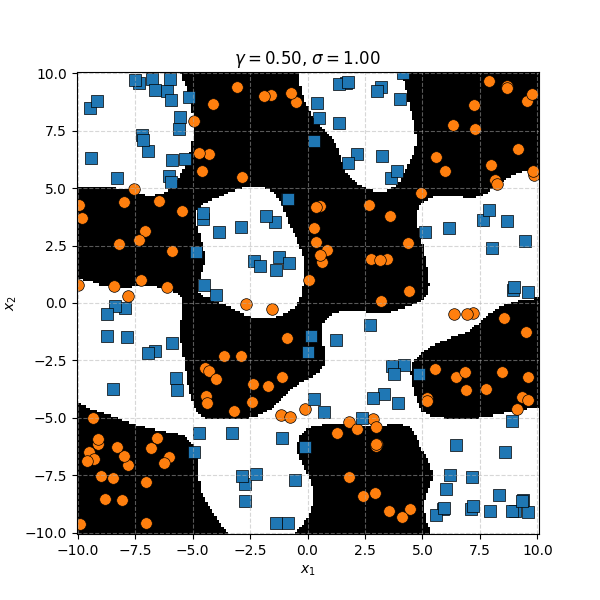

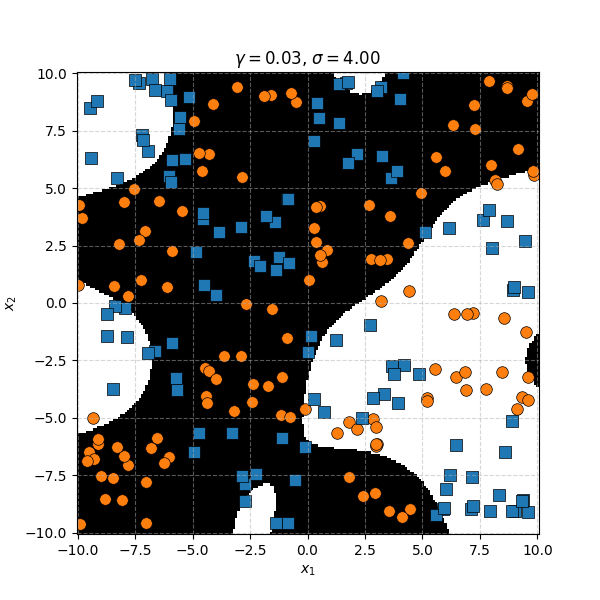

In this case we can just immediately tell that the data is formed into blocks of groups, so it might be better to apply the RBF kernel that allows us to encode behaviour based on the distances between the points. Scaling the \(\gamma\) or equvialently the \(\sigma\) of the kernel changes the length scale that we presume the data is following. Let’s also have a look at these and see the impact on the decision boundaries that the KDA implies.

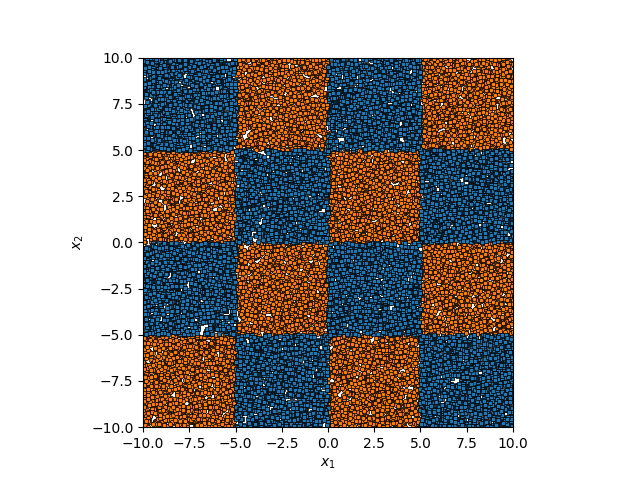

We can see that for smaller values of \(\sigma\) we can capture features on a smaller scale, but again, we become more susceptible to noise. While for larger length scales we can capture larger scale features or behaviours. In this case I would say that a length scale of 4 worked the best, which closely matches the checkboard pattern that I use to generate the samples which you can see below.

Here’s the code that used to do the KDA, all that it required was using the Gram matrix, K, and using that in place of the explicit dot products in the original section.

Support Vector Machines (SVM II)

Resources

- Manifold hypothesis

- Support Vector Machine - Wikipedia

- Only once did I get to this section did I realise how unoriginal I am sometimes. My support vector model plot is almost exactly the same as the Linear SVM plot on this page

- CS229 Lecture notes - Support Vector Machines

The Gist

The inclusion of the kernel is what turns a basic support vector model into the widely used support vector machine. Remember from the previous section on the topic that our loss was as follows,

\[\begin{align} L = \frac{1}{2} &\sum_i \left [ \alpha_i y_i \left(\sum_j \alpha_j y_j \, \vec{x}_i \cdot\vec{x}_j \right) - \alpha_i \right]. \end{align}\]To make this ‘kernel-ized’ we can imagine the projection operation into the higher dimensional spaces we were doing as some sort of transformation, \(T\), and chuck that in.

\[\begin{align} L = \frac{1}{2} &\sum_i \left [ \alpha_i y_i \left(\sum_j \alpha_j y_j \, T(\vec{x}_i) \cdot T(\vec{x}_j) \right) - \alpha_i \right]. \end{align}\]We then can replace that dot product by the kernel of our choice, \(K(\vec{x}_i, \vec{x}_j)\),

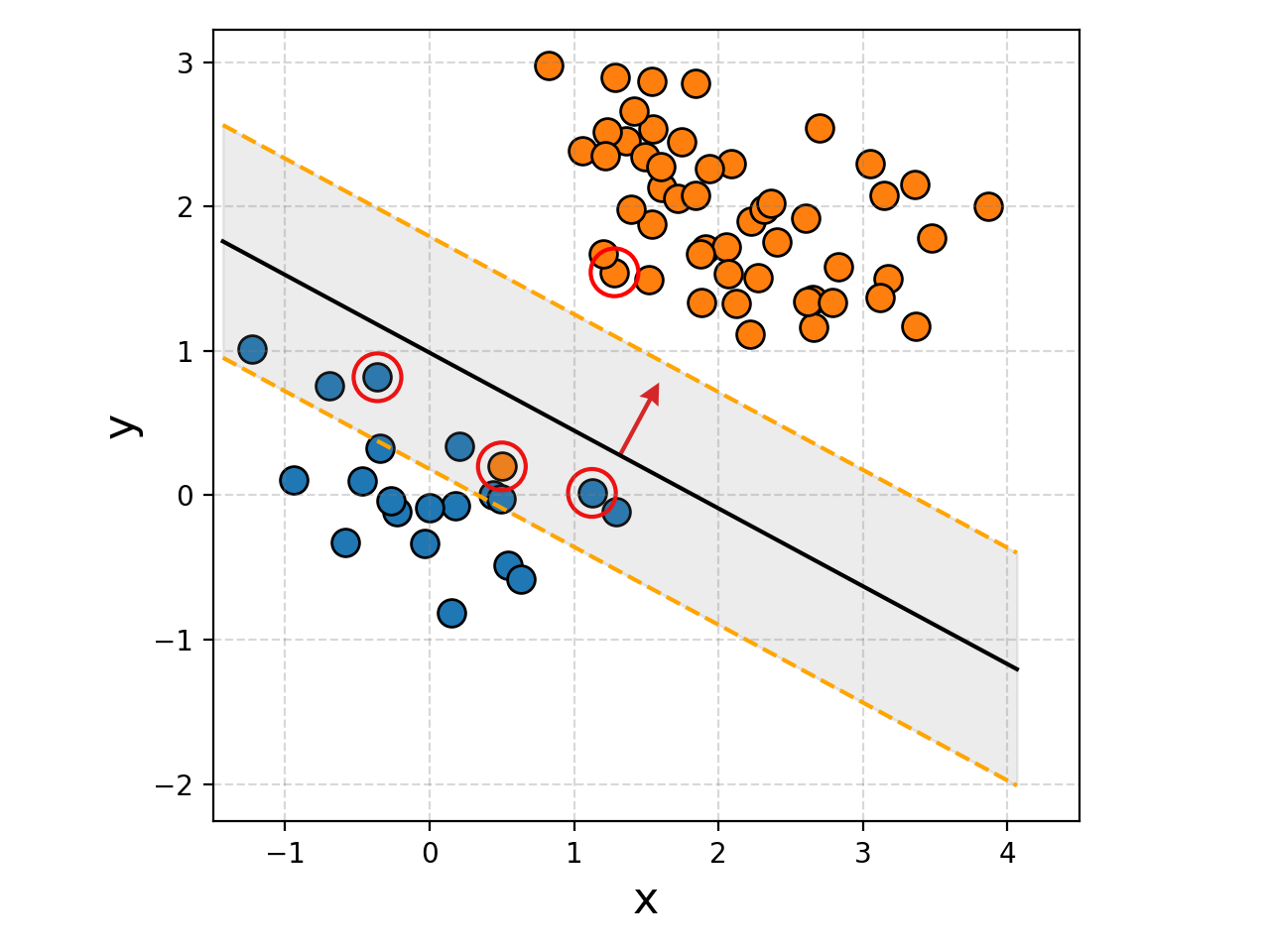

\[\begin{align} L = \frac{1}{2} &\sum_i \left [ \alpha_i y_i \left(\sum_j \alpha_j y_j \, K(\vec{x}_i, \vec{x}_j) \right) - \alpha_i \right]. \end{align}\]Then boom! We have a kernel-based method! Now let’s see how much better/worse the support vector machine setup does compared to the KDA setup on the checkerboard example.

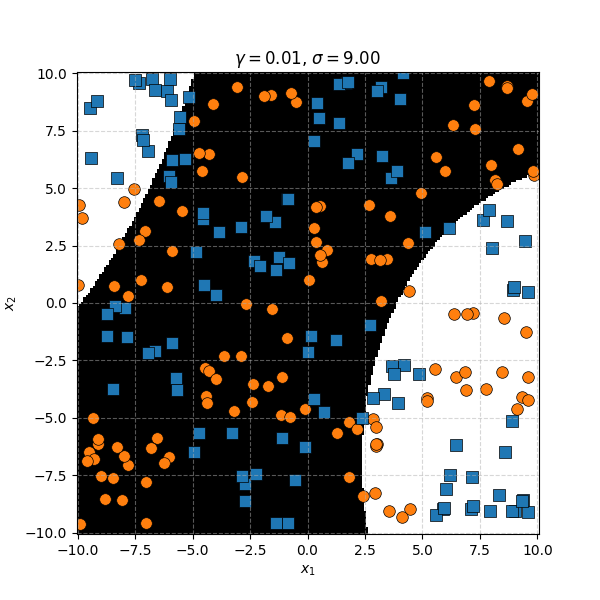

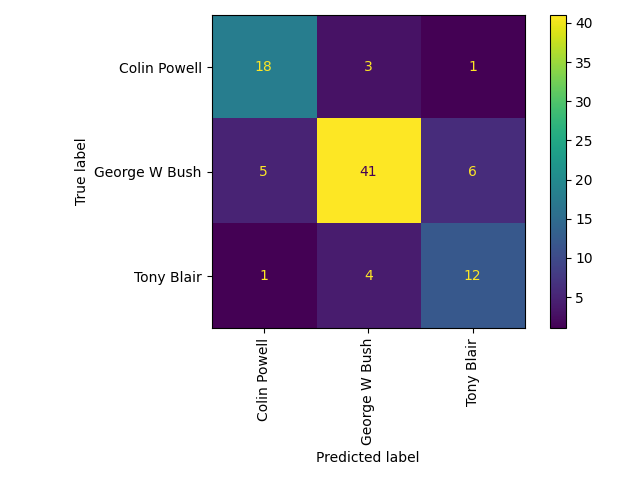

Instead of writing my own code this time I’m just using `sklearn.svm.SVC’ which is a support vector machine classifier which I modelled the inputs on my custom class above on. First we can look at the polynomial kernels.

It seems the support vector machine is doing notably worse. However it generally isn’t fitting random noise variations like the KDA approach did. Likely because the data is so noisy that it can’t create reasonable margins very well. And then the RBF kernels.

Similar to before, for this specific dataset the SVM isn’t doing as well. However, it’s interesting to note that in the case of the very small length scale it does pretty well. Eespecially if you compare it to the equivalent KDA plot that seems to be not really picking up any overall structure while the SVM seems to be doing so.

And quick side note, using the above class you can extract the support vectors being used to make the decision boundaries. In the cases above however it turns out to be almost every single point so I didn’t see the point either.

Kernel Ridge Regression (KRR II)

Resources

- Kernel ridge Regression - Max Welling

- Kernel method

- Kernel regression - Wikipedia

- Only useful as far as to know that it isn’t the same as Kernel Ridge Regression

- Kernel Methods and SVMs - Justin Domke

- Kernel Ridge Regression - Cynthia Rudin

- Is much simpler than what I have, but I didn’t like a substitution that they make at the beginning without a robust explanation. I basically follow what they do after I express \(\vec{\alpha}\) in terms of \(\vec{\beta}\)

- Comparison of kernel ridge and Gaussian process regression - scikit learn documentation

The Gist

Similar how we transition from linear support vector machines to general support vector machines we can generalise ridge regression but reducing the problem down to just involve dot products between the variables that we wish to project into the higher dimensional feature space.

What we can do is note that the lost function we constructed,

\[\begin{align} L(\vec{\alpha}) = (Y - X \vec{\alpha})^2 + \lambda \vec{\alpha}^2 , \end{align}\]likely won’t actually be able to go to exactly 0, but the optimisation will be done when we reach a local minima with respect to \(\vec{\alpha}\). Hence, taking a derivative of the above and setting it to \(\vec{0}\) we find,

\[\begin{align} \vec{0} = \frac{\partial L}{\partial \vec{\alpha}}(\vec{\alpha}) &= \frac{\partial}{\partial \vec{\alpha}} \left( (Y - X \vec{\alpha})^2 + \lambda \vec{\alpha}^2 \right) \\ &= \sum_i \frac{\partial}{\partial \vec{\alpha}} (Y_i - X_i \vec{\alpha})^2 + 2 \lambda \vec{\alpha} \\ &= - 2 \sum_i \frac{\partial}{\partial \vec{\alpha}} (Y_i - X_i \vec{\alpha}) X_i + 2 \lambda \vec{\alpha} \\ \end{align}\]Noting here for clarity that \((Y_i - X_i \vec{\alpha})\) is a scalar and \(X_i \vec{\alpha} = \vec{\alpha}^T X_i^T\) (scalar) with \(P\) being the dimensionality of \(X_i\).

\[\begin{align} \vec{0} = \frac{\partial L}{\partial \vec{\alpha}}(\vec{\alpha}) &= \frac{\partial}{\partial \vec{\alpha}} \left( (Y - X \vec{\alpha})^2 + \lambda \vec{\alpha}^2 \right) \\ &= \sum_i \frac{\partial}{\partial \vec{\alpha}} (Y_i - X_i \vec{\alpha})^2 + 2 \lambda \vec{\alpha} \\ \lambda \vec{\alpha} &= \sum_i X_i^T Y_i - X_i^T X_i \vec{\alpha} \\ (\lambda I_P + \sum_j X_j^T X_j ) \vec{\alpha} &= \sum_i X_i^T Y_i \\ \vec{\alpha} &= (\lambda I_P + \sum_j X_j^T X_j )^{-1} \sum_i X_i^T Y_i \\ \vec{\alpha} &= (\lambda I_P + X^T X)^{-1} X^T Y\\ \end{align}\]Utilising an identity equivalent Woodbury matrix identity called the push-through identity, with \(U = [m\times n]\) and \(V = [n\times m]\)

\[\begin{align} (I_m + UV)^{-1} U = U(I_n+VU)^{-1}, \end{align}\]we can then move some of this around with \(N\) being the number of observations/datapoints.

\[\begin{align} \vec{\alpha} &= (\lambda I_P + X^T X)^{-1} X^T Y\\ &= X^T (\lambda I_N + X X^T)^{-1} Y. \end{align}\]Huzzah! \(X X^T\) is just the Gram matrix! If we look at the \(ij^{th}\) entry then it is \((X X^T)_{ij} = X_i^T X_j\). We can see that the terms on the right are all very calculatable with kernels, but the \(X^T\) hanging around out the front is a little weird. Let’s replace the thing that we can calculate efficiently with just \(\vec{\beta}\) of size \([N\times 1]\) such that,

\[\begin{align} \vec{\alpha} = X^T \vec{\beta}. \end{align}\]If we plug this into our loss we find something really nice,

\[\begin{align} L(\vec{\alpha}) &= (Y - X \vec{\alpha})^2 + \lambda \vec{\alpha}^2 \\ &= (Y - X X^T \vec{\beta})^2 + \lambda \vec{\beta}^T X X^T \vec{\beta} \\ \end{align}\]There it is again! Plopping in the Gram matrix for \(X X^T\).

\[\begin{align} L(\vec{\beta}) &= (Y - K \vec{\beta})^2 + \lambda \vec{\beta}^T K \vec{\beta} \\ \end{align}\]So we can efficiently calculate our loss and project our data onto \(\vec{\alpha}\) implicitly (unless \(N\) is large, in which case inverting \(K + \lambda I\) ain’t gonna be easy). Except we don’t really need to optimise now because we already have the solution for a given \(X\) and \(Y\) by projecting any data implicitly by the \(K \vec{\beta}\) term or for a single observed datapoint \(x_*\), \(\sum_i x_*^T X \vec{\beta} = \sum_i k(x_*, x_i) \vec{\beta}\).

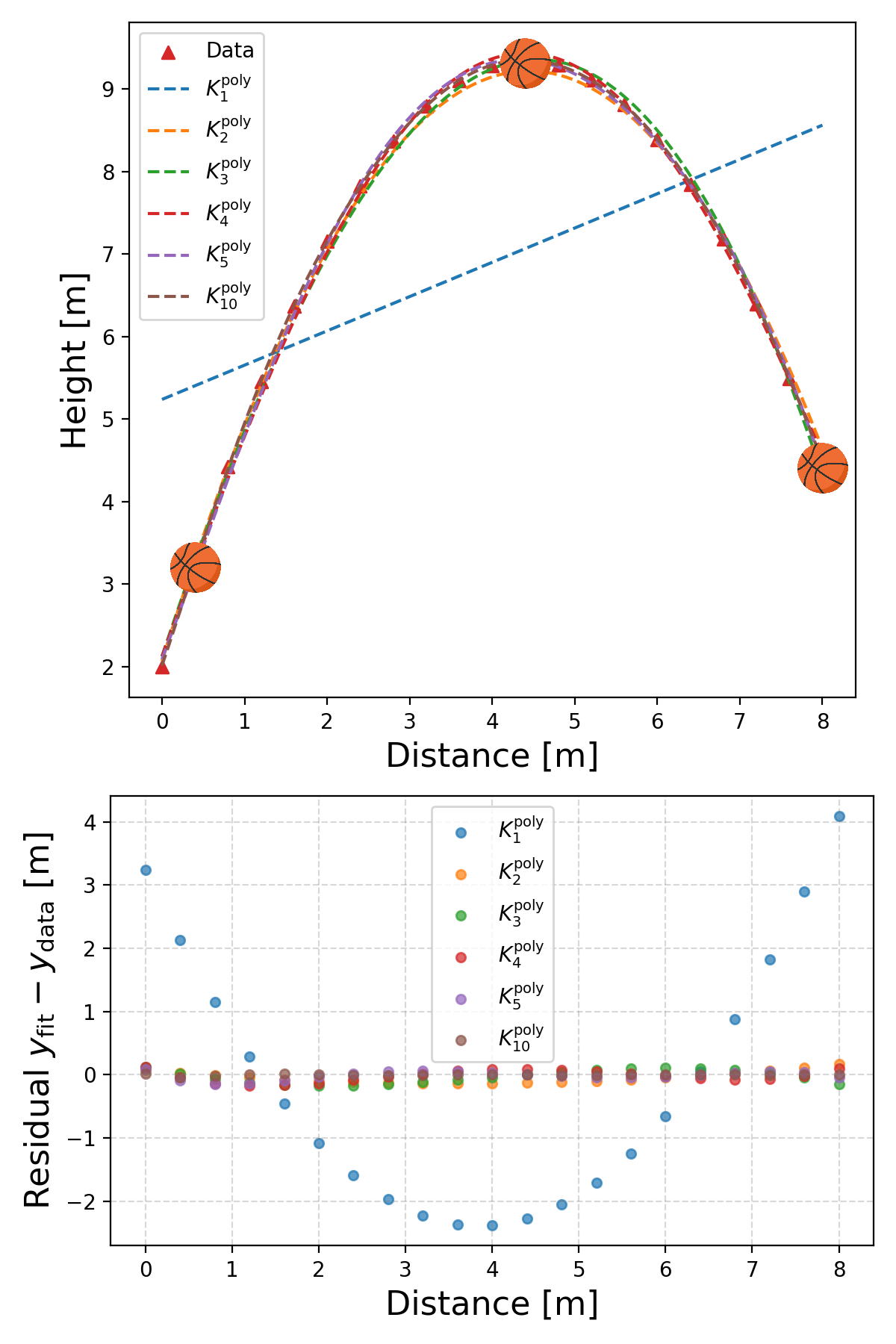

Now that we know that we can do it. Look at the problems from the previous section again. First the basketball data, let’s have a look at some fits for the polynomial kernels and RBF kernels.

First the fits with the polynomial kernel.

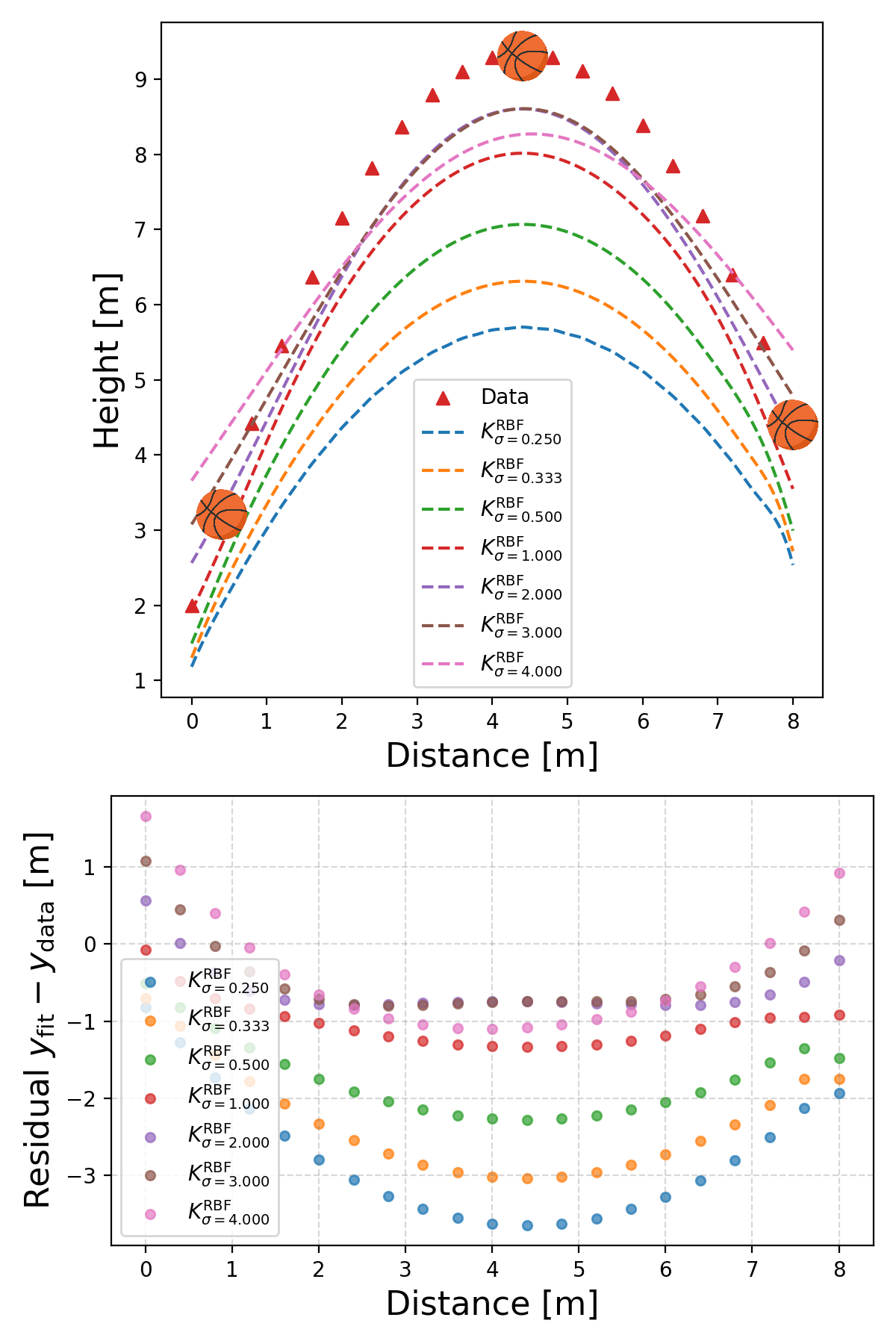

Basically works out to the more the better! And then the RBF kernel.

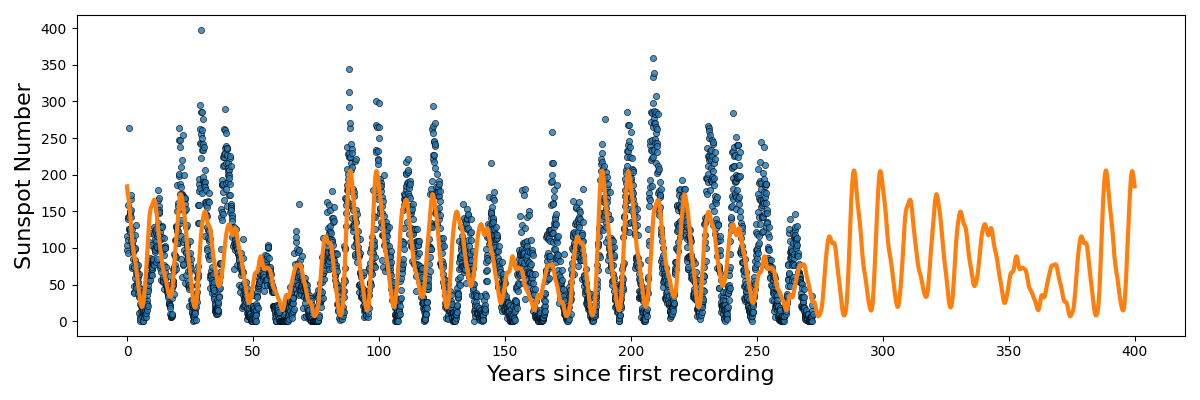

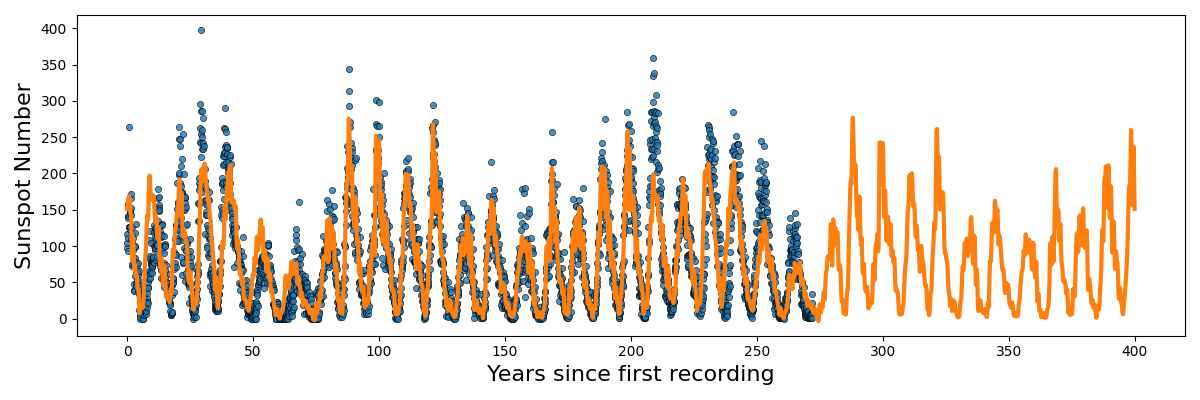

Not as good … basically this is a demonstration that different kernels are suited for different problems. There isn’t a single ‘best’ kernel. For example with the sunspot data.

We obviously have periodic components with non-negative data.

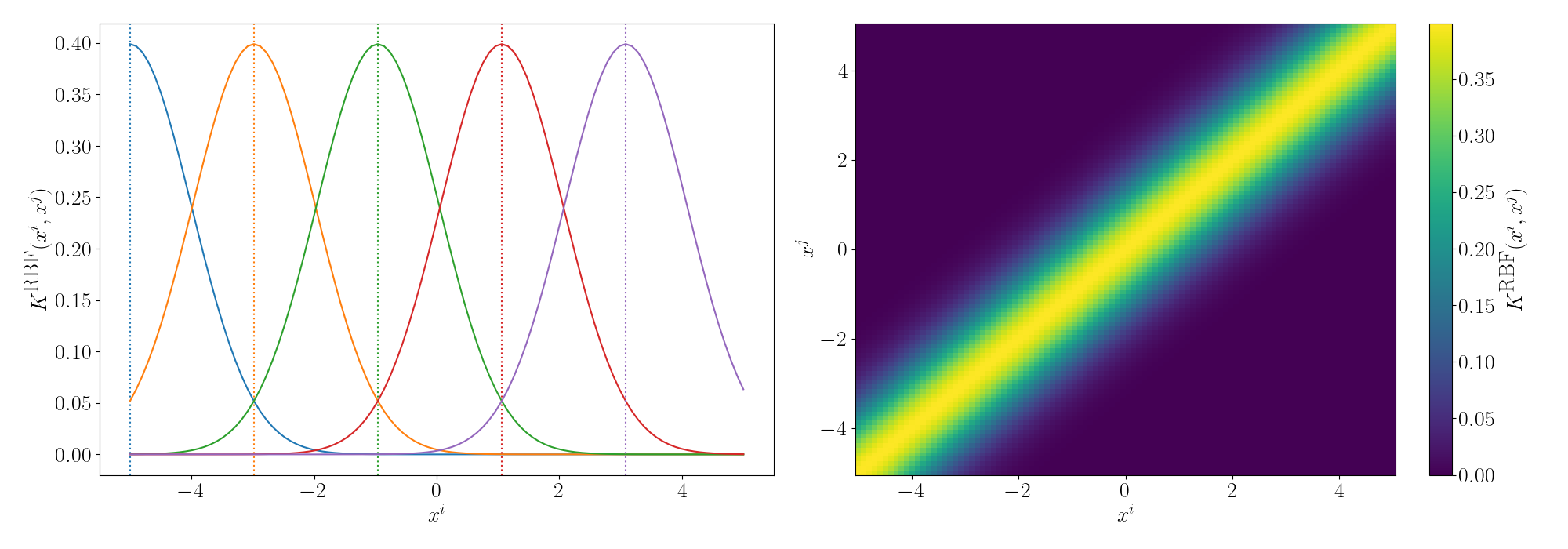

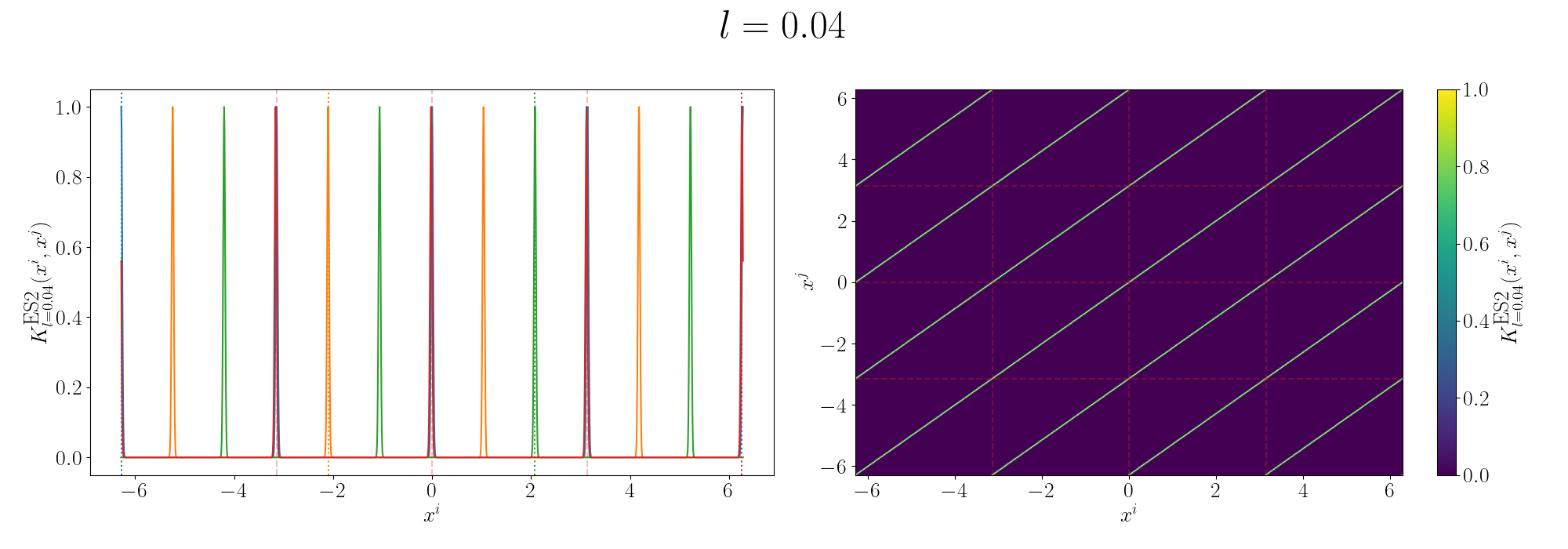

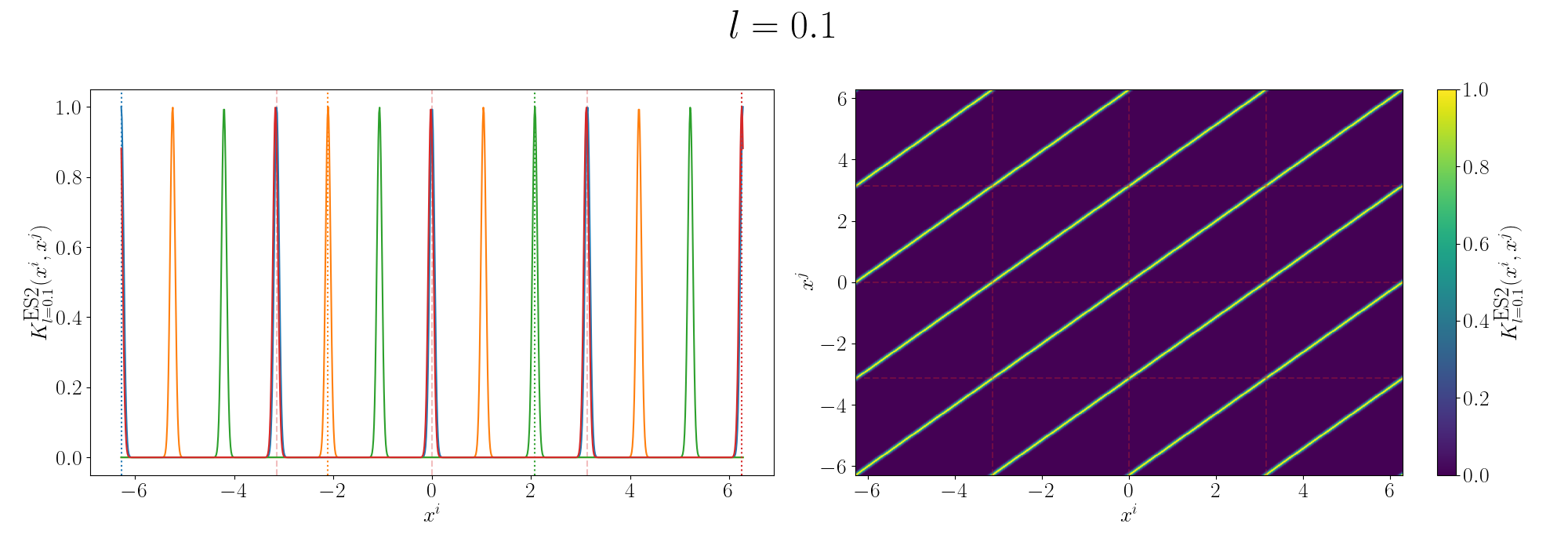

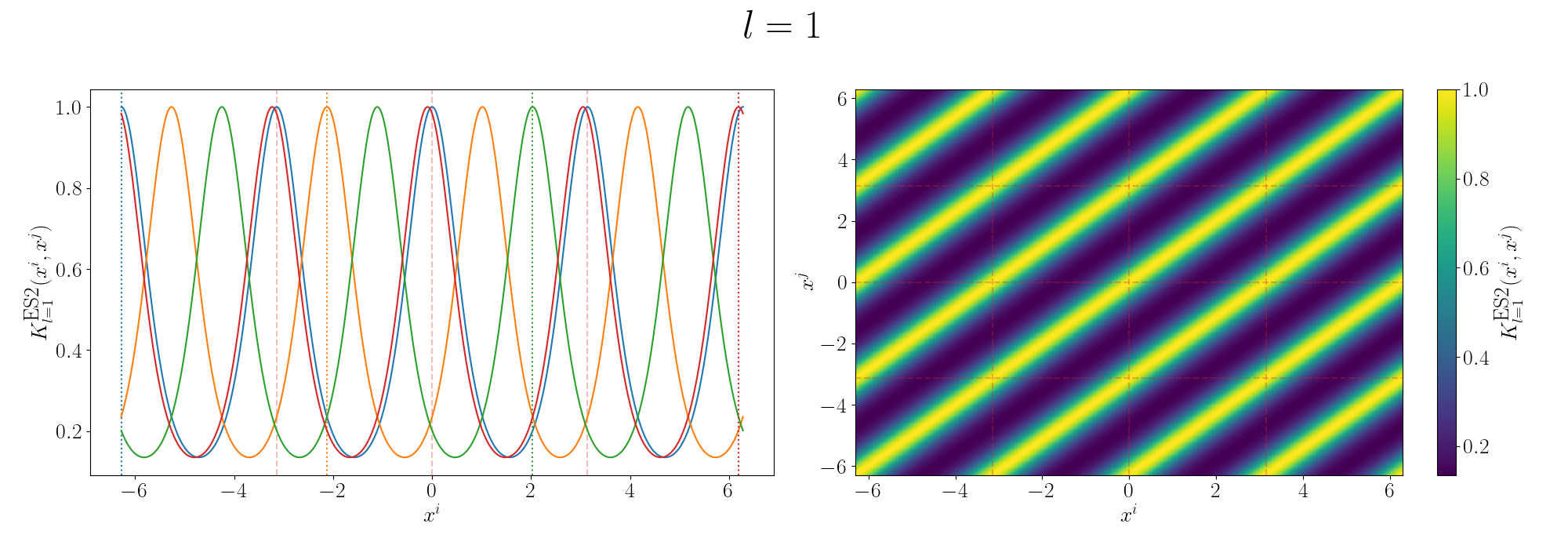

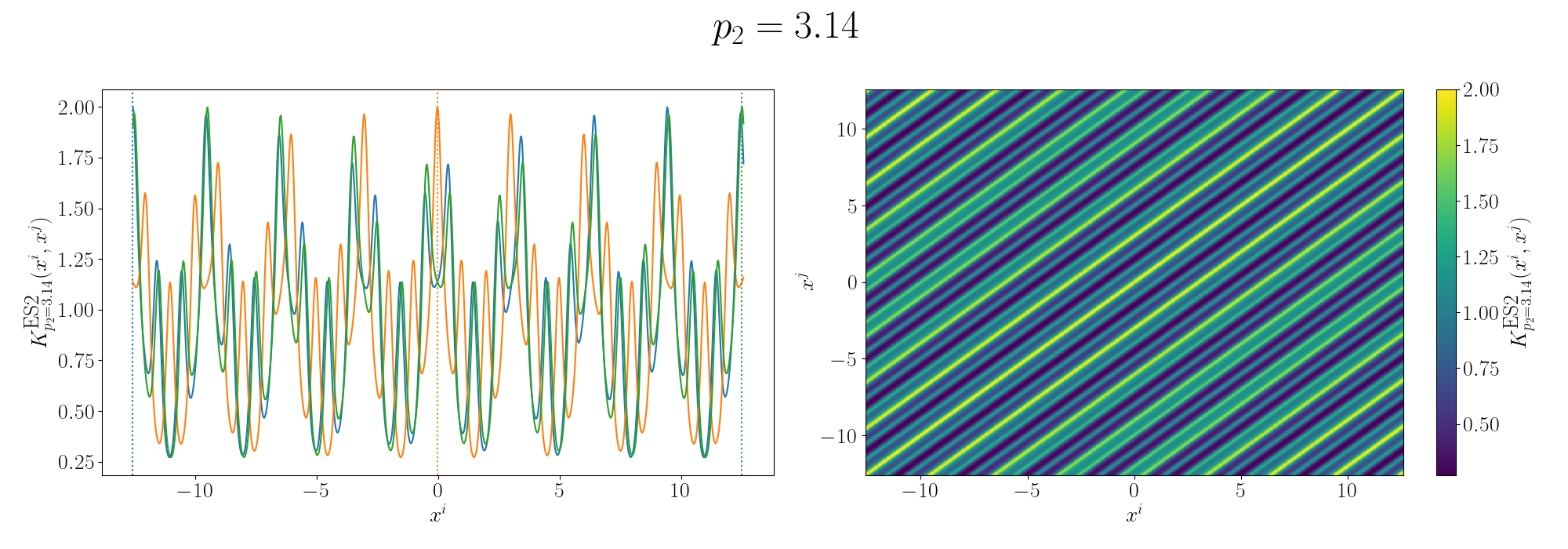

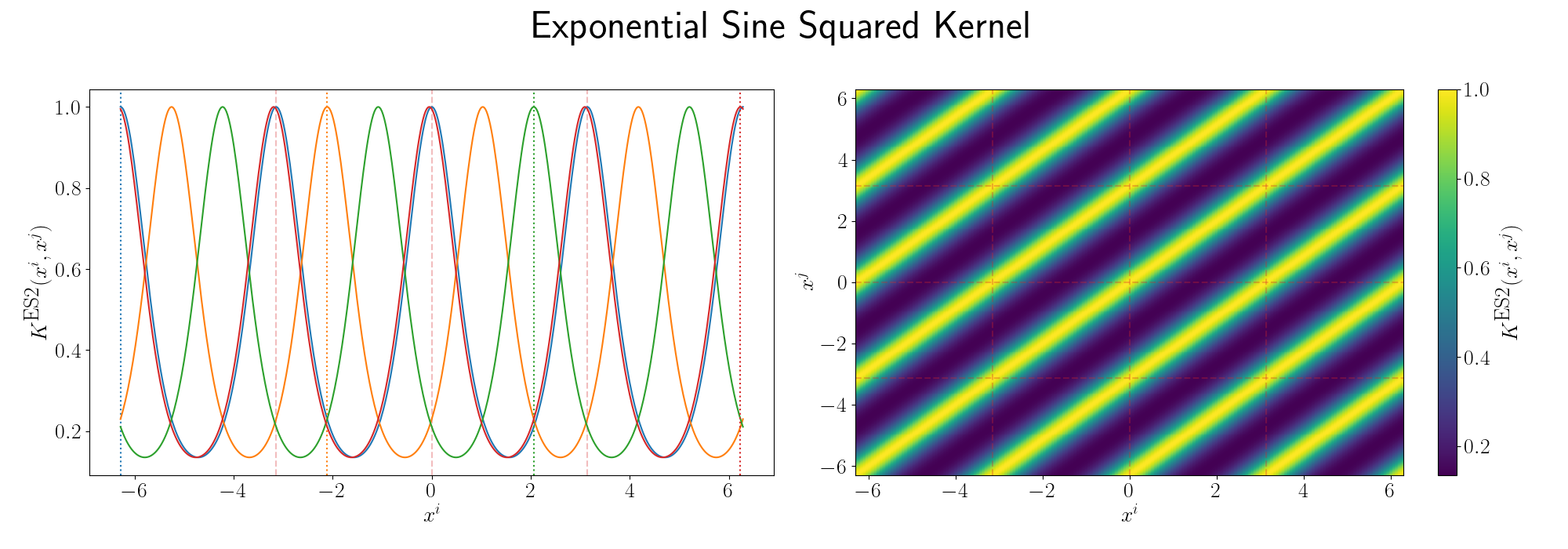

For the periodicity we can use the ExpSinSquared or simply periodic kernel. Functionally what the kernel looks like is the following,

\[\begin{align} k(x_i, x_j) = \exp\left[- 2 \frac{\sin^2\left(\pi \, \vert\vert x_i - x_j \vert\vert^2/p \right)}{l^2} \right], \end{align}\]where \(l\) is the length scale again and \(p\) is the period of the kernel. It’s pretty obvious how the periodicity works here, you have a \(\pi\) periodic kernel, you get \(\pi\) periodic functional representations. What’s not immediately obvious, or at least it wasn’t to me, was how the length scale comes into play.

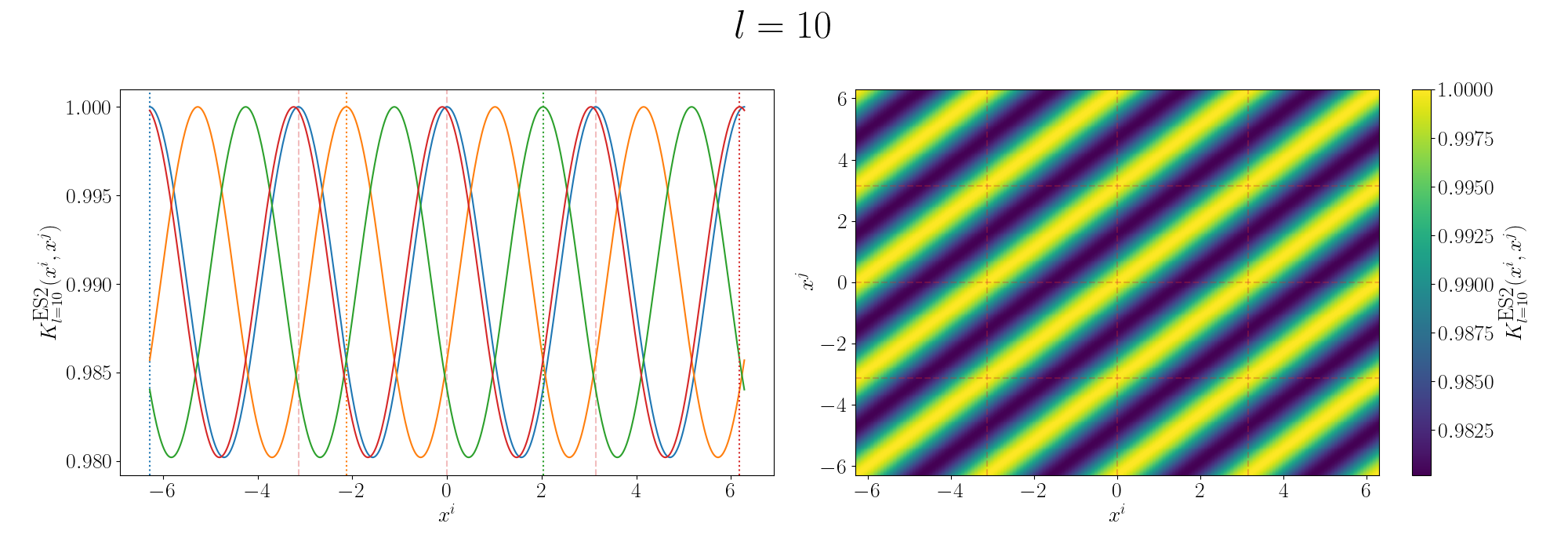

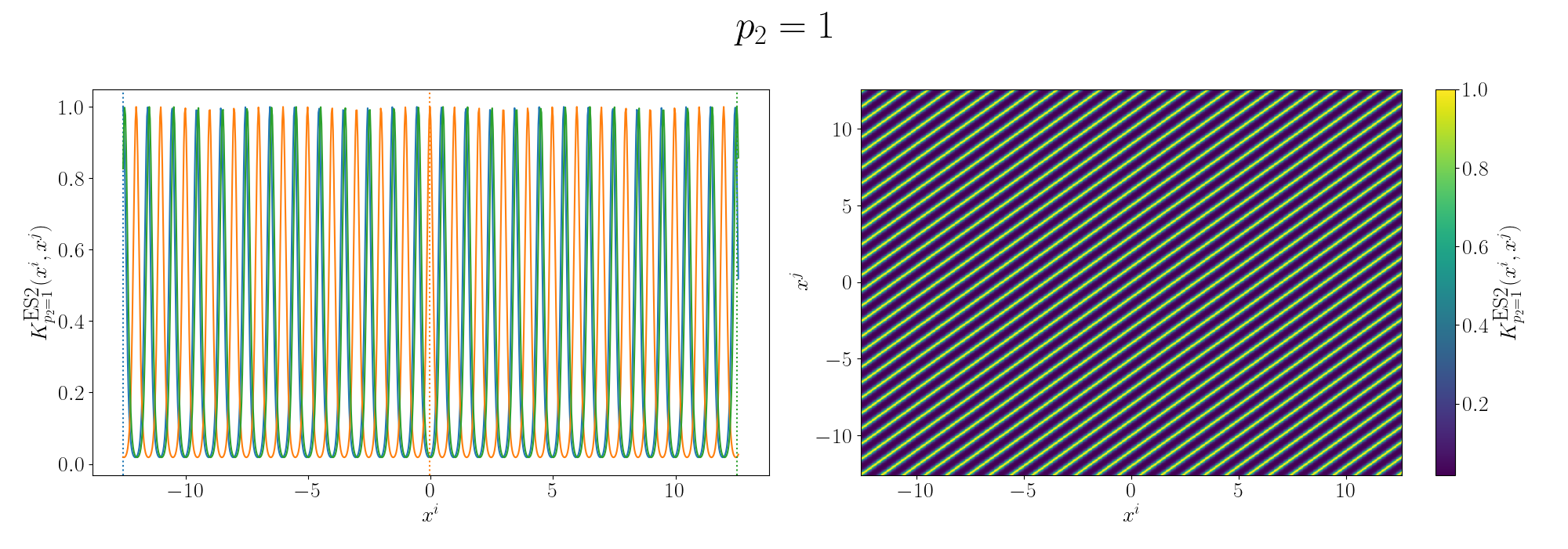

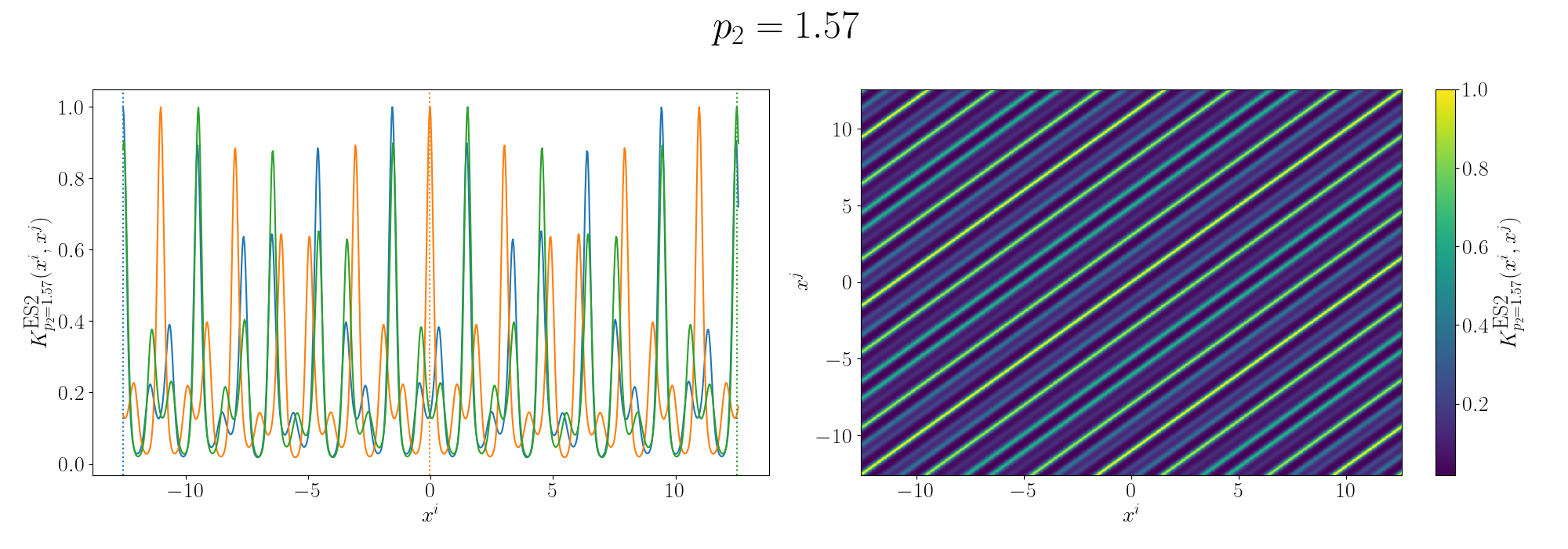

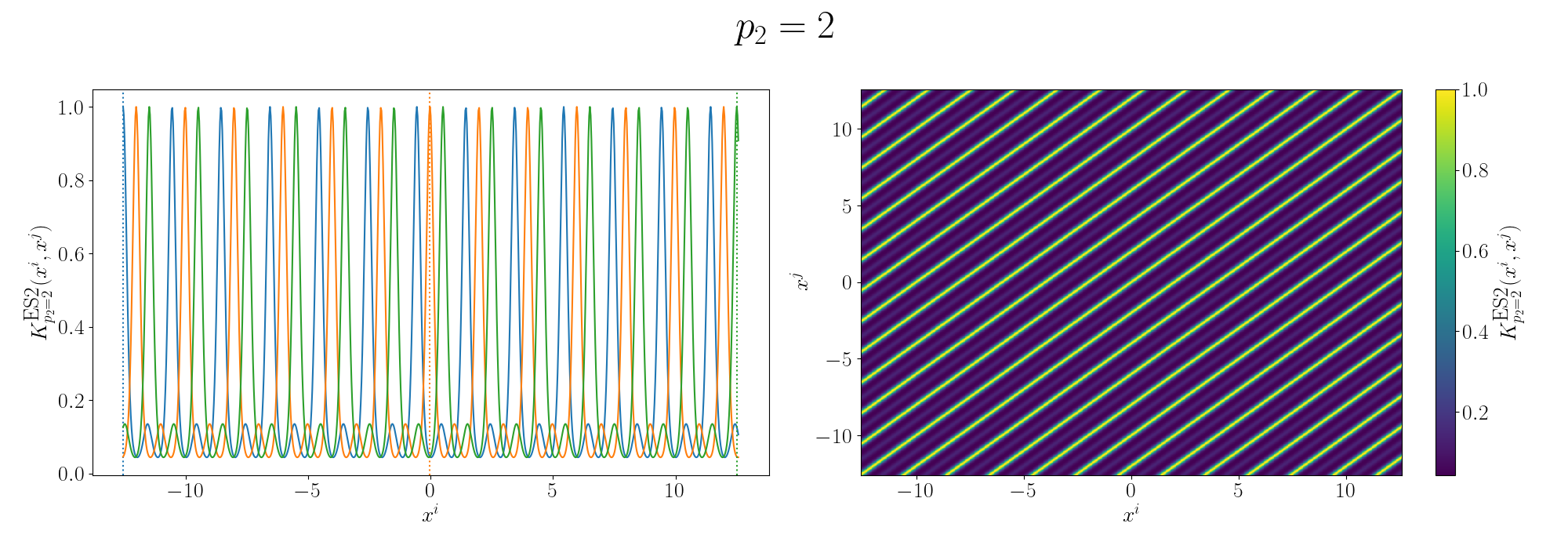

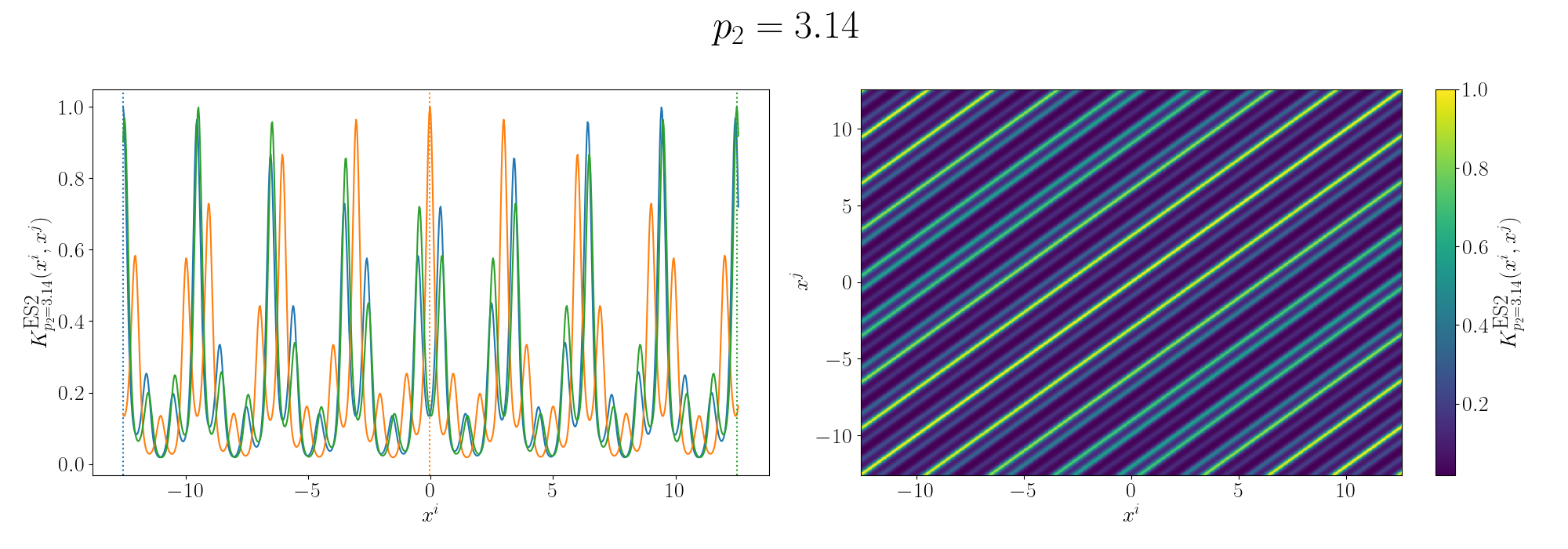

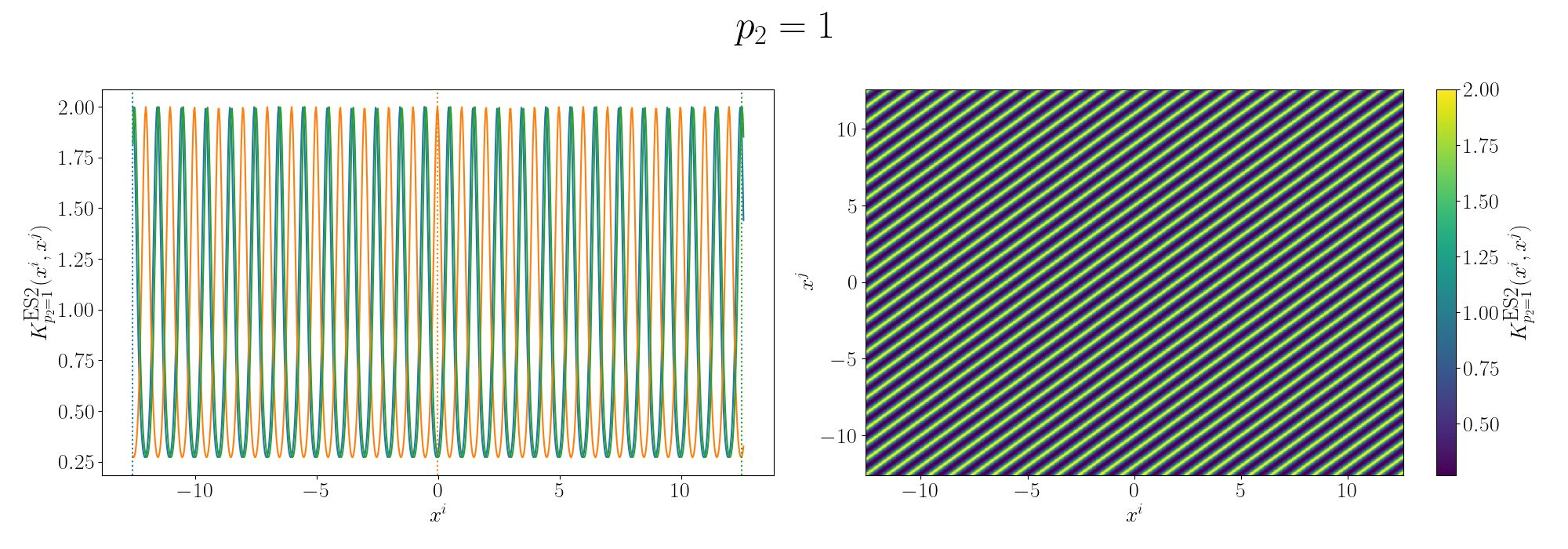

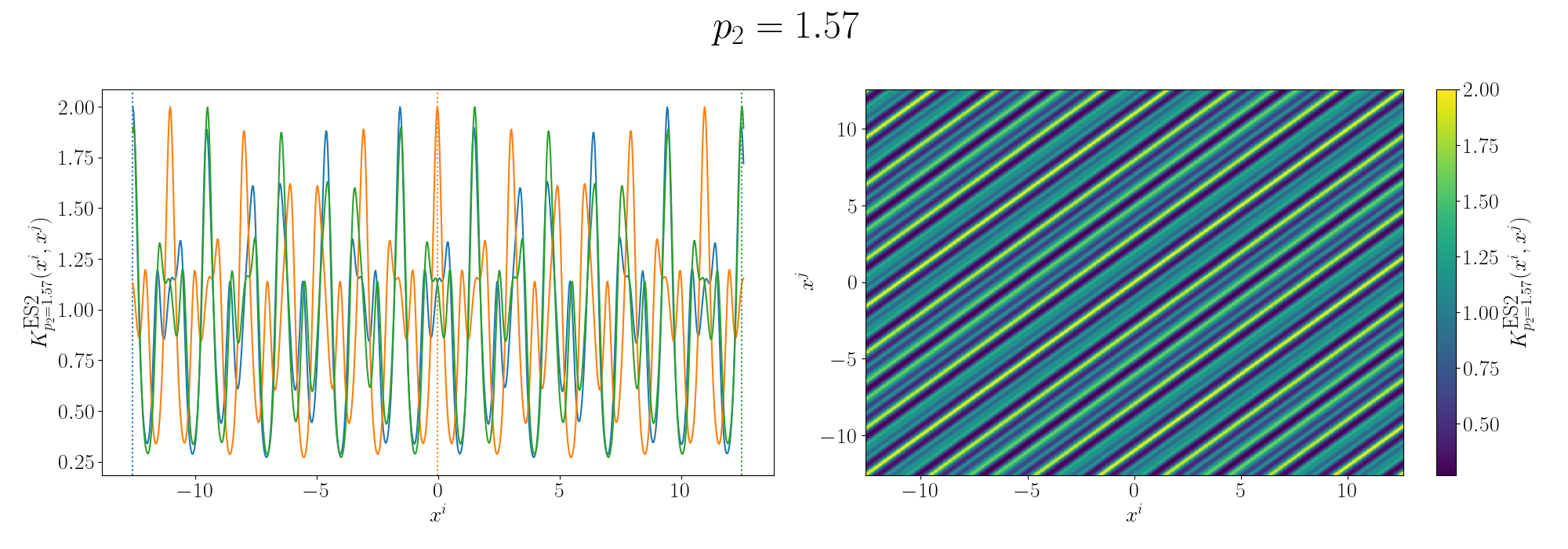

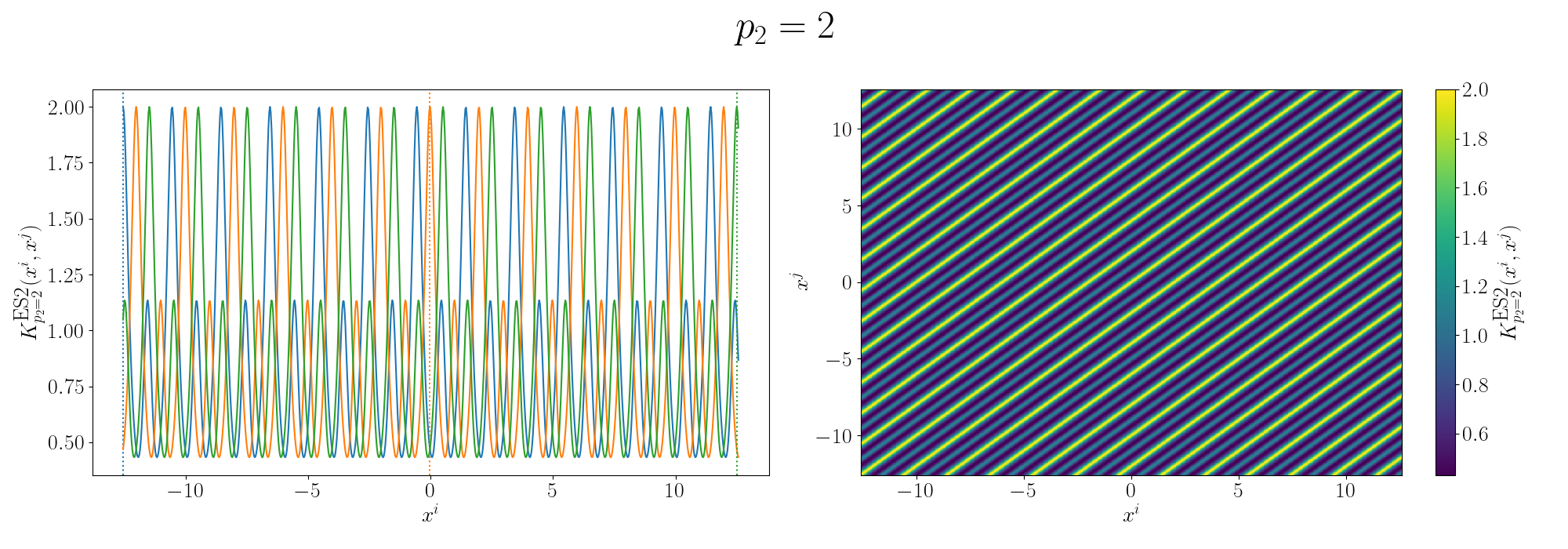

Looks look at some periodic kernel pseudo-Gram matrices for different length scales.

Remembering that these plots are showing the inner products between our inputs we can see the obvious periodicity, we except values that are some integer multiple of \(\pi\) apart from each other to be related. The length scale seems to be how hard of a relationship we want to expect/how much we are fine with deviating away from exact periodicity.

- For large values of the length scale we can model very smooth behaviour where nearby values to the exact integer distances are still meaningfully related.

- For small values of the length scale we model very rough or rapidly changing behaviour, with only values extremely close to the exact integer distances giving non-zero values of the inner product.

And this isn’t just the case for the periodic kernel, the length scale pretty much always behaves this way. Large = smooth and small = rough/rapidly changing or for behaviours that deviate from pure sinusoidal components.

Now, going back to the sunspot data. I couldn’t figure out a good way that wasn’t really ad-hoc for or even gave significantly better results ensuring the non-negativity so we’ll focus on the periodicity. We can first identify that the data isn’t a pure sinusoid. No duh. And that there are likely more than I frequency making up the data.

The remaining question is then how do we handle the multiple frequencies/periodicities? Well if we want to combine the behaviour of multiple kernels (in this case multiple periodic kernels) it’s actually pretty simple. To combine different kernels we can simply add or multiple the function outputs together. In this case I’m going to multiply them together as I think the relationship in the data is something like a periodic signal modulated by some envelope/another periodic component.

For now let’s say that the kernels have the same length scale, and for simplicity we’ll set it to one. Then the derived kernel is something like,

\[\begin{align} k^{prod}(x_i, x_j) &= k^{ExpSin2}_{p=p_1}(x_i, x_j) \cdot k^{ExpSin2}_{p=p_2}(x_i, x_j) \\ &= \exp\left[- 2 \sin^2\left(\pi \, \vert\vert x_i - x_j \vert\vert^2 /p_1 \right) \right] \exp\left[- 2 \sin^2\left(\pi \, \vert\vert x_i - x_j \vert\vert^2 /p_2 \right) \right] \\ &= \exp\left(- 2 \left[ \sin^2\left(\pi \, \vert\vert x_i - x_j \vert\vert^2 /p_1 \right) + \sin^2\left(\pi \, \vert\vert x_i - x_j \vert\vert^2 /p_2 \right) \right] \right) \end{align}\]Let’s look again at some examples of the Gram matrix.

So you can see that we kind of see the functional form of the behaviour we’re expecting6. We can also look at the same graphs for if we added the kernels instead.

So the additive version generally looks like you’ve added two sinusoidal functions together and broadly kind seems to relate the points in a similar looking way to the sunspot data. I’ll go into more details in the Gallery of Kernels section.

But at the end of the day, I was testing out both of them, and the compound/multiplied kernels seemed to do the best.

Here’s the code to reproduce the above.

from sklearn.gaussian_process.kernels import ExpSineSquared

sunspot_data = pandas.read_csv("sunspots/Sunspots.csv")

sunspot_data['Date'] = pandas.to_datetime(sunspot_data['Date'])

first_record = sunspot_data['Date'].min()

# 2. Calculate the difference (timedelta)

time_difference = sunspot_data['Date'] - first_record

# 3. Convert the timedelta to total days (as a float)

# .dt.total_seconds() returns the difference in seconds

# / (60 * 60 * 24) converts seconds to days

days_since_first = time_difference.dt.total_seconds() / (365 * 60 * 60 * 24)

sunspot_X = days_since_first

sunspot_design = np.array([np.ones_like(days_since_first), days_since_first]).T

sunspot_Y = sunspot_data['Monthly Mean Total Sunspot Number']

kernel = (ExpSineSquared(5.0, 10.0, periodicity_bounds=(1e-2, 1e3))

* ExpSineSquared(5.0, 100.0, periodicity_bounds=(1e-2, 1e3)) \

# * ExpSineSquared(60.0, 60.0, periodicity_bounds=(1e-2, 1e3)) \

)

regression_cls = KernelRidge(alpha=1e-8, kernel=kernel)

regression_cls.fit(sunspot_design, sunspot_Y)

num_times = 2000

test_sunspot_X = np.linspace(0, 400, num_times)

test_sunspot_design = np.array([np.ones(num_times), test_sunspot_X]).T

sunspot_Y_fit = regression_cls.predict(test_sunspot_design)

plt.figure(figsize=(12, 4))

plt.scatter(sunspot_X, sunspot_Y, s=20, lw=0.5, edgecolor='k', color='tab:blue', label='Data', alpha=0.8)

plt.plot(test_sunspot_X, sunspot_Y_fit, color='tab:orange', label='Kernel Ridge Prediction', lw=3.0)

plt.xlabel("Years since first recording", size=16)

plt.ylabel("Sunspot Number", size=16)

plt.tight_layout()

plt.savefig("KRidge_Figures/sunspot_data_with_kernel_ridge_fit.png")

plt.show()

And one just keeps multiplying with more and more periodic kernels you can get something that looks like this. At this point though it’s likely overfitting.

Kernel Principle Component Analysis (Kernel PCA II)

Resources

- Manifold hypothesis

- Kernel Principal Component Analysis - Bernhard Scholkopf (pdf link)

- What Are the Advantages of Kernel PCA Over Standard PCA? - Enes Zvornicanin

- Dimensionality reduction. PCA. Kernel PCA - COMP-652 and ECSE-608

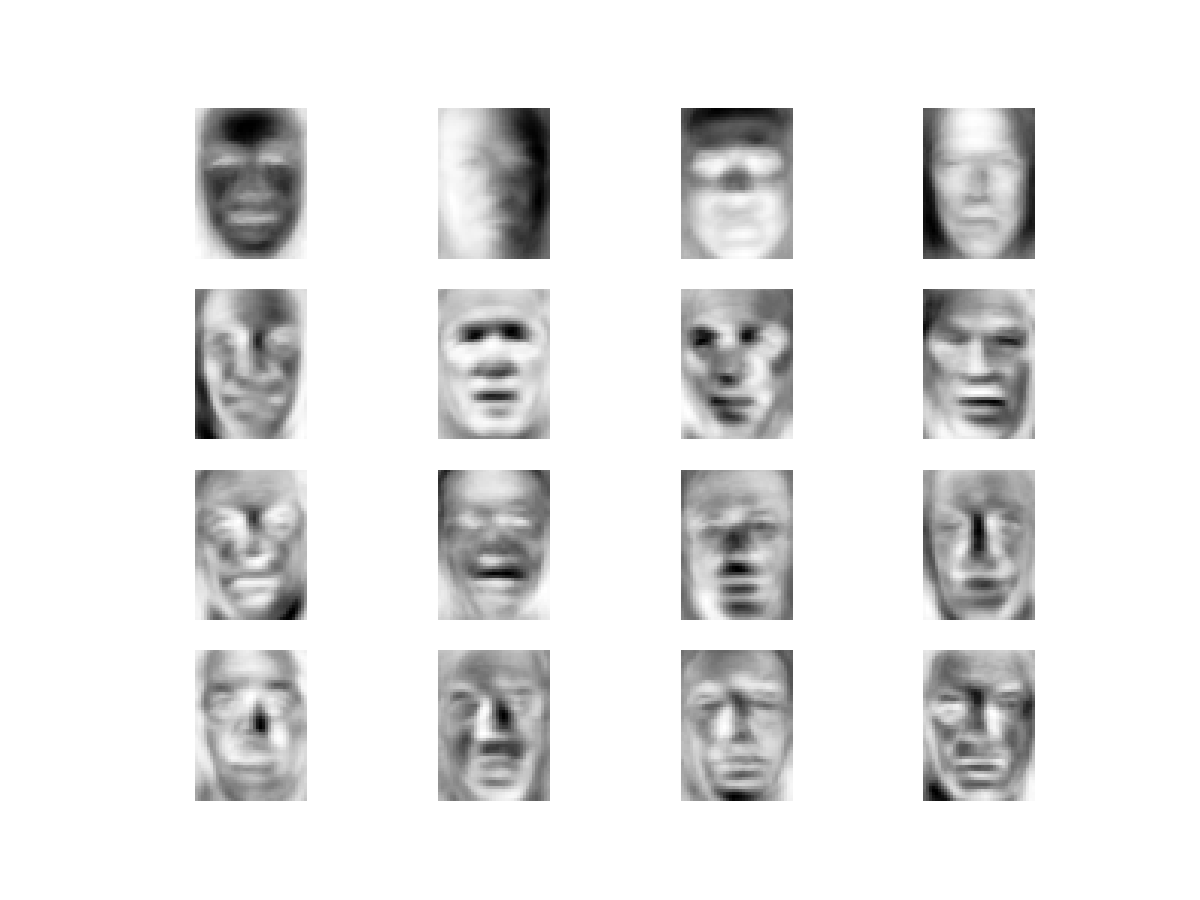

- ML | Face Recognition Using Eigenfaces (PCA Algorithm) - pawangfg

The Gist

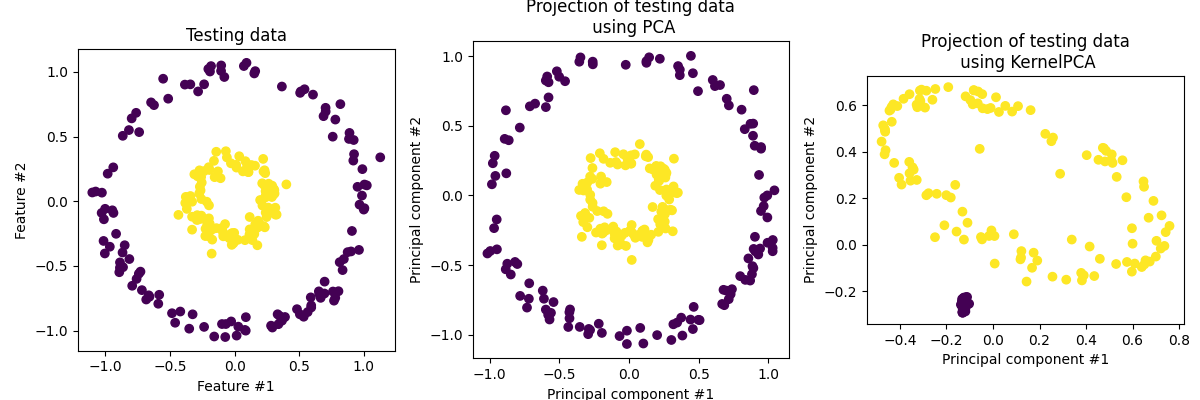

No I’m going to come right out of the gate with the fact that most of the time Kernel PCA or KPCA is not going to be useful to you in the same way that PCA is. Arguably the main benefit of PCA is that you are able to generate a very interpretable combination of your parameters that nicely explains most of the variation in your data. It does however allow separation of data in a similar way if that’s your game.

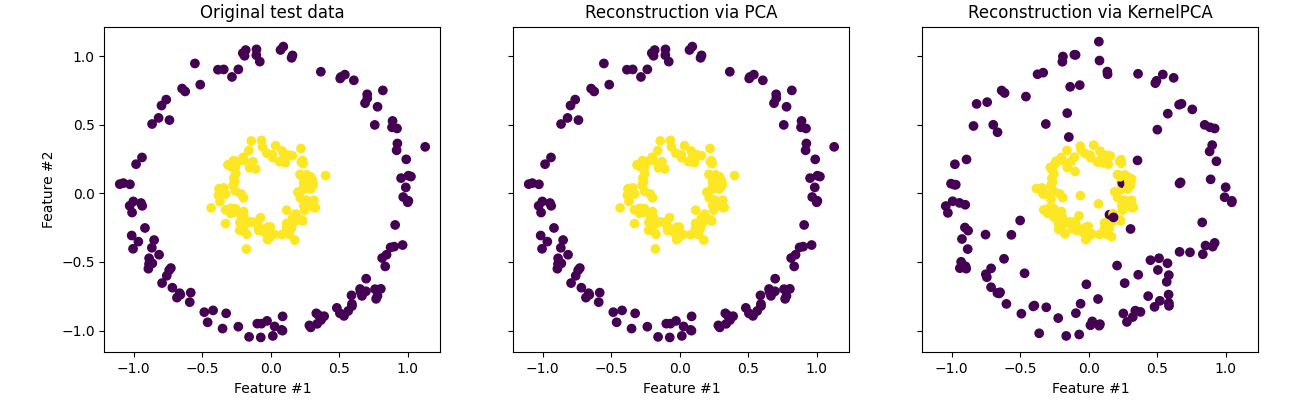

For example, stealing an example right from the scikit-learn page on KPCA.

The interpretability aspect in traditional PCA is because it presumes a linear relationship so any combination comes out to be similar to a weighted representation of how much each component contributes to the variation. It’s more often used as a dimensionality reduction technique than standard PCA particularly within the machine learning community. You can interpret the component coefficients of the as a representation of the data and use that as a smaller input to feed into a neural network or as a smaller latent space to learn key features of the data like an autoencoder for example. (stealing the example from the same page).

But I’m getting ahead of myself, let’s just see how it works first. As a reminder what we did was construct a covariance matrix,

\[\begin{align} S = \mathbb{E}[X^2] - \mathbb{E}[X]^2 = \frac{1}{n}\sum_{i=1}^n X_i X_i^T = \frac{1}{n} \mathbb{X}^T\mathbb{X}, \end{align}\]and then performed a eigen value or singular value decomposition to find the eigenvectors along which the variation in the data is maximised (particularly the first eigenvector),

\[\begin{align} S = P^T D P, \end{align}\]and we could use those eigen vectors, e.g. \(u_j\), and project our data on to them using a dot product \(u_j^T \vec{x_i}\). The key insight here is that if we describe the covariance matrix as the following sum of outer products,

\[\begin{align} S = \frac{1}{n} \sum_{i=1}^n \Phi(x_i) \Phi(x_i)^T, \end{align}\]where \(\Phi(x_i)\) denote the vectors in the projected feature space, then as any eigen vector can by definition be described as \(\lambda_j v = S v_j\) if we place this in the equation immediately above then we find,

\[\begin{align} \lambda_j v_j = S v_j = \frac{1}{n} \sum_{i=1}^n \Phi(x_i) \Phi(x_i)^T v_j = \sum_{i=1}^n \alpha_{ij} \lambda_j \Phi(x_i). \end{align}\](chucking the \(\lambda_j\) at the end and absorbing the \(1/n\) to make the following math nicer). This means than any eigenvector in the feature space can be described as a linear combination of the data points in this space.

\[\begin{align} v_j = \sum_{i=1}^n \alpha_{ij} \Phi(x_i). \end{align}\]No we can’t calculate the actual covariance matrix in the feature space as it would quickly get to big (and for RBF infinite!). But going back to the original PCA, what we really wanted was to project the data on to the PCA components and record the given result as value in this component dimension. So if we imagine that we wanted to project one of our data points \(\Phi(x_m)\) onto a given component in feature space it would look like the following.

Dot product with the given component.

\[\begin{align} \Phi(x_m)^T v_j = \sum_{i=1}^n \alpha_{ij} \Phi(x_m)^T \Phi(x_i) = \sum_{i=1}^n \alpha_{ij} k(x_m, x_i). \end{align}\]That shows that we can represent the dot product as a linear combination of the kernel values. But then what’s \(\alpha_{ij}\)? For this we’re going to chuck our \(v_j\) back into the eigenvalue/vector equation.

\[\begin{align} \lambda_j v_j &= S v_j \\ &= \frac{1}{n} \sum_{l=1}^n \Phi(x_l) \Phi(x_l)^T v_j \\ &= \frac{1}{n} \sum_{l=1}^n \Phi(x_l) \Phi(x_l)^T \left(\sum_{i=1}^n \alpha_{ij} \Phi(x_i) \right) \\ &= \frac{1}{n} \sum_{l=1}^n \sum_{i=1}^n \alpha_{ij} \Phi(x_l) \Phi(x_l)^T \Phi(x_i) \\ &= \frac{1}{n} \sum_{l=1}^n \sum_{i=1}^n \alpha_{ij} \Phi(x_l) k(x_l, x_i)\\ \end{align}\]Then we’re going to do something sneaky and multiply both sides by some arbitrary point projection of our data7, \(\Phi(x_q)\), and substituing our solution for \(v_j\) again on the LHS,

\[\begin{align} \lambda_j \Phi(x_q)^T v_j &= S v_j \\ \lambda_j \sum_{r=1}^n \alpha_{rj} \Phi(x_q)^T \Phi(x_r) &= \frac{1}{n} \sum_{l=1}^n \sum_{i=1}^n \alpha_{ij} \Phi(x_q)^T \Phi(x_l) k(x_l, x_i)\\ n \lambda_j \sum_{r=1}^n k(x_q, x_r) \alpha_{rj} &= \sum_{i=1}^n \left( \sum_{l=1}^n k(x_q, x_l) k(x_l, x_i) \right) \alpha_{ij} \\ \end{align}\]Hopefully you can see that in the LHS sum between the kernel and \(\vec{\alpha_j} = \{\alpha_{1j}, \alpha_{2j}, ..., \alpha_{nj}\}\) is a matrix product, more specifically a dot product (!). And the right hand side has a couple dot products, that we can represent as the following where \(K_{qp} = k(x_q, x_p)\).

\[\begin{align} \lambda_j \Phi(x_q)^T v_j &= S v_j \\ n \lambda_j \sum_{r=1}^n k(x_q, x_r) \alpha_{rj} &= \sum_{i=1}^n \left( \sum_{l=1}^n k(x_q, x_l) k(x_l, x_i) \right) \alpha_{ij} \\ n \lambda_j \sum_{r=1}^n K_{qr} \alpha_{rj} &= \sum_{i=1}^n (K^2)_{qi} \alpha_{ij} \\ n \lambda_j K \vec{\alpha}_j &= K^2 \vec{\alpha}_j \\ \end{align}\]Assuming that \(K\) has an inverse then we get a new eigenvalue problem,

\[\begin{align} \beta_j \vec{\alpha}_j = n \lambda_j \vec{\alpha}_j = K \vec{\alpha}_j . \end{align}\]In essence, instead of taking the eigenvalues and eigenvectors of the covariance matrix we take the eigenvalues and eigenvectors of the gram matrix!

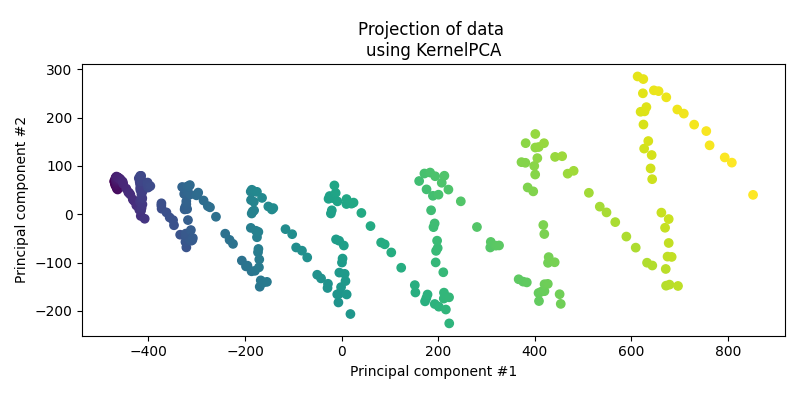

Now let’s go back to the following dataset.

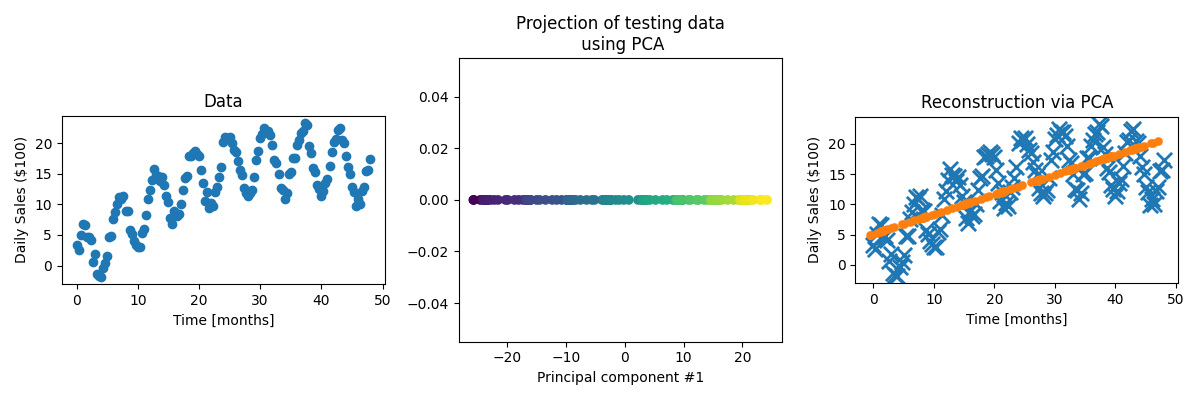

First let’s actually see how the standard PCA performs on the data.

For the data, it’s obviously done the best it can when presuming a linear relationship but is clearly missing some key information.

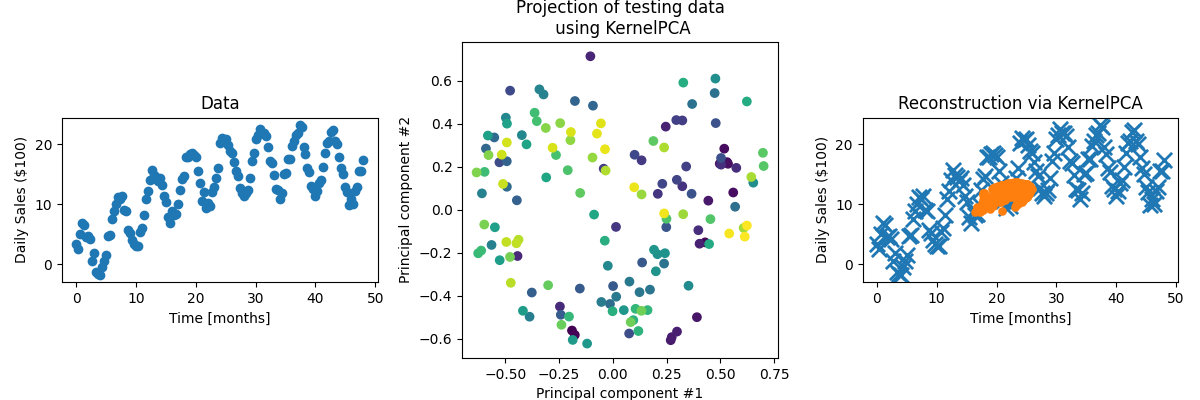

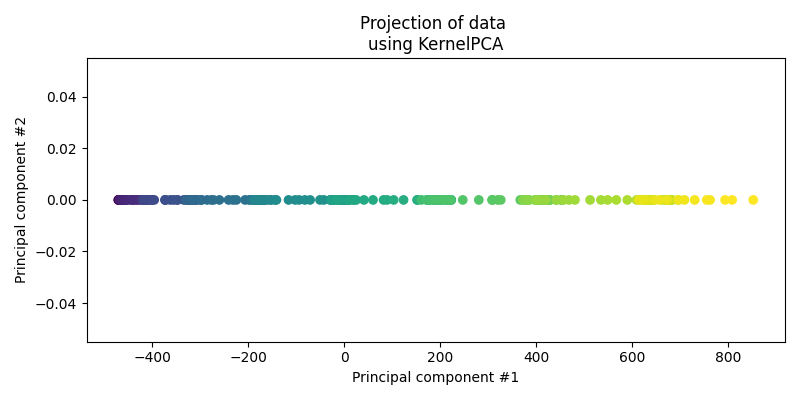

Now applying kernel PCA, we’re definitely seeing a periodic behaviour with some periodicity on the scale of ~6 months? And I’ll just guess a length scale around 5. Let’s try just the periodic kernel and see how it does.

Not very good! The projection of the data into the components is Which is good! Because there’s obviously some polynomial (quadratic I would guess) behaviour so it’d be weird if we got everything to work with just the periodic kernel. Let’s multiply the periodic and polynormial degree 2 kernels together and see how we do. The data in the projected space looks like…

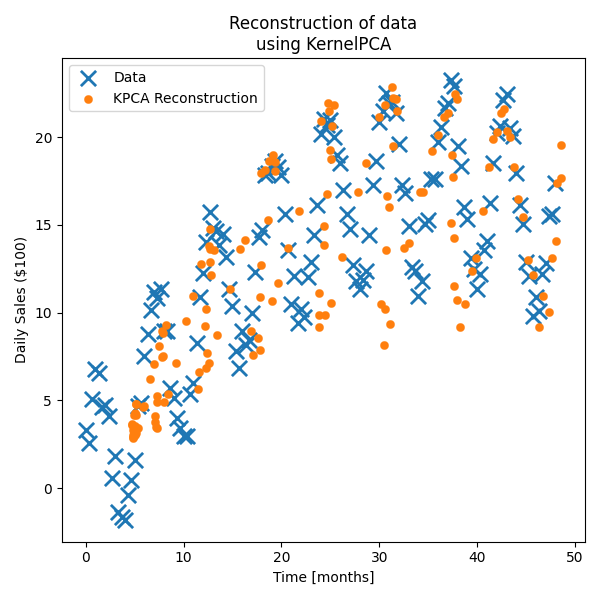

We can see that one component seems to be broadly tracking time and the other the amplitude which is a good sign. Let’s see how well we can reconstruct the data (extra easy as the testing data is also the training data).

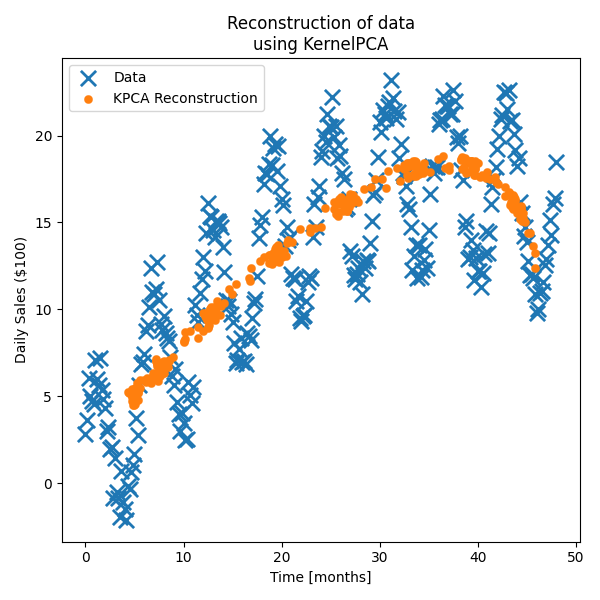

Much better! Still not fantastic if we’re being honest, but it’s obviously picking up some of the periodic and quadratic behaviour. Now let’s see how much information using 1 component KPCA retains with the same amount of training data but

Cool! We’ve lost the periodicity but we’ve actually kinda got the trend better than for 2 components?

Gaussian Processes

Resources

- A Practical Guide to Gaussian Processes

- Interactive Gaussian Process Visualization

- Gaussian process regression demo

- Gaussian Processes for Machine Learning

- 2023-01-09 PRML - From Bayesian Linear Regression to Gaussian processes - Stefan Harmeling

- A Visual Exploration of Gaussian Processes - distill

The Gist

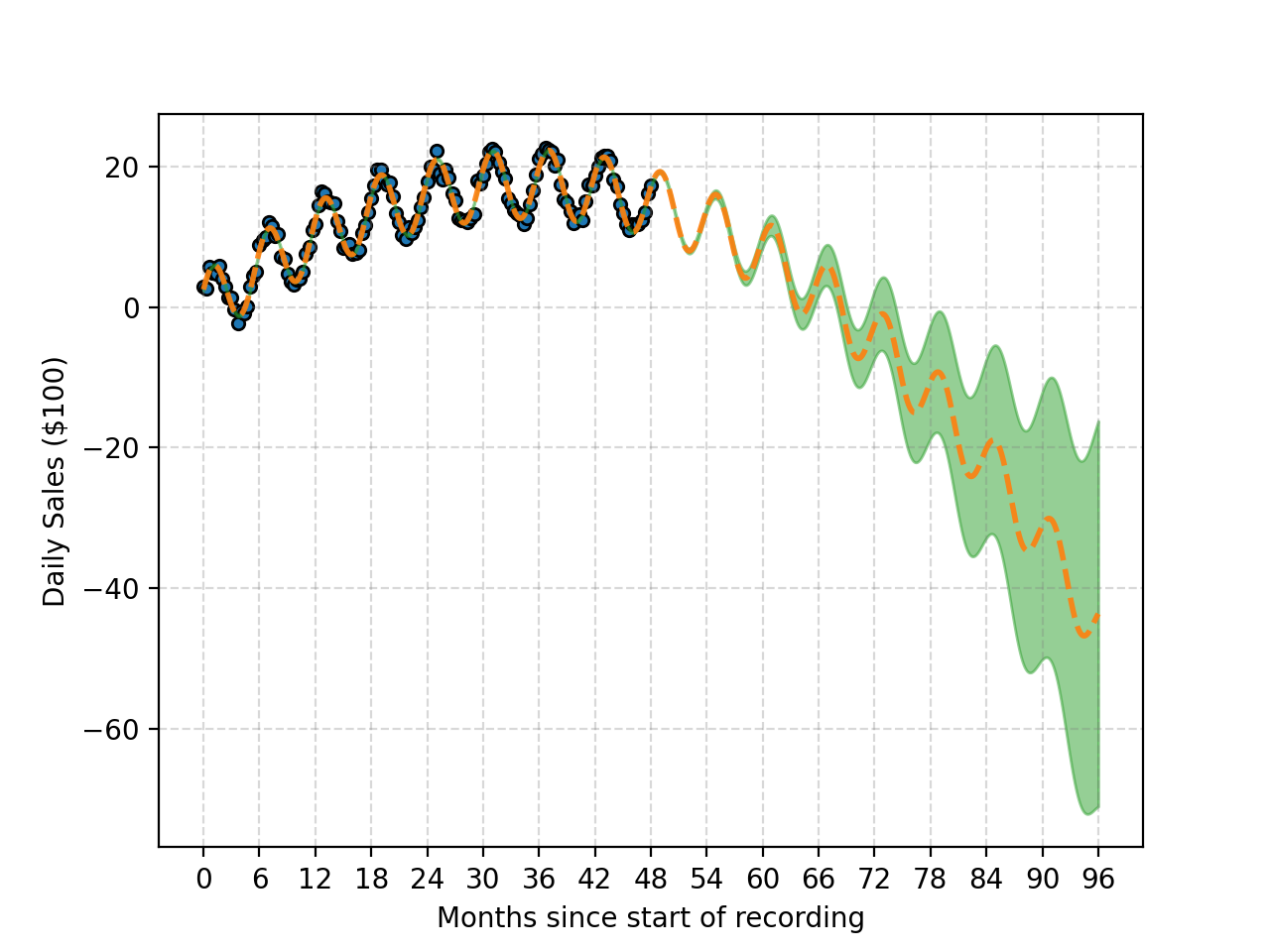

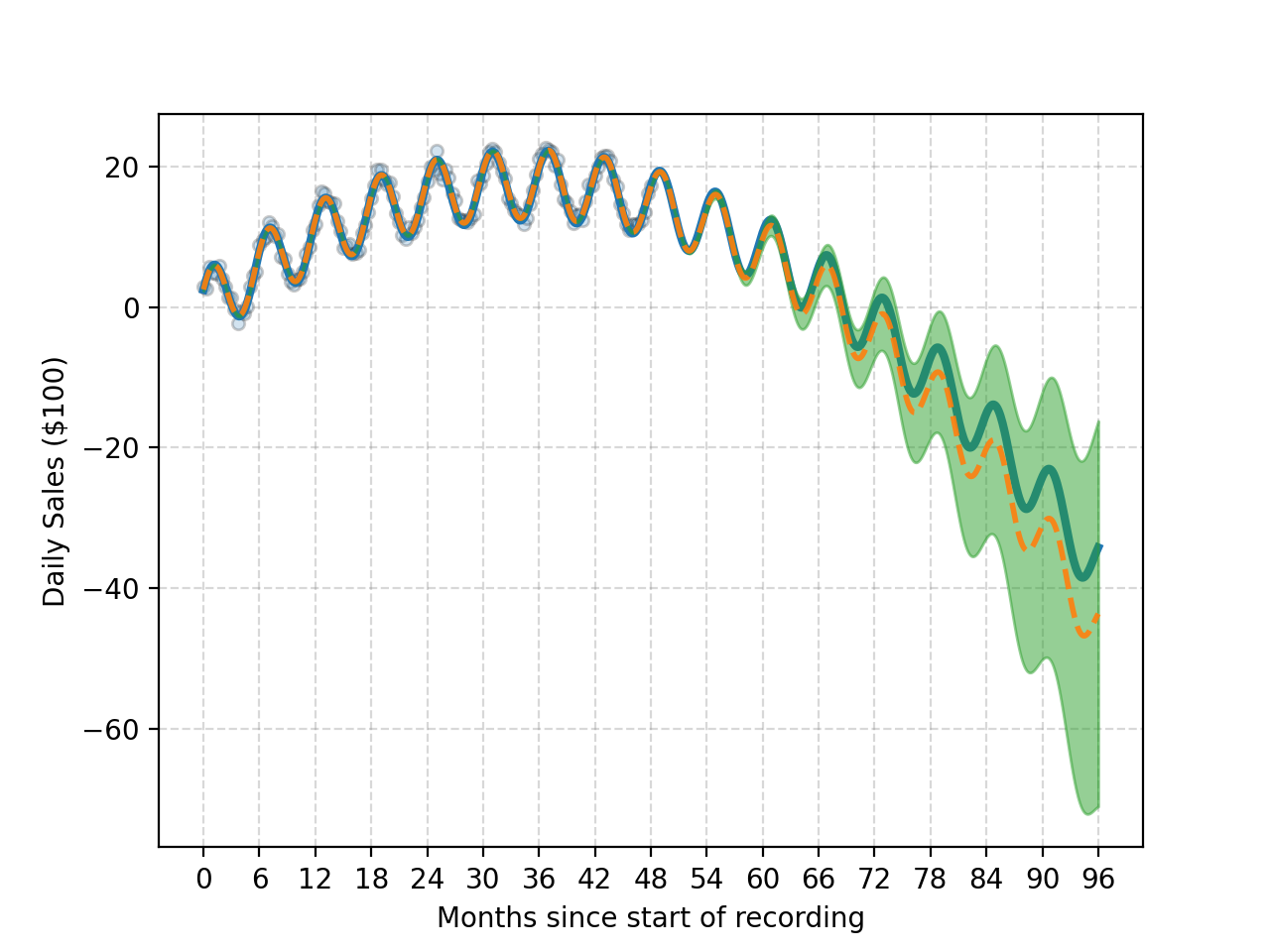

Gaussian processes is where I want to finish this post. It and KPCA were the main topics that I wanted to cover. But unlike KPCA, Gaussian processes to me were much harder to initially get a grasp on. However, if you’ve followed me up until this point, you’ve already done the hard work, because I’ve secretly already gone through the most of the main ideas of Gaussian processes through the sections on Kernel Ridge Regression. In fact one can (and one will) show that Kernel Ridge Regression is the mean result of the Gaussian process result.

(For those already ahead of me I’m quite following the weight-space explanation here)

So back-tracking a little through the section on standard Ridge Regression, we were interested in fitting a straight line to data, encapsulated in the likelihood.

\[\begin{align} y_i \sim \mathcal{N}(X\vec{\alpha}, \sigma^2 I), \end{align}\]or maybe more formulaically,

\[\begin{align} p(Y|X, \vec{\alpha}) &= \prod_{i=1}^N p(y_i | x_i, \vec{\alpha}, \sigma) \\ &= \prod_{i=1}^N \frac{1}{\sqrt{2\pi \sigma^2}} \exp\left(-\frac{(y_i - x_i \vec{\alpha})^2}{2\sigma^2}\right) \\ &= (2\pi \sigma^2)^{-N/2} \exp\left(-\frac{(Y - X\vec{\alpha})^2}{2\sigma^2}\right) \\ \end{align}\]We then said that \(\vec{\alpha} \sim \tau \mathcal{N}(\vec{0}, \vec{1})\) and finally arrived at the expression for the posterior to be proportional to,

\[\begin{align} p(\vec{\alpha}| Y, X) \propto \underbrace{\color{red} \exp\left(-\frac{(Y - X\vec{\alpha})^2}{2\sigma^2}\right) }_{\color{red} \text{likelihood}} \underbrace{\color{blue} \exp\left(-\frac{1}{2\tau^2}\vec{\alpha}^2\right)}_{\color{blue} \text{prior}}. \end{align}\]Then minimisation of the negative log-posterior ensued. With Kernel Ridge Regression replacing any dot products with the kernel function so that we could implicitly project our data into higher dimensional feature spaces and do linear regression there. Thus allowing us to do non-linear regression in the original data space.

There are two ways that we will deviate from the above:

- We will allow for a more general prior on \(\vec{\alpha}\) –> \(\vec{\alpha} \sim \mathcal{N}(\vec{0}, \Sigma_p)\). Where \(p\) denotes the number of parameters or simply the length of \(\vec{\alpha}\).

- We will care about uncertainties.

And that’s it, after that you get Gaussian processes (and the first one isn’t even really unique to Gaussian processes, it’s just generally done more often with them). Doing the first part just means replacing the prior in the posterior expression that we made above. If you’re happy with this explanation, and don’t care about the specifics on the math, then skip to the next subsection.

\[\begin{align} p(\vec{\alpha}| Y, X) \propto {\color{red} \exp\left(-\frac{(Y - X\vec{\alpha})^2}{2\sigma^2}\right) }{\color{blue} \exp\left(-\frac{1}{2} \vec{\alpha}^T \Sigma_p \vec{\alpha}\right)}. \end{align}\]Then through the \({\color{red}\text{m}}{\color{purple}\text{a}}{\color{blue}\text{g}}{\color{green}\text{i}}{\color{orange}\text{c}}\) \({\color{red}\text{o}}{\color{purple}\text{f}}\) \({\color{green}\text{g}}{\color{orange}\text{a}}{\color{red}\text{u}}{\color{purple}\text{s}}{\color{blue}\text{s}}{\color{green}\text{i}}{\color{orange}\text{a}}{\color{red}\text{n}}{\color{purple}\text{s}}\) we can combine the two normal distributions in the following way.

\[\begin{align} p(\vec{\alpha}| Y, X) \propto \exp\left[ -\frac{1}{2} \left(\vec{\alpha} - \hat{\vec{\alpha}}\right)^T \left(\frac{1}{\sigma^2} X^T X +\Sigma_p^{-1} \right) \left(\vec{\alpha} - \hat{\vec{\alpha}} \right) \right]. \end{align}\]With,

\[\begin{align} \hat{\vec{\alpha}} &= \sigma^{-2} (\sigma^{-2} X^T X + \Sigma_p^{-1})^{-1} X^T Y \\ &= \sigma^{-2} \Lambda X^T Y \end{align}\]which is just what we found for \(\vec{\alpha}\) previously8 i.e. that our result from Kernel Ridge Regression was the mean for the Gaussian process result. But now, instead of using the push-through identity we use the full Woodbury matrix identity.

With no relationship between the variables used above,

\[\begin{align} (A + UCV)^{-1} = A^{-1} - A^{-1}U(C^{-1} + VA^{-1}U)^{-1}VA^{-1} \end{align}\]Which we can use to rearrange \(\hat{\vec{\alpha}}\) in a similar way that we did before for \(\vec{\alpha}\) in the Kernel Ridge Regression section with,

\[\begin{align} \Lambda &= \Sigma_p - \Sigma_p X^T (\sigma^2 I + X \Sigma_p X^T)^{-1} X \Sigma_p \\ \end{align}\]Now redefining what we represent as the inner product (now a weighted dot/inner product) as,

\[\begin{align} k(x_i, x_j) = X_i \Sigma_p X_j^T, \end{align}\]and thus,

\[\begin{align} K = X \Sigma_p X^T. \end{align}\]Moving on with this new definition we replace the relevant terms,

\[\begin{align} \hat{\vec{\alpha}} &= \sigma^{-2} \left[ \Sigma_p - \Sigma_p X^T (K + \sigma^2 I)^{-1} X \Sigma_p \right] X^T Y\\ &= \sigma^{-2} \Sigma_p X^T y - \sigma^{-2} \Sigma_p X^T (K + \sigma^2 I)^{-1} \underbrace{X \Sigma_p X}_{K} {}^T Y \\ &= \Sigma_p X^T \sigma^{-2} \left[ I - (K + \sigma^2 I)^{-1} K \right] Y \end{align}\]Now we’re going to do something sneaky and replace \(I\) with \((K + \sigma^2 I)^{-1} (K + \sigma^2 I)\).

\[\begin{align} \hat{\vec{\alpha}} &= \Sigma_p X^T \sigma^{-2} \left[ (K + \sigma^2 I)^{-1}(K + \sigma^2 I) - (K + \sigma^2 I)^{-1} K \right] Y\\ &= \Sigma_p X^T \sigma^{-2} (K + \sigma^2 I)^{-1} (\underbrace{K + \sigma^2 I - K}_{\sigma^2 I}) Y \\ &= \Sigma_p X^T \sigma^{-2} (K + \sigma^2 I)^{-1} (\sigma^2 I) Y\\ &= \Sigma_p X^T (K + \sigma^2 I)^{-1} Y\\ \end{align}\]This is basically in the same form as before except \(\Sigma_p\) isn’t assumed to be a constant term multiplied by the identity matrix. So we continue on with the exact same thinking that now we have our solution, let’s project some input data, which when in the infinite dimensional feature space I will refer to as \(\phi(x_*)\) for a second, putting it into an approximate value for output data variable \(y_*\).

\[\begin{align} y_* = \phi(x_*)^T \hat{\vec{\alpha}} = \phi(x_*)^T \Sigma_p X^T (K + \sigma^2 I)^{-1} Y, \end{align}\]defining \(\phi(x_*)^T \Sigma_p X^T = k(x_*, X)\),

\[\begin{align} y_* = k_*^T (K + \sigma^2 I)^{-1} Y, \end{align}\]With this last term being equivalent to the projection that we got in the kernel ridge regression. i.e. We’ve just slightly generalise our kernel ridge regression result.

If we want something to sample from and get uncertainties for, we need a probability density. Starting over from here,

\[\begin{align} p(\vec{\alpha}| Y, X) \propto \exp\left[ -\frac{1}{2} \left(\vec{\alpha} - \hat{\vec{\alpha}}\right)^T \left(\frac{1}{\sigma^2} X^T X +\Sigma_p^{-1} \right) \left(\vec{\alpha} - \hat{\vec{\alpha}} \right) \right]. \end{align}\]This encodes the probabilities (up to a constant multiplier) on our parameters \(\vec{\alpha}\), which may be infinite dimensional so isn’t the most handy in practice. What we would want, is uncertainties on our fit, i.e. if we look at a given input \(x_*\) and output \(y_*\) \(p(y_*\vert x_*, \vec{\alpha}, Y, X)\). But then we have the dependency on \(\vec{\alpha}\) still 😢.

What we’re going to do is remove the explicit dependence on \(\vec{\alpha}\) through marginalisation which is basically the reason that I’m using the \(\propto\) instead of \(=\) in the above.

As a refresher, we can describe the conditional probability, \(p(A\vert B)\) via Baye’s theorem,

\[\begin{align} p(A|B) = \frac{p(B|A)p(A)}{p(B)}, \end{align}\]and if \(A\) and \(B\) are continuous quantities, we know the following by the definition of a probability density,

\[\begin{align} 1 = \int_A p(A|B) dA = \int_A \frac{p(B|A)p(A)}{p(B)} dA. \end{align}\]And with a bit of rearrangement using the fact that \(p(B)\) isn’t dependent on \(A\) then we find,

\[\begin{align} p(B) = \int_A p(B|A)p(A) dA. \end{align}\]This process of integrating out the variable \(A\) is called marginalisation and can be thought of as a weighted average of \(p(B\vert A)\) with respect to \(p(A)\)9 or a million other ways that I don’t have the time to go through atm. For us, this basically means that if we have some annoying variable \(A\) in our probability density \(p(B\vert A)\) we can integrate it away presuming we have the probability density \(p(A)\)… 🤔

Well, if we wanted \(p(y_* \vert x_*, X, Y)\) we could perform the following marginalisation,

\[\begin{align} p(y_*|x_*, X, Y) = \int_\vec{\alpha} p(y_*|x_*, \vec{\alpha}) p(\vec{\alpha}| Y, X) d\vec{\alpha}, \end{align}\]where we have \(p(\vec{\alpha} \vert Y, X)\) up to the multiplicative constant \(p(Y\vert X)\) (with respect to \(\vec{\alpha}\)) and is our original observational likelihood for a single point,

\[\begin{align} p(y_*|x_*, \vec{\alpha}) = \frac{1}{\sqrt{2\pi \sigma^2}}\exp\left(-\frac{1}{2} \frac{(y_* - x_*\vec{\alpha})^2}{2\sigma^2} \right). \end{align}\]Looking at just the exponent which I’ll denote with \(E\),

\[\begin{align} E = -\frac{1}{2} \left[ \frac{1}{\sigma^2}(y_*^2 - 2y_* x_* \vec{\alpha} + \vec{\alpha}^T x_*^T x_* \vec{\alpha}) + (\vec{\alpha}^T A \vec{\alpha} - 2\vec{\alpha}^T A \hat{\alpha} + \hat{\alpha}^T A \hat{\alpha}) \right]. \end{align}\]Where I’ve replaced some terms with \(A = \sigma^{-2} X^T X + \Sigma_p^{-1}\) to save on some math steps. We combine terms not involving \(\vec{\alpha}\),

\[\begin{align} M_* &= A + \sigma^{-2} x_*^T x_*\\ m_* &= A\hat{\alpha} + \sigma^{-2} y_* x_*^T \\ C &= \hat{\alpha}^T A \hat{\alpha} + \frac{y_*^2}{\sigma^2}. \end{align}\]This gives us a simplified expression for the exponent,

\[\begin{align} E = -\frac{1}{2} \left[ \vec{\alpha}^T M_* \vec{\alpha} - 2\vec{\alpha}^T m_* + C \right]. \end{align}\]What we want to do is turn this expression into a gaussian, as we expect that we should get one out due to the “magic of gaussians” and then we’d be able to analytically solve for the integration. So we want something like \(( \vec{\alpha} - \text{const1})^T \cdot \text{const2}^{-1} \cdot ( \vec{\alpha} - \text{const1})^T\) then we’ll be set. Hence, we find,

\[\vec{\alpha}^T M_* \vec{\alpha} - 2\vec{\alpha}^T m_* = (\vec{\alpha} - M_*^{-1}m_*)^T M_* (\vec{\alpha} - M_*^{-1}m_*) - m_*^T M_*^{-1} m_*\] \[E = -\frac{1}{2} \left[ \underbrace{(\vec{\alpha} - \dots)^T M_* (\vec{\alpha} - \dots)}_{\text{Gaussian in } \vec{\alpha}} \underbrace{- m_*^T M_*^{-1} m_* + C}_{\text{Terms independent of } \vec{\alpha}} \right]\]We can then pull the constants out of the integral, and now the the gaussian in \(\vec{\alpha}\) bit integrates to a constant (the normalization factor \((2\pi)^{D/2}\vert M_*\vert^{-1/2}\)). We are left with the predictive distribution for \(y_*\), which is determined just by the ‘constants’ terms in the exponent we pulled out:

\[\begin{align} p(y_*|x_*, X, Y) \propto \exp(-\frac{1}{2} \left[ C - m_*^T M_*^{-1} m_* \right]) \end{align}\]Now we want something that looks like a gaussian again, because gaussians (or more specifically normal distributions) are \({\color{red}\text{m}}{\color{purple}\text{a}}{\color{blue}\text{g}}{\color{green}\text{i}}{\color{orange}\text{c}}{\color{red}\text{a}}{\color{purple}\text{l}}\). So we want to expand this and get it into something like \(( y_* - \text{mean})^T \cdot \text{cov}^{-1} \cdot ( y_* - \text{mean})\). We already known what the constant will be, it’s the mean prediction, which is the projection of our data onto the mean parameter vector, i.e. what we got from the kernel ridge regression part above. Hence, we just need to find the covariance matrix, so we’ll similarly throw out terms that don’t involve \(y_*\) so that we can just complete the square. So then we can skip the math and just look for the \(y_*^T \cdot \text{cov}^{-1} \cdot y_*\) term.

\[\begin{align} p(y_*|x_*, X, Y) &\propto \exp(-\frac{1}{2} \left[ C - m_*^T M_*^{-1} m_* \right]) \\ &= \exp(-\frac{1}{2} \left[ \left(\hat{\alpha}^T A \hat{\alpha} + \frac{y_*^2}{\sigma^2}\right) \\ - \left(A\hat{\alpha} + \sigma^{-2} y_* x_*^T\right)^T M_*^{-1} \left(A\hat{\alpha} + \sigma^{-2} y_* x_*^T\right) \right]) \\ &= \exp(-\frac{1}{2} \left[ \left(\hat{\alpha}^T A \hat{\alpha} + \frac{y_*^2}{\sigma^2}\right) \\ - \left(\hat{\alpha}^T A^T + \sigma^{-2} x_* y_*^T\right) M_*^{-1} \left(A\hat{\alpha} + \sigma^{-2} y_* x_*^T\right) \right]) \\ &= \exp(-\frac{1}{2} \left[ \frac{y_*^2}{\sigma^2} \\ - \left(\sigma^{-2} x_* y_*^T\right) M_*^{-1} \left(\sigma^{-2} y_* x_*^T\right) \right])\cdot {C_\text{const}} \\ \end{align}\]Now we’re going to remember the fact that in this post we will assume \(y_*\) is a scalar so we \(y_*^T = y_*\) and move it around to find that the term just involving \(y_*\) or simply, the covariance \(\Lambda_*\) looks like,

\[\begin{align} p(y_*|x_*, X, Y) &= \exp(-\frac{1}{2} \left[ \frac{y_*^2}{\sigma^2} \\ - \left(\sigma^{-2} x_* y_*^T\right) M_*^{-1} \left(\sigma^{-2} y_* x_*^T\right) \right])\cdot {C_\text{const}} \\ &= \exp\left(-\frac{1}{2} y_*^2 \left[ \frac{1}{\sigma^2} - \frac{1}{\sigma^4} x_* M_*^{-1} x_*^T \right] \right)\cdot {C_\text{const}} \end{align}\]I swear we’re almost done. Now, the term in the brackets represents the inverse variance of our prediction \(y_*\). But it looks similar to the Woodbury Identity once we expand that middle term \(M_*^{-1} = (A + \sigma^{-2} x_*^T x_*)^{-1}\).